Introduction

KNIME Business Hub is a customer-managed Hub instance. Once you have a license and have completed the installation, you have access to Hub resources and can customize specific features. You can also give your employees access to these resources, organize them into teams, and give them the ability to manage specific resources.

This guide provides information on how to administer a KNIME Business Hub instance.

To install KNIME Business Hub, please refer to the KNIME Business Hub Installation Guide.

A user guide is also available here, which contains instructions on how to perform team administration tasks. Team admins are designated by the global Hub admin. They control their team’s allocated resources, can add users to their team, create execution contexts, and have an overview of the team’s deployments. In this way, Hub administration tasks are distributed to the users who have the best overview of their own team’s needs.

Create and manage teams

A team is a group of users on KNIME Hub that work together on shared projects. Specific Hub resources can be owned by a team (e.g. spaces and the contained workflows, files, or components) so that the team members will have access to these resources.

Sign in to the KNIME Business Hub instance with the admin user name by visiting the KNIME Business Hub URL.

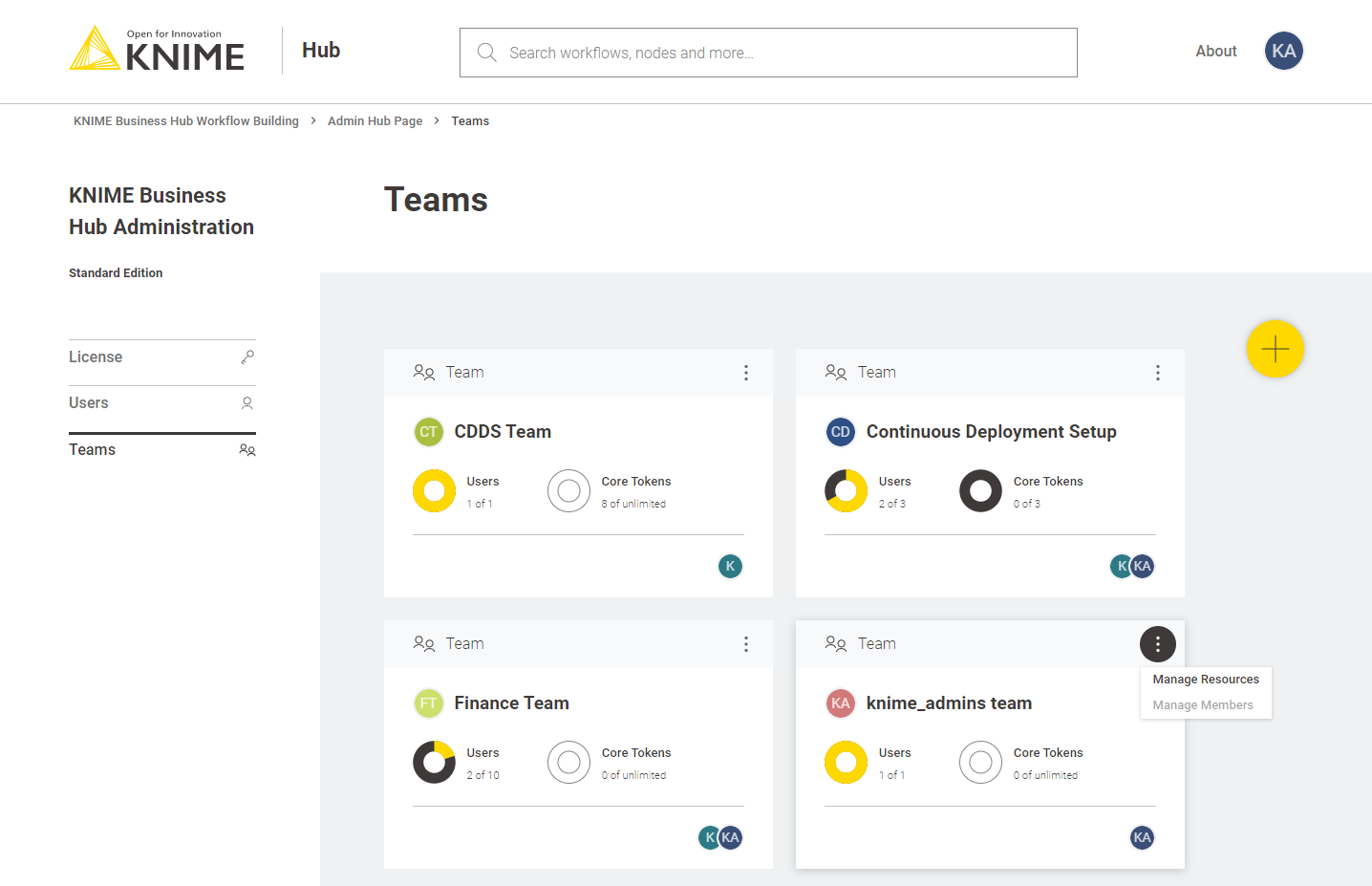

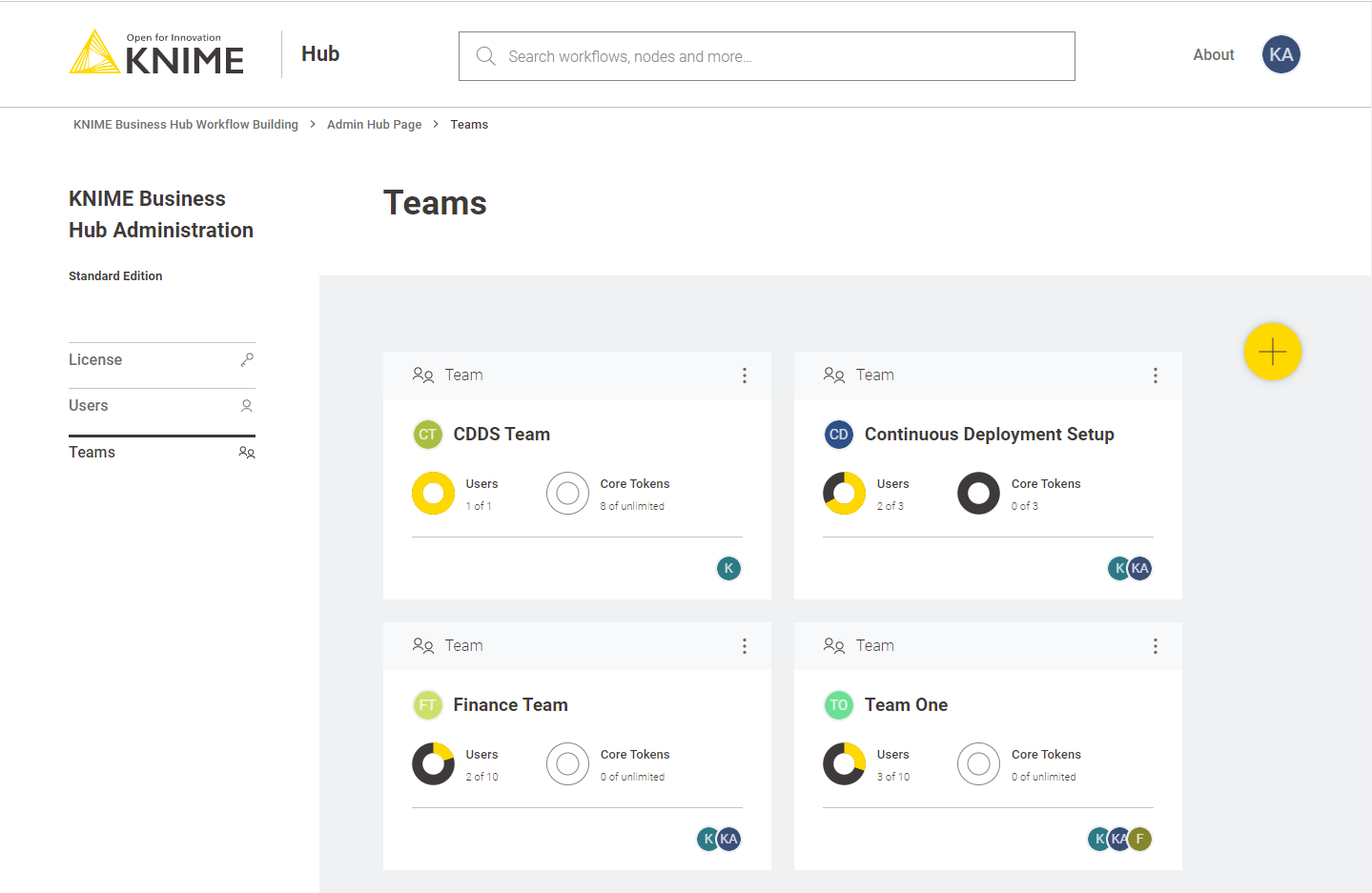

Then click your profile picture in the upper-right corner of the page and select Administration to go to the KNIME Business Hub Administration page. Click Teams in the menu on the left. Here you can see an overview of the existing teams and manage them.

Create a team

To create a new team click the yellow plus button on the right.

After you create a new team you will be redirected to the new team’s page. Here you can change the name of the team. To do so click the name of the team under the team logo on the left side of the page. The name of the team can also be changed at any point in time by the team administrator.

From the team’s page you can:

- Add members to the team
- Change their role, for example to promote a user to the team administrator role

Here you might for example want to assign the team to a team administrator. To do so click Manage team and enter the user name of the user you want to assign as a team administrator for the current team. Then click on the role and select Member and Admin. At the same time you might want to delete the global admin user name from the team members list. To do so click the bin icon corresponding to that user. Click Save changes to finalize the setting.

Allocate resources to a team

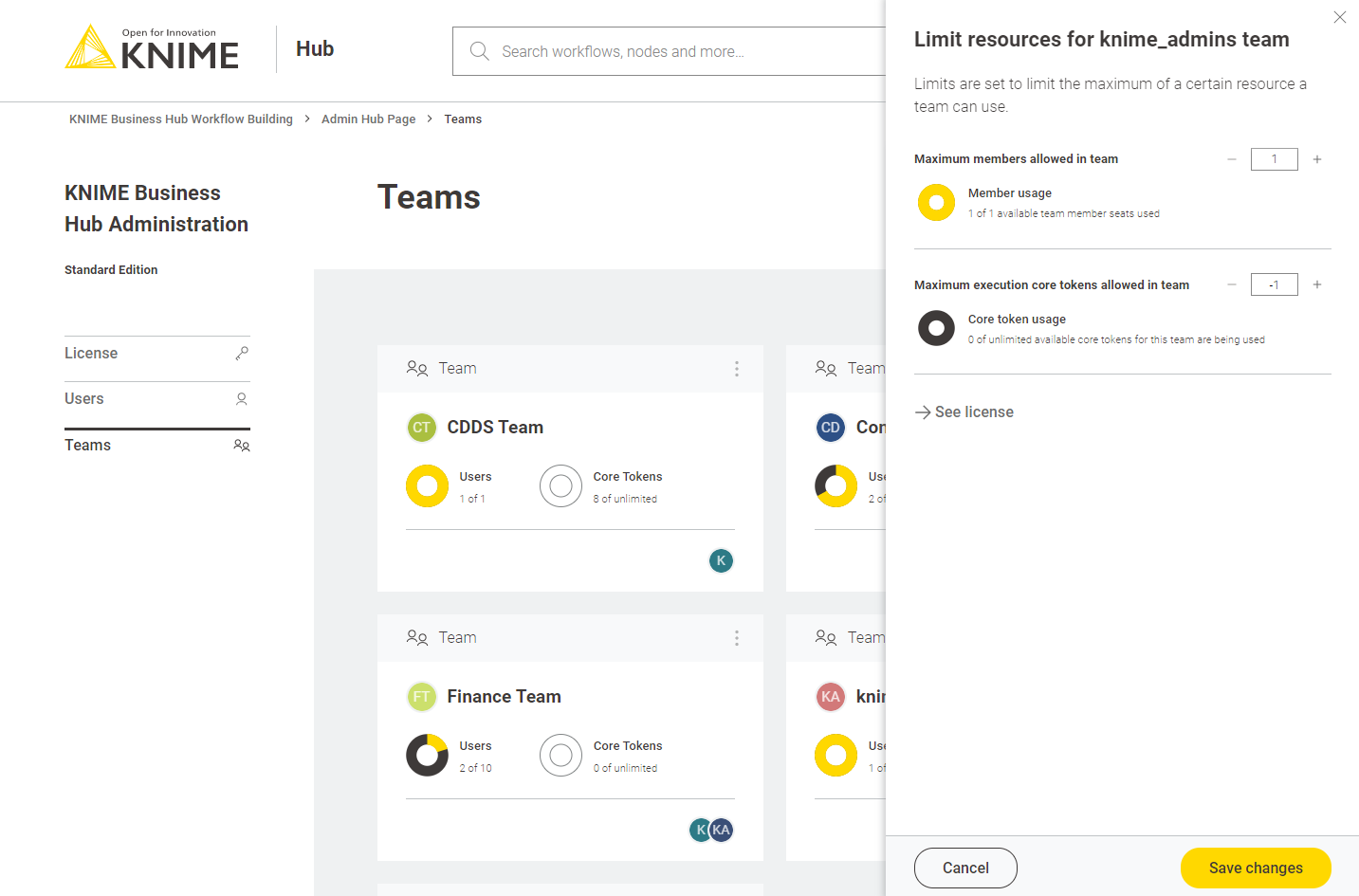

To allocate resources to a team, navigate to the KNIME Business Hub Administration page and select Teams from the menu on the left.

Here you can see an overview of the available teams, their allocated resources, and their current usage. Click the three dots in the upper-right corner of the card corresponding to the team you want to allocate resources to.

Select Manage resources from the menu. A panel on the right will open where you can select the resources you want to allocate.

Here you can change:

- The maximum number of members allowed in that team
- The maximum number of execution vCore tokens allowed for that team

Click Save changes when you have set up the resources for the current team.

Manage team members

From the KNIME Business Hub Administration page you can also manage the team members.

Click the three dots in the upper-right corner of the card corresponding to the team. From the menu that opens select Manage members. In the side panel that opens you can add members to the team or change a team member’s role.

Delete a team

From the KNIME Business Hub Administration page you can also delete a team.

Click the three dots in the upper-right corner of the card corresponding to the team. From the menu that opens select Delete. Be aware that this operation will also delete all the team’s resources, data, and deployments.

Execution resources

As mentioned in the previous section, as a Hub admin you can assign execution resources to each team.

Team admins will then be able to build execution contexts according to the execution resources that you assigned to their team. These execution contexts will then be dedicated specifically to that team.

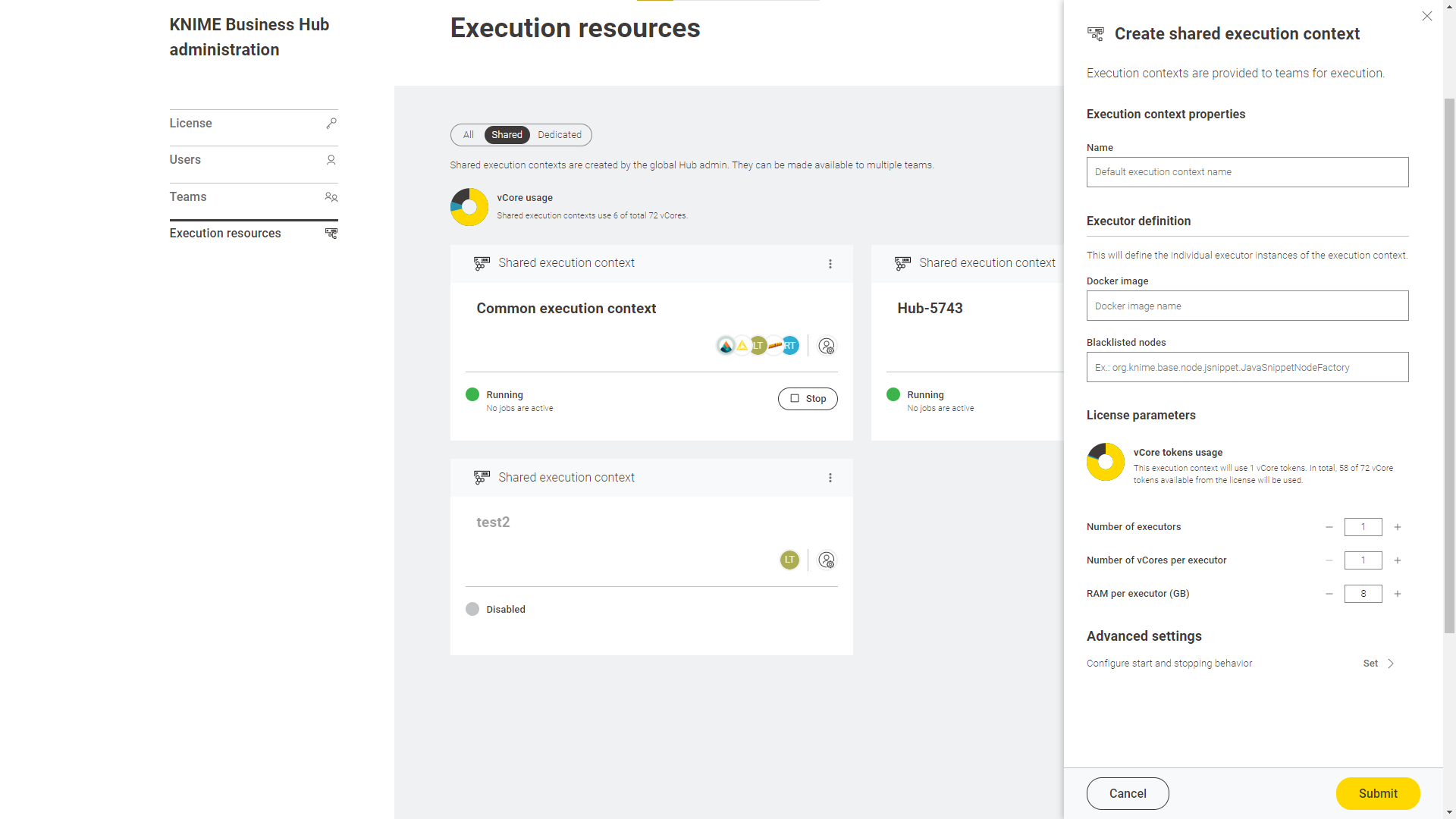

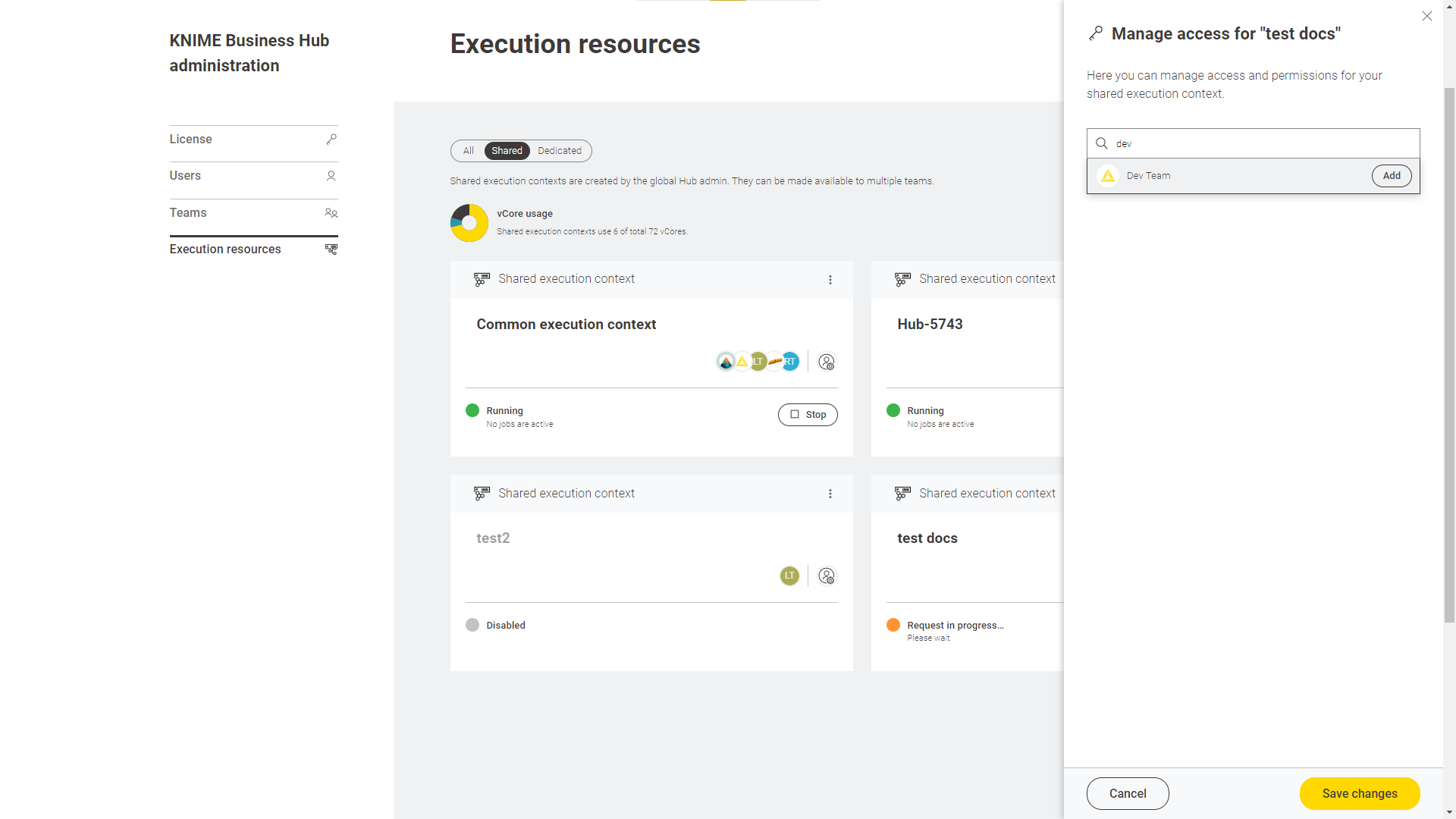

As a Hub admin you can also create a shared execution context. Once you create one you can share it with multiple teams.

For an overview of all the available execution contexts click your profile icon on the top right corner of the KNIME Hub and select Administration from the drop-down.

You will then be redirected to the KNIME Business Hub Administration page.

Here, select Execution resources from the menu on the left.

On this page you can see an overview of all the execution contexts available on the Hub.

With the toggle at the top you can filter the list to show only a specific type of execution context available in the Hub instance.

Select:

- Shared: Shared execution contexts are created by the Hub admin. They can be made available to multiple teams.
- Dedicated: Dedicated execution contexts are created by the team admins for their team. Dedicated execution contexts are exclusively used by a single team.

|

Each team can by default have a maximum of 10 execution contexts and 10000 jobs. As a KNIME Hub admin you can change these limits via a REST API call like the following: ``PUT https://api.<base-url>/execution/limits/{scopeId}/{limitKey}`` where |

Advanced configuration of execution contexts

Execution contexts can also be created and edited via the Business Hub API.

Find more information on the available configurations in the Advanced configuration of execution contexts section in KNIME Business Hub User Guide.

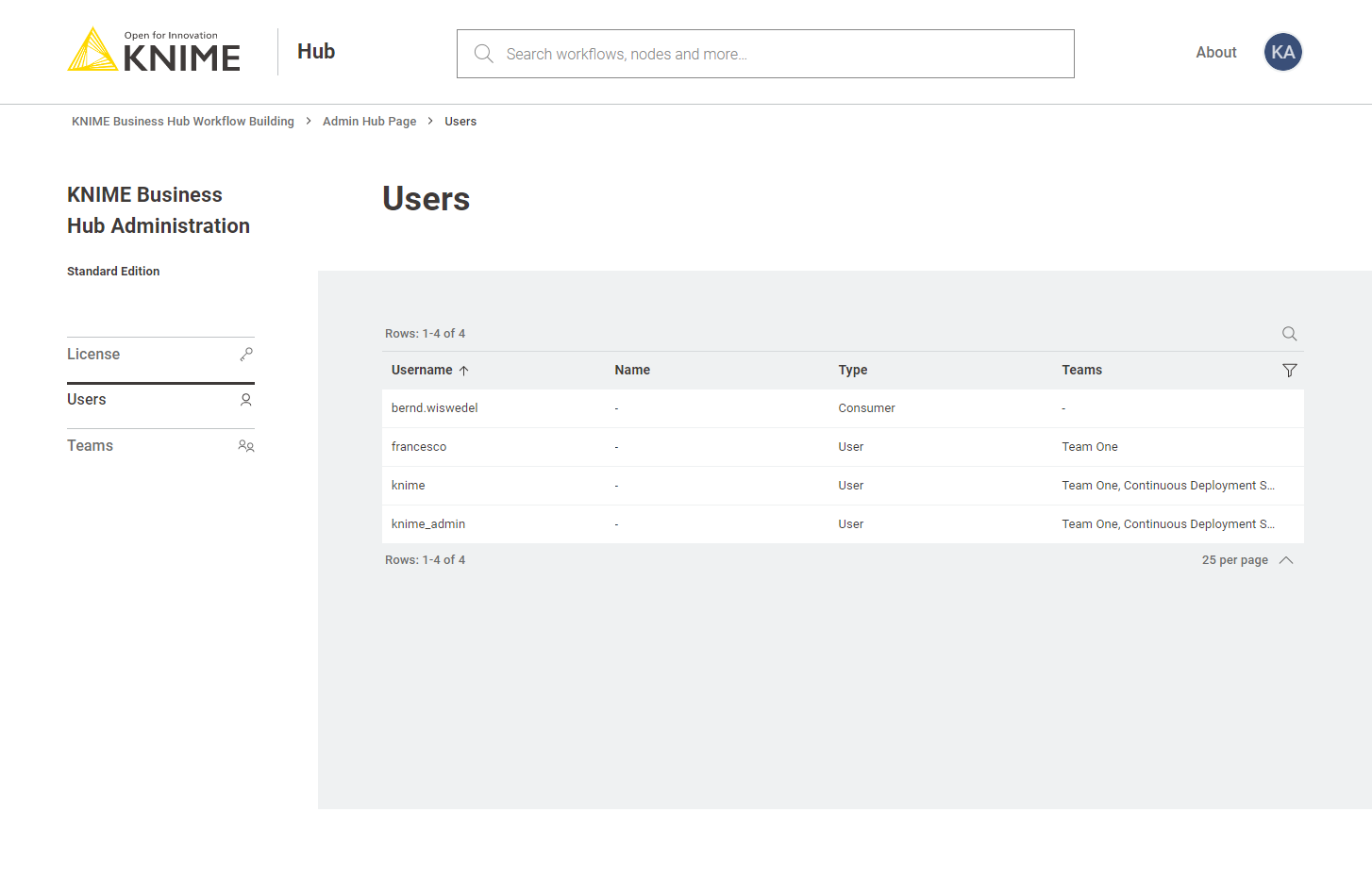

Users management

To see a list of all the users that have access to your KNIME Business Hub instance you can go to the KNIME Business Hub Administration page and select Users from the menu on the left.

Here you can filter the users based on their team, the type of users and their username and name. To do so click the funnel icon in the users list. You can also search the users by using the magnifier icon and typing the keyword in the field that appears.

Delete a user

To delete a user follow these steps in this order:

- Delete the user from the KNIME Business Hub Administration page. Click the three dots and select Delete. You will need to confirm the user deletion in the window that opens by clicking Delete user. Be aware that this action will also delete all data from the deleted user and it will not be possible to restore the user.
- Delete the user from Keycloak. Follow the steps in the next section to access Keycloak and manage users.

Make a user Hub admin

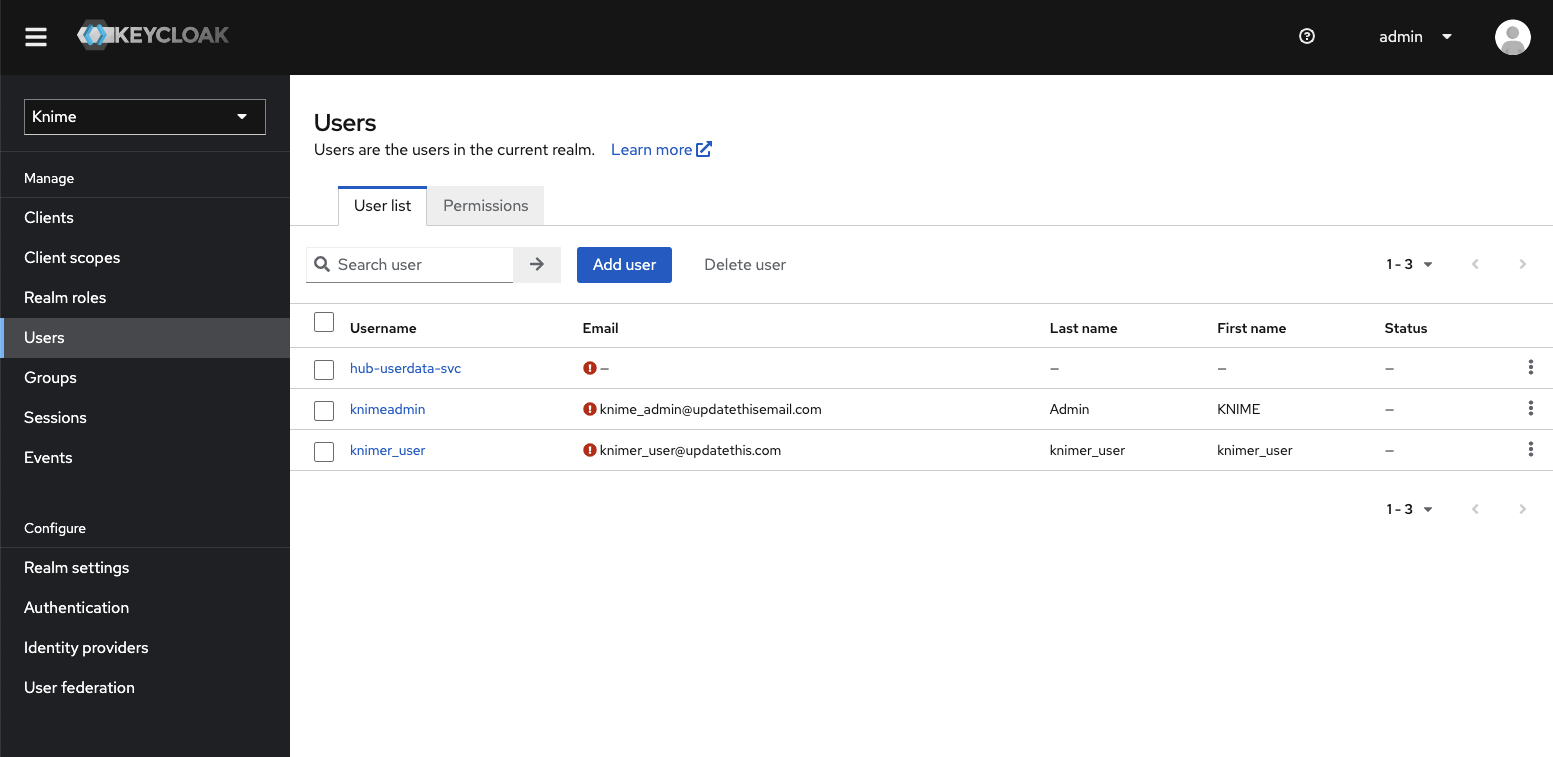

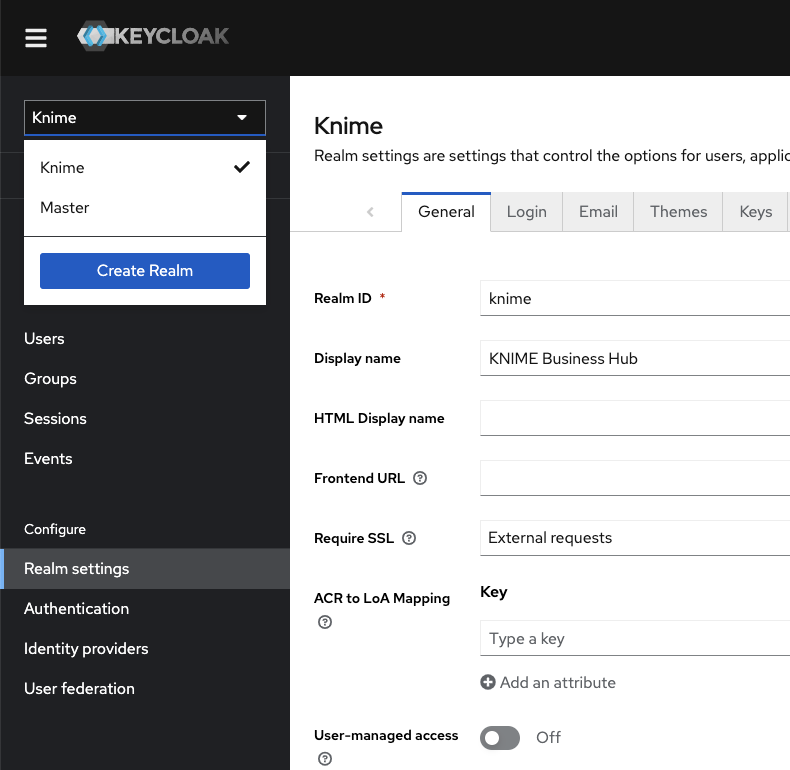

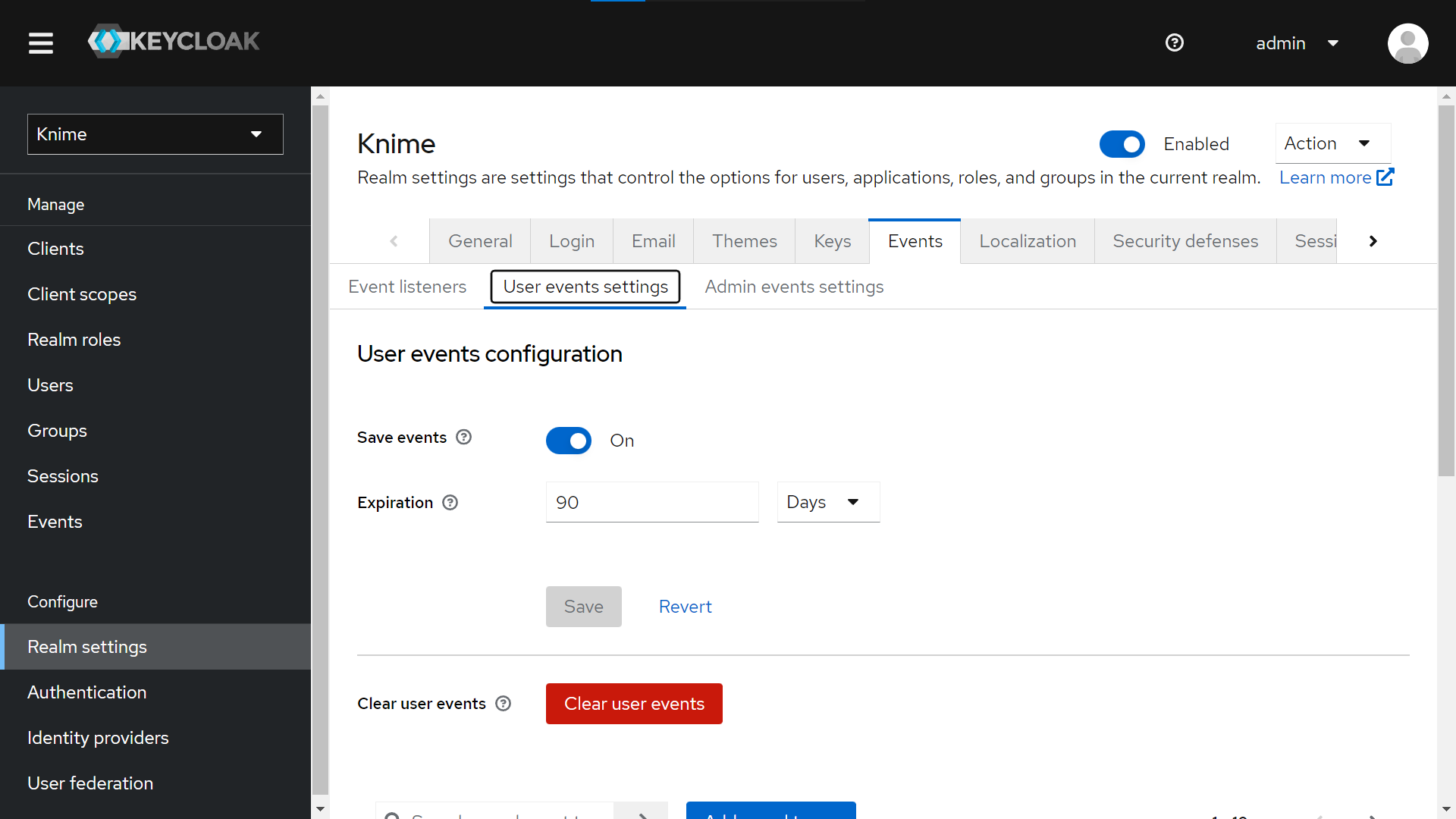

Users are managed in the backend via the Keycloak instance embedded in KNIME Business Hub. Therefore, the operation of promoting a registered user to the role of Hub admin is done in Keycloak.

To do so follow these steps:

-

First you will need to access the keycloak admin console. To do so you will need the credentials that are stored in a kubernetes secret called

credential-knime-keycloakin theknimenamespace. To get the required credentials, you need to access the instance the Business Hub is running on and run the following command:kubectl -n knime get secret credential-knime-keycloak -o yaml

This will return a file that contains the

ADMIN_PASSWORDand theADMIN_USERNAME. Please notice that they are bothbase64encrypted. In order to get the decrypted username and password, you can run the following commands:echo <ADMIN_PASSWORD> | base64 -d echo <ADMIN_USERNAME> | base64 -d

-

Then go to

http://auth.<base-url>/auth/and login. -

In the top left corner click the dropdown and select the "Knime" realm, if you are not there already.

Figure 8. Select the "Knime" realm

Figure 8. Select the "Knime" realm -

Navigate to the Users menu and search for the user by name or email:

In order for a user to appear in this list, it is necessary that they have logged into your KNIME Business Hub installation at least once.

-

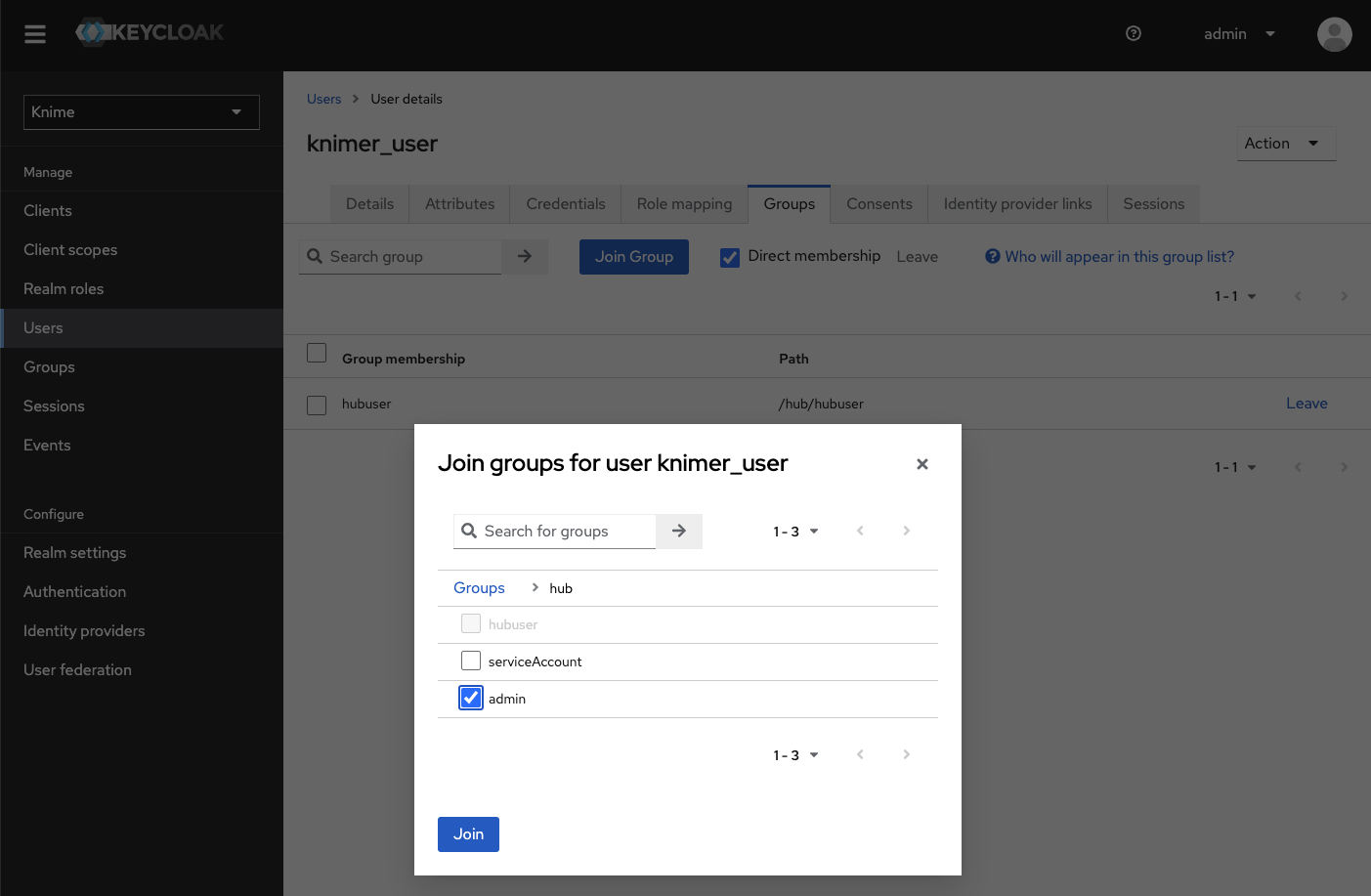

Click the user and go to the Groups tab. Click Join Group and either expand the hub group by clicking it, or search for "admin". Select the admin group and click Join:

Figure 10. Making a user a Hub admin in Keycloak. If you are searching for the group then the group might show up under its full path "/hub/admin"

Figure 10. Making a user a Hub admin in Keycloak. If you are searching for the group then the group might show up under its full path "/hub/admin" -

Done. The user now has the role of Hub admin, and can access the admin pages from within the Hub application to e.g., create teams or delete users.
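The credential-decoding step above can also be scripted. The following is a minimal Python sketch, assuming the secret was exported as JSON (for example with `-o json` instead of `-o yaml`) and that the credentials sit under the manifest's `data` key, as is standard for Kubernetes secrets. The function name `decode_secret_data` and the sample credentials are hypothetical.

```python
import base64
import json


def decode_secret_data(secret_json: str) -> dict[str, str]:
    """Return the base64-decoded entries of a Kubernetes Secret manifest."""
    secret = json.loads(secret_json)
    # Kubernetes stores Secret values base64-encoded under the "data" key.
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret.get("data", {}).items()
    }


if __name__ == "__main__":
    # Illustrative manifest with made-up credentials, not real kubectl output.
    example = json.dumps(
        {
            "data": {
                "ADMIN_USERNAME": base64.b64encode(b"admin").decode("ascii"),
                "ADMIN_PASSWORD": base64.b64encode(b"s3cret").decode("ascii"),
            }
        }
    )
    for key, value in decode_secret_data(example).items():
        print(f"{key}={value}")
```

Because base64 is only an encoding, anyone who can read the secret can recover the credentials, so access to the `knime` namespace should be restricted accordingly.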

Expose external groups inside KNIME Business Hub

As a Global KNIME Hub administrator you can configure groups that are provided via an external identity provider to be exposed inside the KNIME Business Hub instance.

Two possible sources for your external groups are:

- Groups are provided within the access token of your OIDC provider.
- Groups are imported from LDAP by federating the login.

External OIDC provider

Assume you have an identity provider that provides groups through a groups claim in the access token.

{ ..., "groups": [ "finance", "marketing", "data" ] }

First you need to configure Keycloak so that it can map these groups to a user attribute. The second step is to add a mapper that maps this user attribute into Keycloak’s tokens.

Your third-party identity provider should have been set up already. Keycloak has to be configured as follows:

First step is to add an Attribute Importer Mapper.

-

In Keycloak select realm Knime in the top left dropdown menu

-

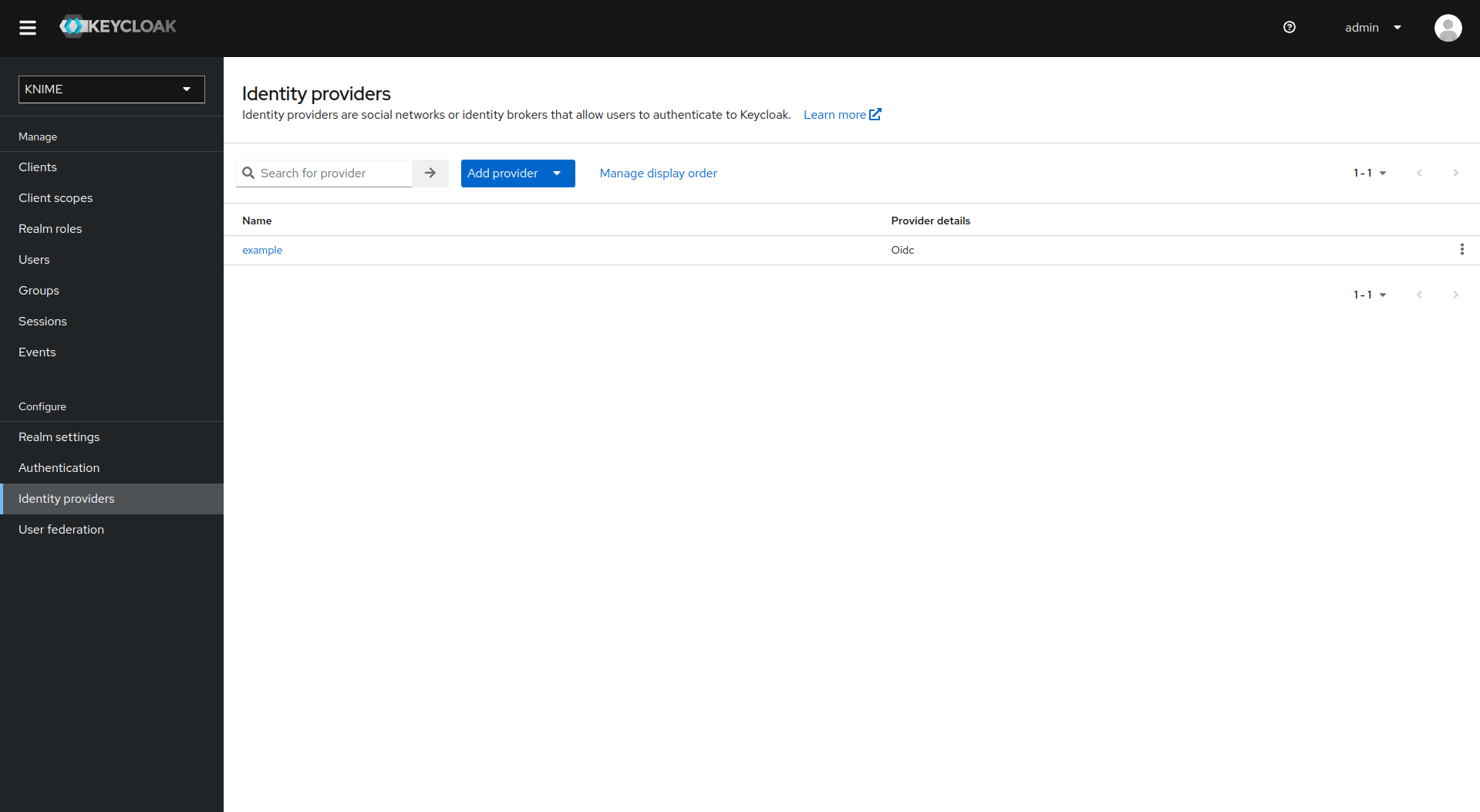

On the left tab select Identity Providers

-

Select your third-party provider

-

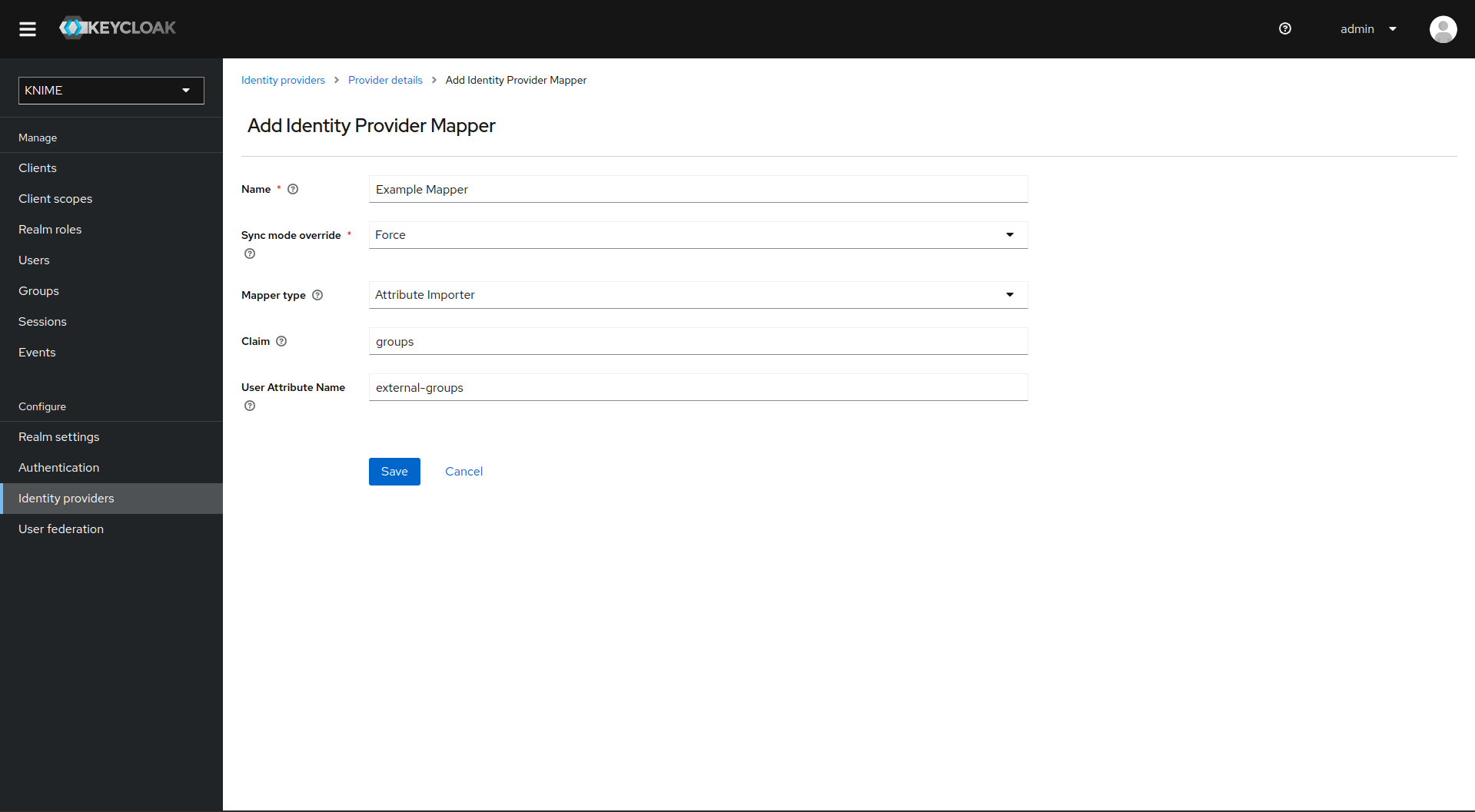

Switch to the tab Mappers and click on Add mapper

-

Provide a name for the mapper and set the Sync mode override to Force to ensure that the user’s group memberships are updated upon every login

-

Set Mapper type to Attribute importer

-

Enter the Claim that contains the external groups in the original token (in our example groups)

-

In the User Attribute Name field enter external-groups

-

Click on Save

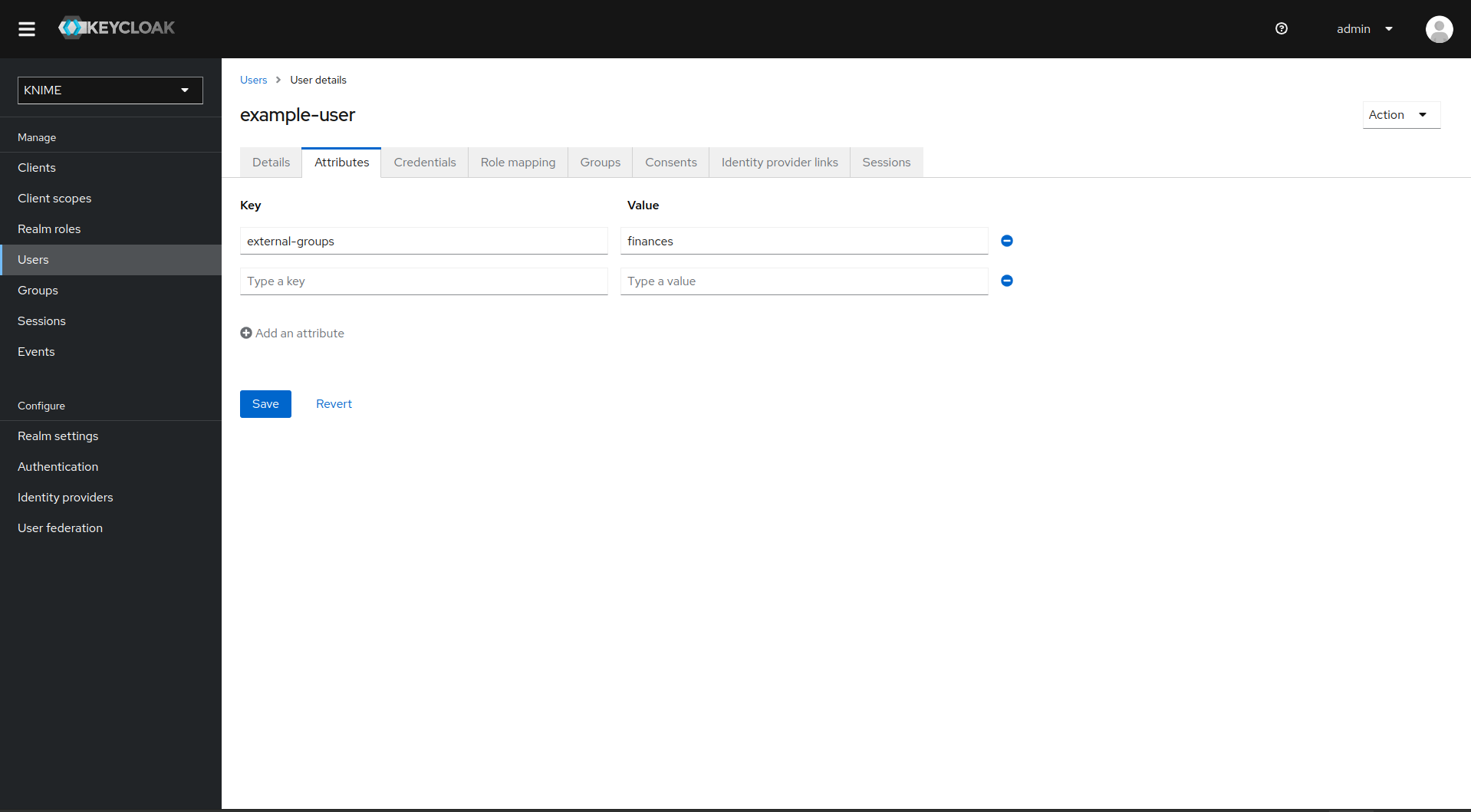

Now, every user in Keycloak who logged in after the mapper has been added will have an external-groups attribute associated like in the following picture:

Now, the external groups are known to Keycloak. To expose them inside KNIME Business Hub they need to be mapped into the access tokens issued by Keycloak. For this a second mapper needs to be added, that maps the user attribute external-groups to a claim in the user’s access token.

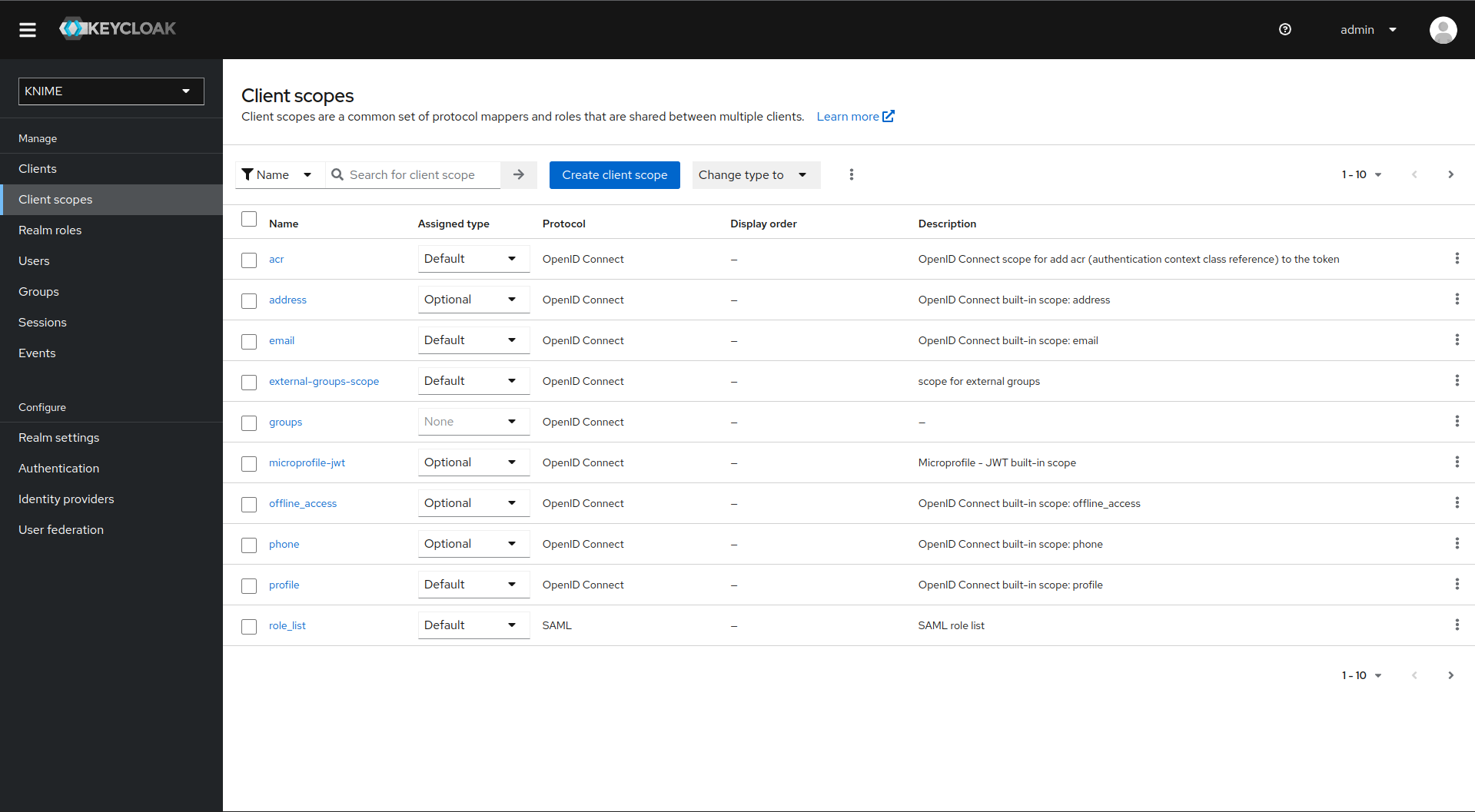

To do this you need to add a client scope, which includes a mapper for the user attribute.

-

On the left tab select Client scopes

-

Select groups

-

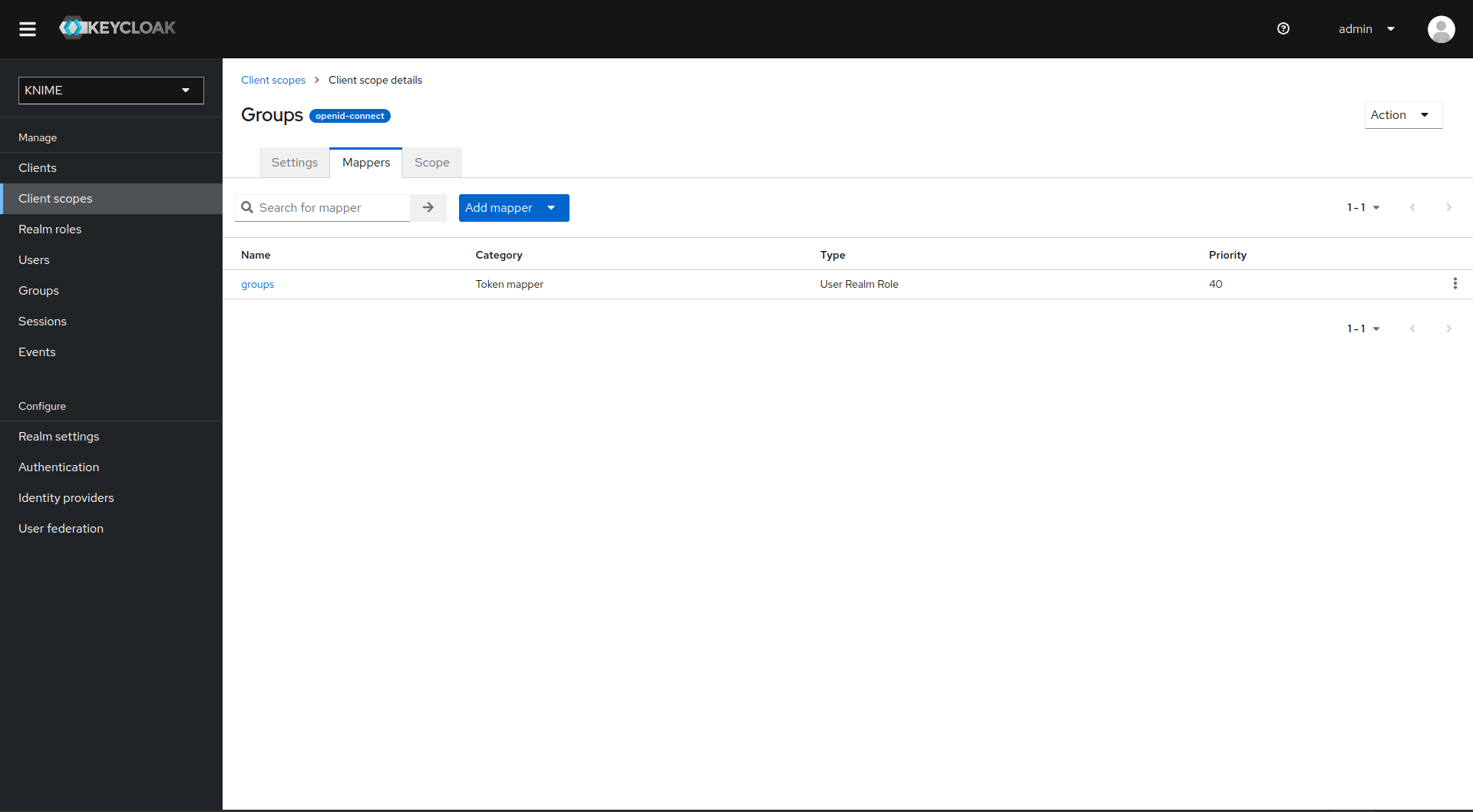

Switch to the tab Mappers

-

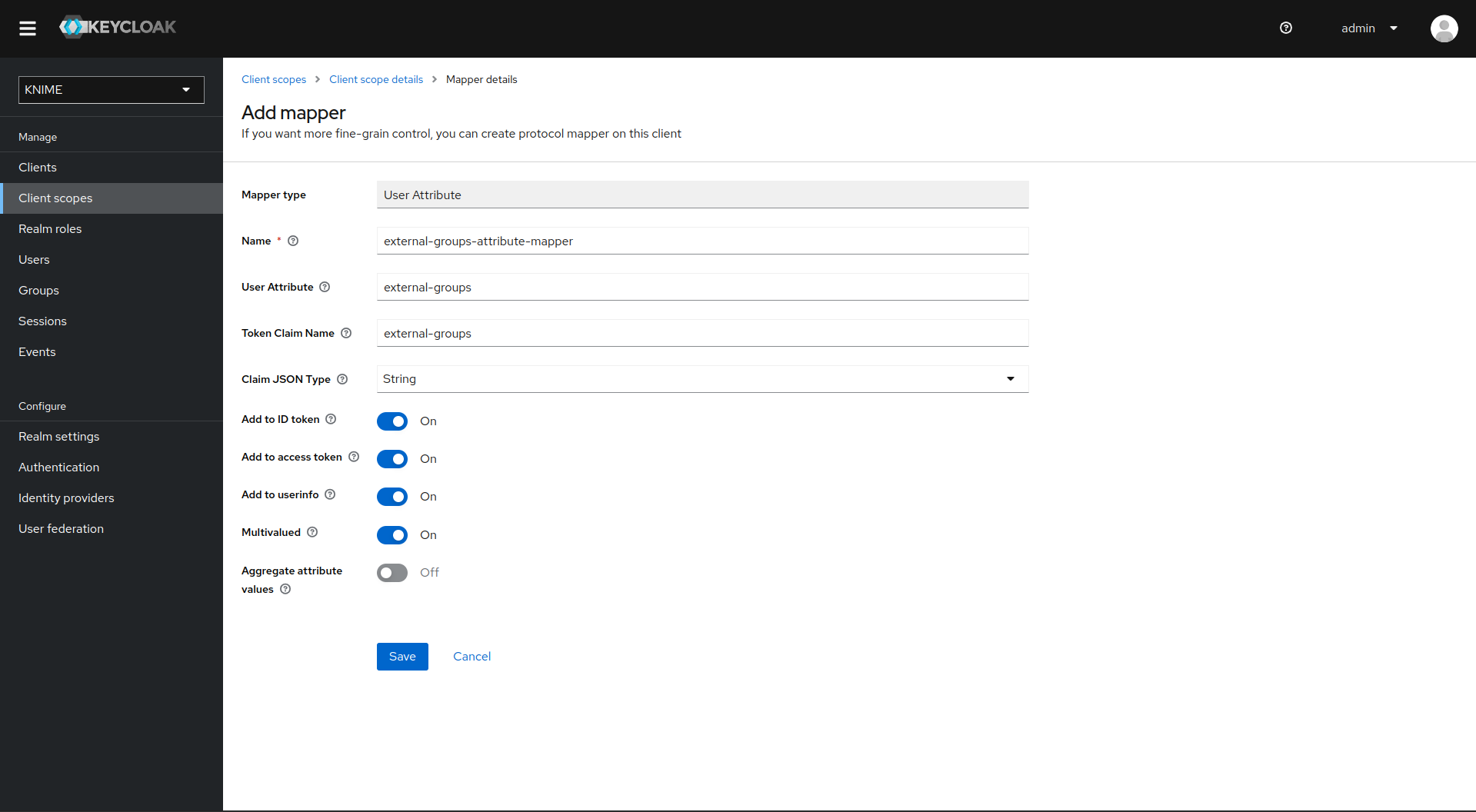

Click on Add mapper > By configuration and select User Attribute from the list

-

Provide a name, e.g. external-groups-attribute-mapper

-

Set both fields User Attribute and Token Claim Name to external-groups

-

Ensure that Add to ID token, Add to access token, Add to userinfo, and Multivalued are turned on and that Aggregate attribute values is turned off

-

Click on Save

With both mappers in place, the external groups are part of the access tokens issued by Keycloak, which exposes them inside KNIME Business Hub. To use external groups for permissions and access management, they need to be configured separately through the admin REST API as described in Enable external groups.

LDAP federation

If you have user federation configured for an LDAP instance that also supplies external group names you need to configure mappers that map these groups into the access tokens used inside the Hub instance.

To ensure that Keycloak groups and groups from LDAP are not mixed, we recommend treating external groups as realm roles.

To do this, we recommend first creating a dummy client for which roles can be created based on the LDAP groups. This will guarantee that any changes will be compatible with future changes to the KNIME Hub client in Keycloak.

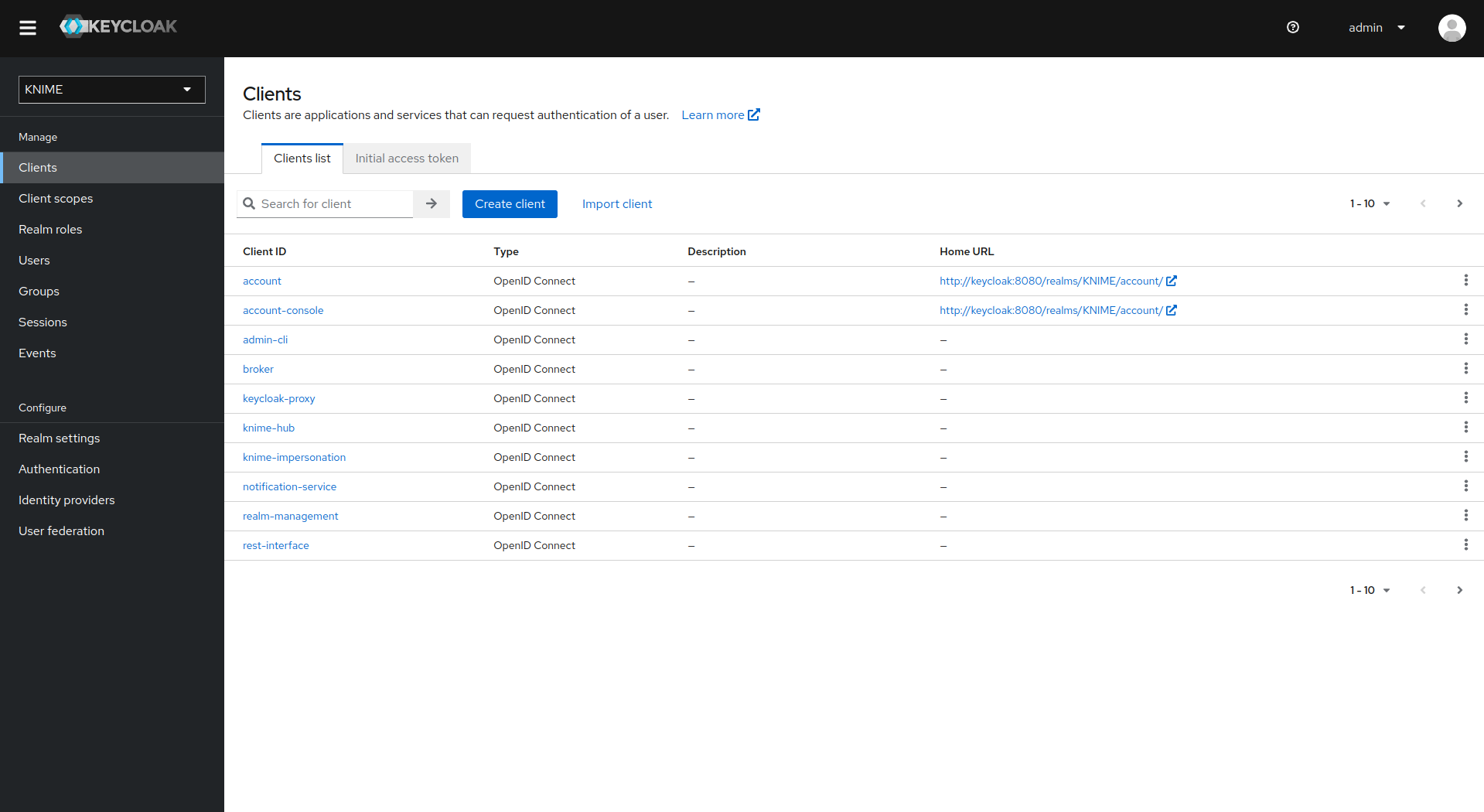

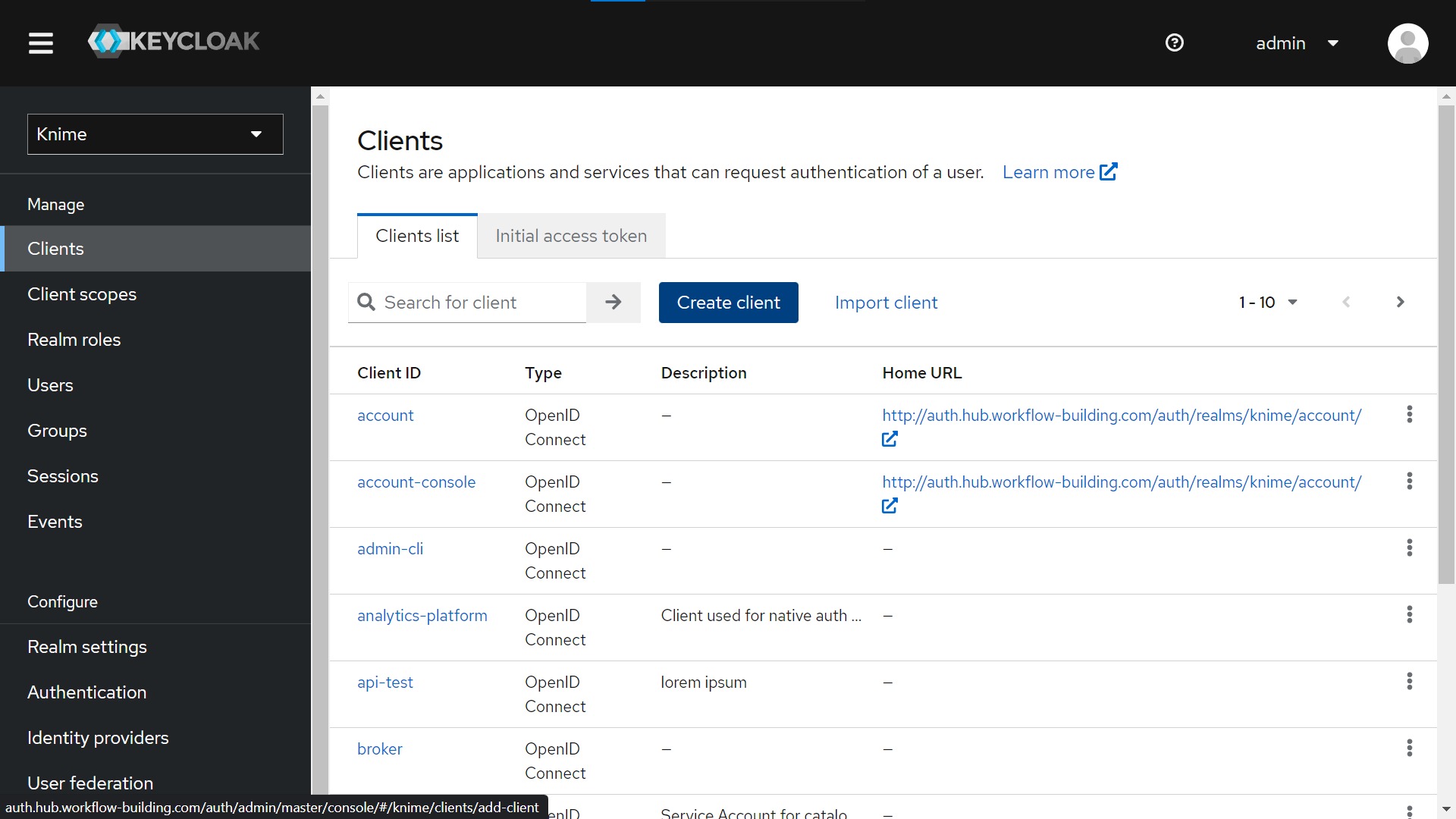

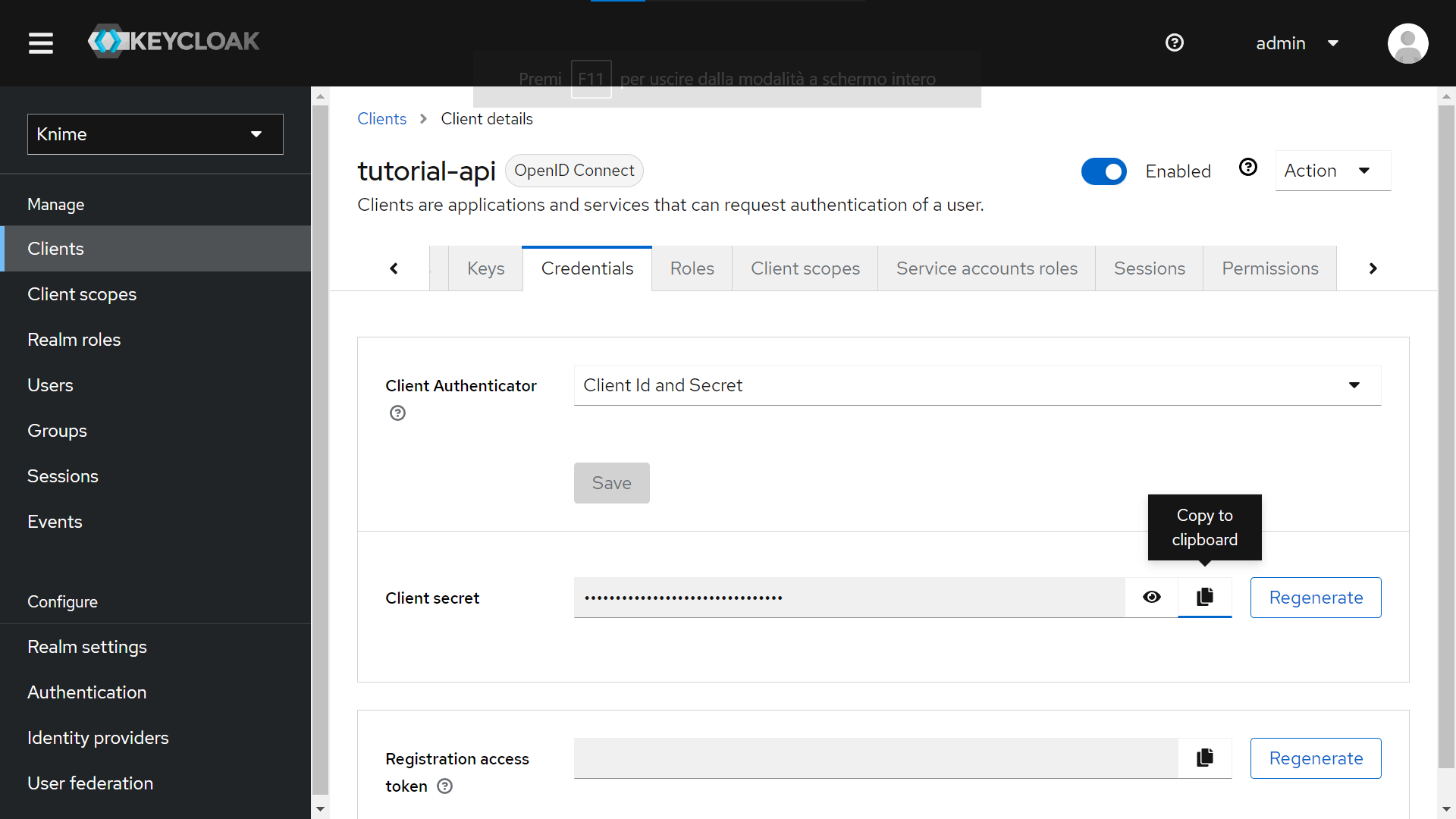

To create a new client follow these steps:

-

In Keycloak select realm Knime in the top left dropdown menu

-

On the left tab select Clients and click Create client

-

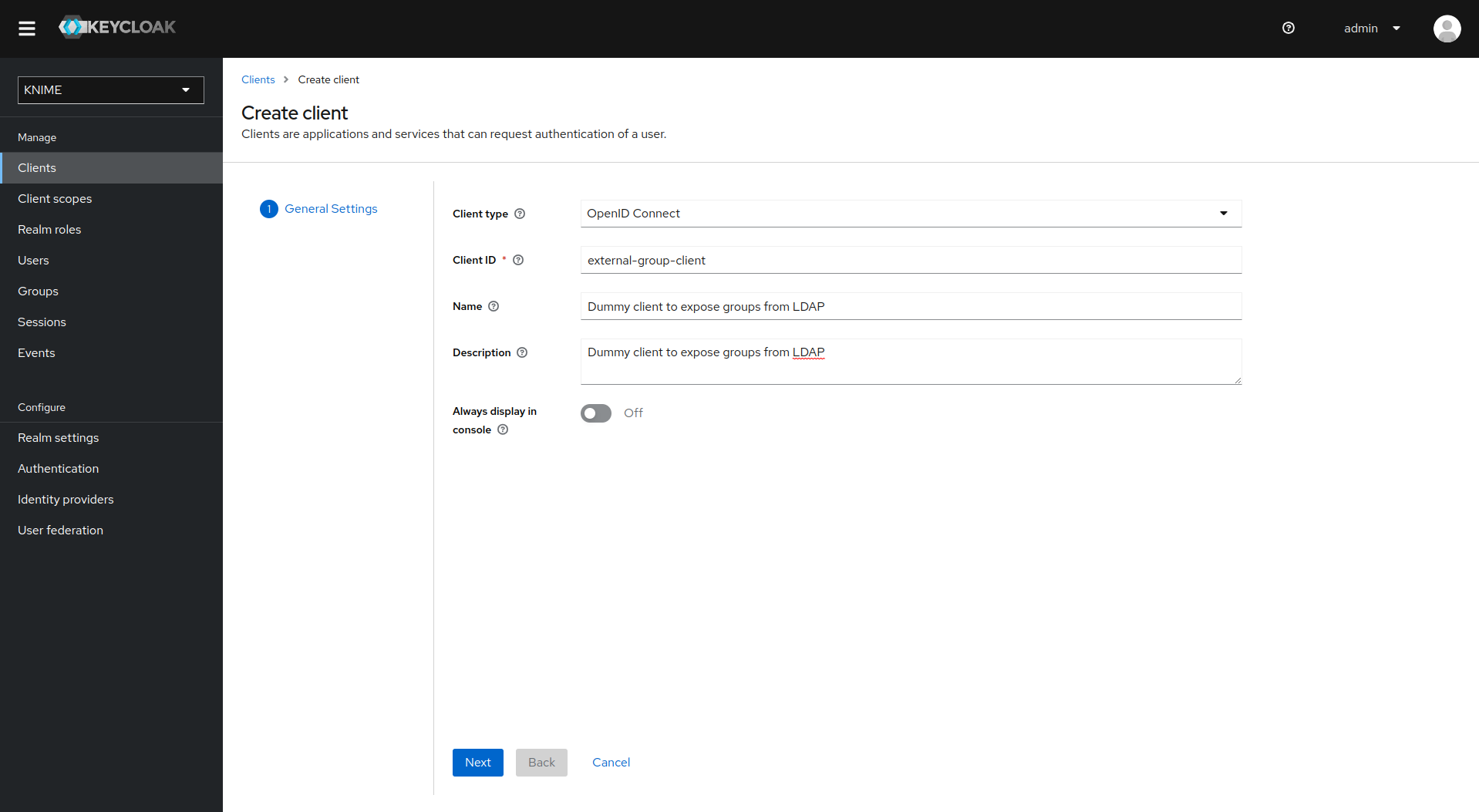

Set Client type to OpenID Connect

-

Enter a Client ID (in our example external-group-client), and a useful Name and Description

-

Click on Next

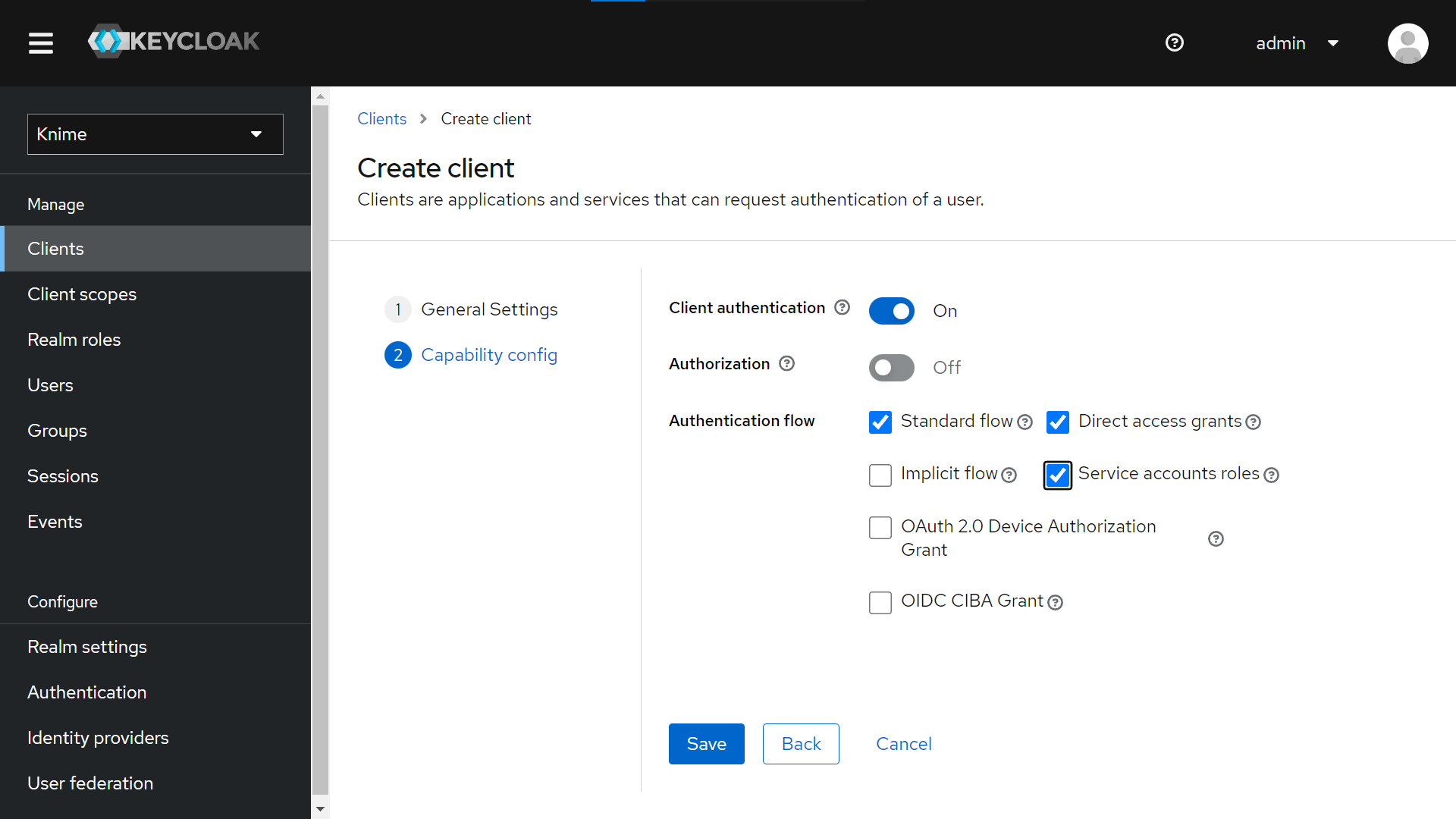

-

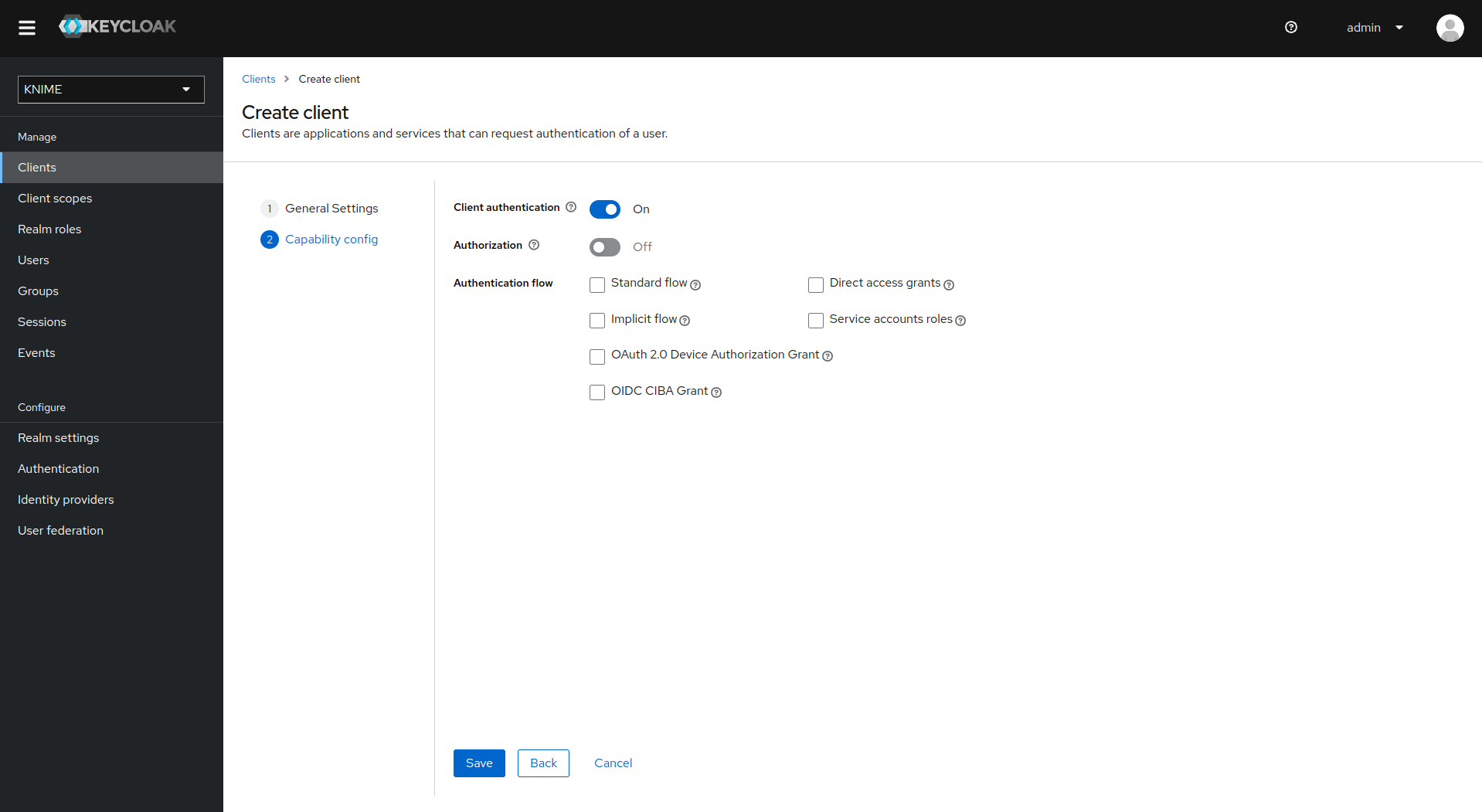

De-select all checkboxes of Authentication flow in the Capability config section, since this client will not require any capabilities

-

Enable Client authentication

-

Click on Save

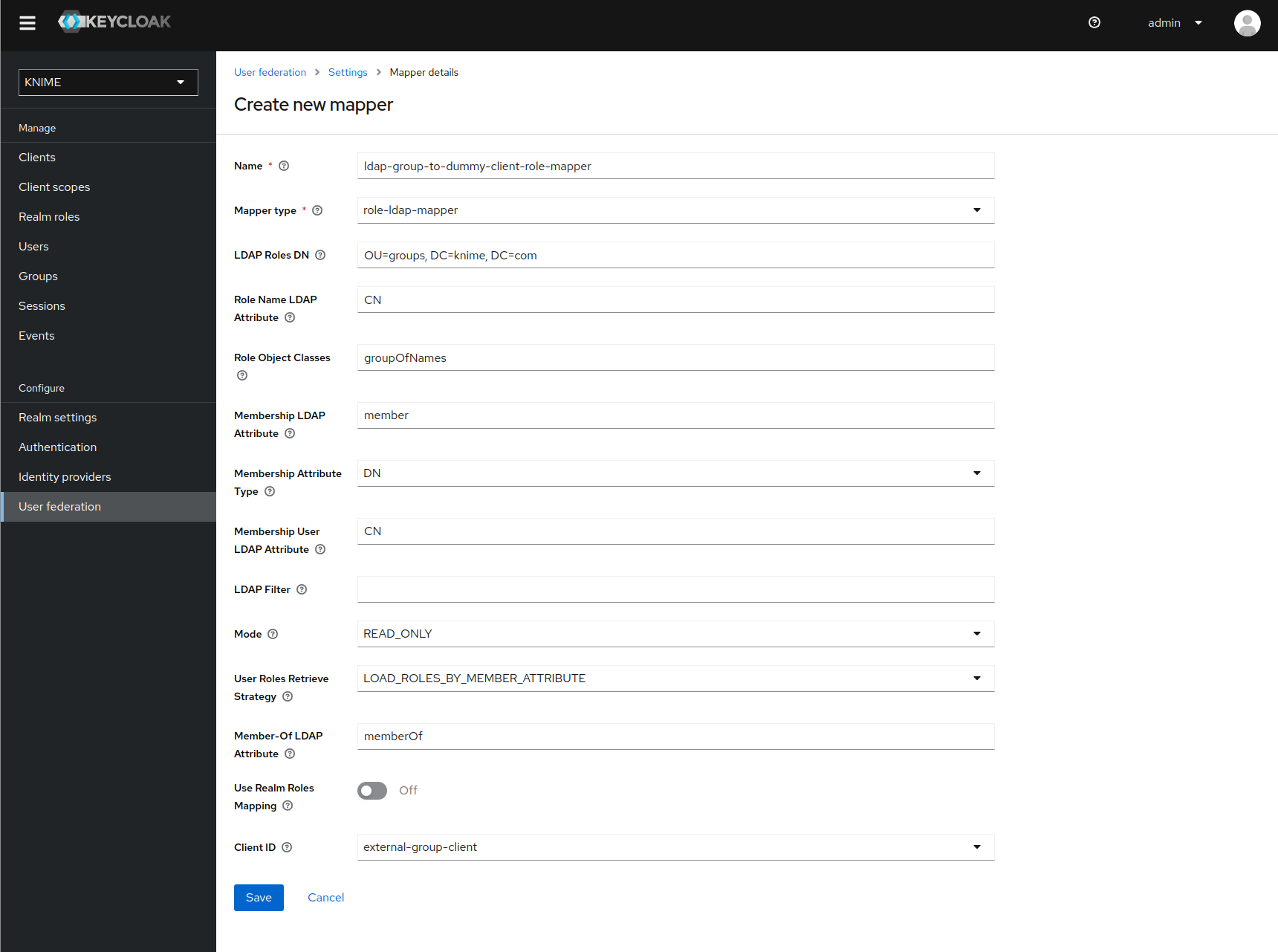

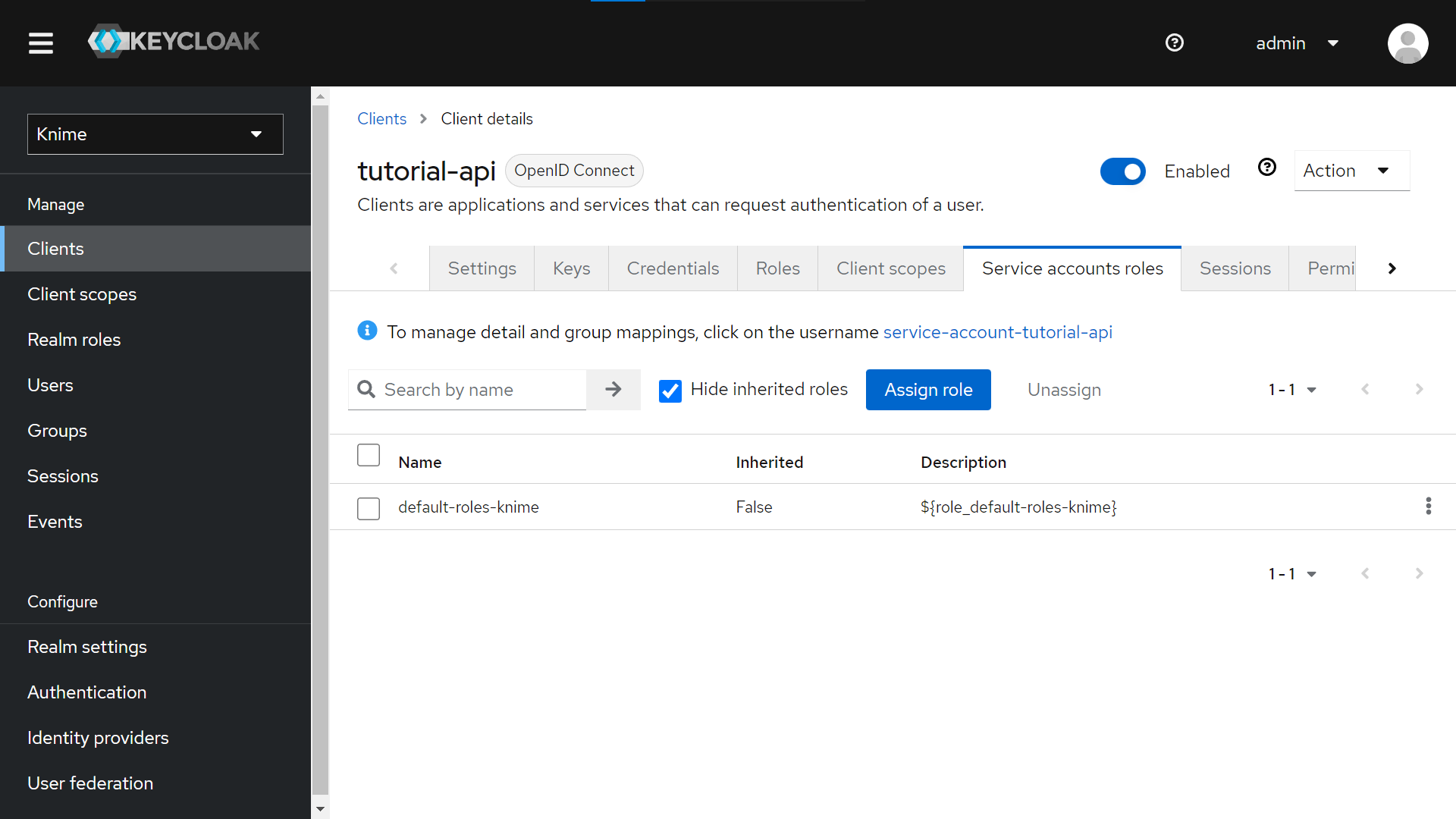

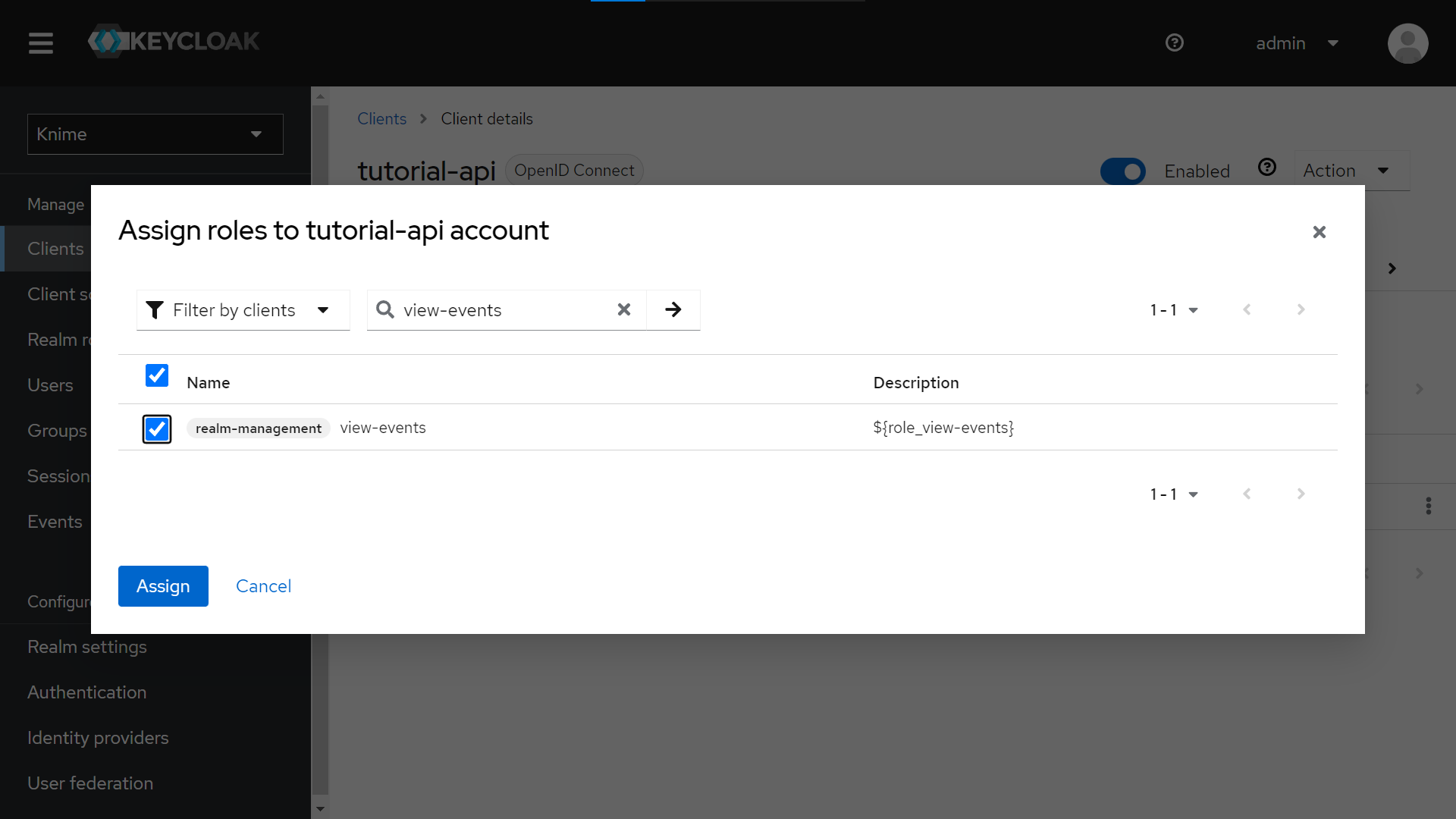

Now that the dummy client is set up, you can proceed to create a mapper that maps the user groups from LDAP to roles inside the dummy client:

- On the left tab select User federation and click on your LDAP configuration
- Switch to the tab Mappers
- Click on Add mapper
- Provide a name, e.g. ldap-group-to-dummy-client-role-mapper
- Set Mapper type to role-ldap-mapper
- Set up the mapper according to your LDAP
- Disable User Realm Roles Mapping
- Set Client ID to the previously created dummy client (in our example external-group-client)
- Click on Save

Now if a user logs in with the LDAP credentials the user’s groups will be mapped to ad-hoc created client roles inside the 'external-group-client'.

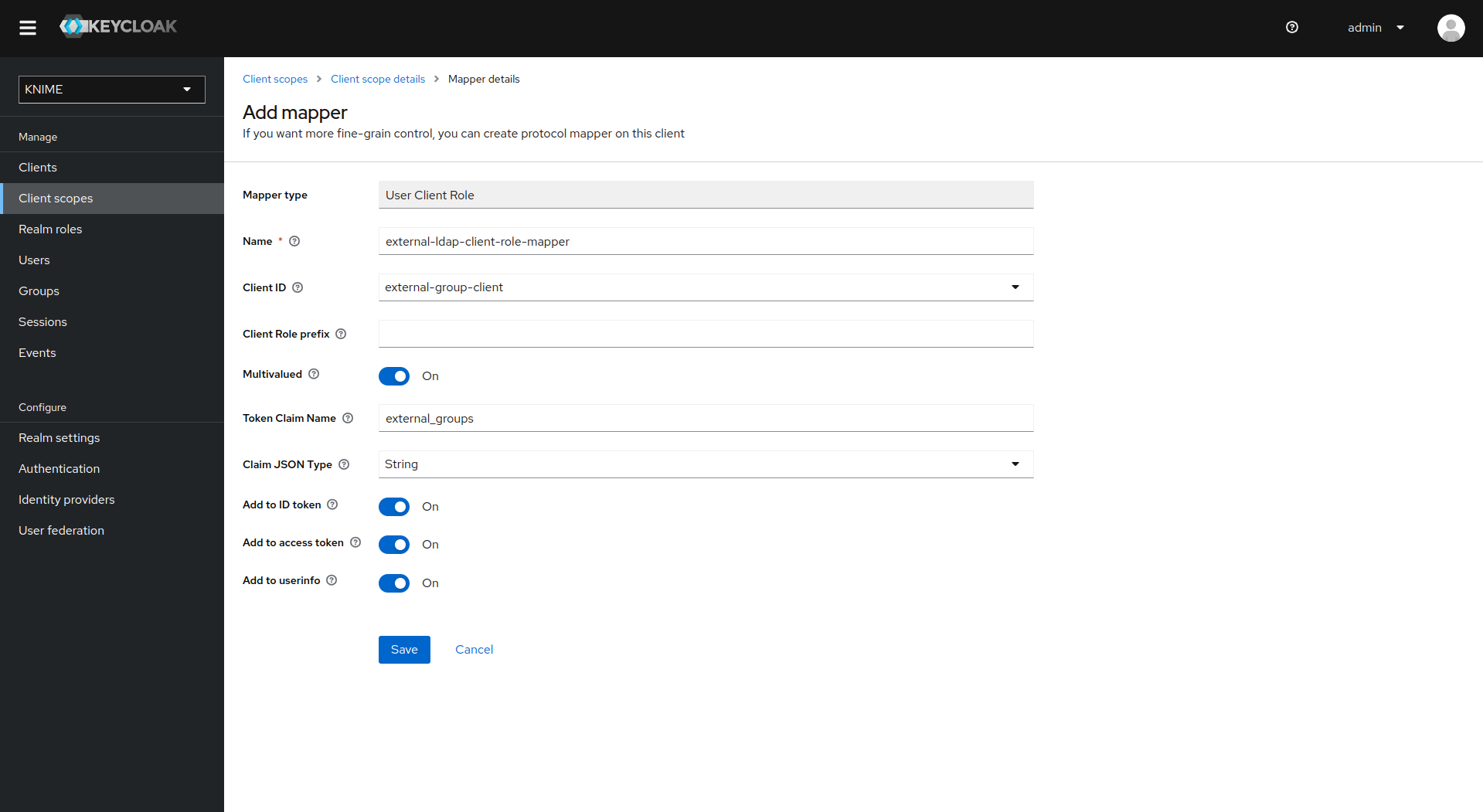

Next, you need to create a mapper that maps a user’s client roles from the dummy client to the access tokens:

- On the left tab select Client scopes
- Select groups
- Switch to the tab Mappers
- Click on Add mapper > By configuration and select User Client Role from the list
- Provide a name, e.g. external-ldap-client-role-mapper
- Set Client ID to the previously created dummy client (in our example external-group-client)
- Set Token Claim Name to external-groups
- Set Claim JSON Type to String
- Ensure that Add to ID token, Add to access token, Add to userinfo, and Multivalued are turned on
- Click on Save

Enable external groups

Once you have configured the external groups in Keycloak you need to create the groups that you want to be available inside KNIME Business Hub.

To do so you have to make a PUT request to the corresponding endpoint:

PUT https://api.<base-url>/accounts/hub:global/groups/<external-group-name>

where <external-group-name> is the name of the group, which must match the group name in the external identity provider.
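The request above can be prepared from a script as follows. This is a minimal Python sketch using only the standard library. It builds the request without sending it. The function name is hypothetical, and authenticating with Basic Auth and an application password of a Hub admin is an assumption carried over from the customization profile upload, since this section does not state the authentication scheme.

```python
import base64
import urllib.parse
import urllib.request


def build_enable_group_request(
    base_url: str, group_name: str, username: str, password: str
) -> urllib.request.Request:
    """Build the PUT request that creates an external group on the Hub."""
    # Percent-encode the group name so spaces or slashes do not break the path.
    encoded_name = urllib.parse.quote(group_name, safe="")
    url = f"https://api.{base_url}/accounts/hub:global/groups/{encoded_name}"
    request = urllib.request.Request(url, method="PUT")
    # Assumption: Basic Auth with an application password of a Hub admin.
    credentials = f"{username}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    request.add_header("Authorization", f"Basic {token}")
    return request


if __name__ == "__main__":
    # Placeholder values; replace them with those of your installation.
    req = build_enable_group_request(
        "hub.example.com", "finance", "app-password-id", "app-password"
    )
    print(req.get_method(), req.full_url)
    # To actually send it: urllib.request.urlopen(req)
```

Running the function once per group name from the identity provider keeps the Hub groups in line with the `external-groups` claim values.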

Docker executor images

In order to create execution contexts for their teams, team admins will need to reference the Docker image of the KNIME Executor that they want to use.

KNIME provides public Docker executor images that correspond to the full builds of KNIME Executor versions 4.7.0 and higher.

The currently available executor images have the following Docker image names:

- registry.hub.knime.com/knime/knime-full:r-4.7.4-179
- registry.hub.knime.com/knime/knime-full:r-4.7.5-199
- registry.hub.knime.com/knime/knime-full:r-4.7.6-209
- registry.hub.knime.com/knime/knime-full:r-4.7.7-221
- registry.hub.knime.com/knime/knime-full:r-4.7.8-231
- registry.hub.knime.com/knime/knime-full:r-5.1.0-251
- registry.hub.knime.com/knime/knime-full:r-5.1.1-379
- registry.hub.knime.com/knime/knime-full:r-5.1.2-433
- registry.hub.knime.com/knime/knime-full:r-5.1.3-594
- registry.hub.knime.com/knime/knime-full:r-5.2.0-271
- registry.hub.knime.com/knime/knime-full:r-5.2.1-369
- registry.hub.knime.com/knime/knime-full:r-5.2.2-445
- registry.hub.knime.com/knime/knime-full:r-5.2.3-477
- registry.hub.knime.com/knime/knime-full:r-5.2.4-564
- registry.hub.knime.com/knime/knime-full:r-5.2.5-592

| Please note that with the release of 5.2.2 KNIME executors will have HTML sanitization of old JavaScript View nodes and Widget nodes turned on by default. This should ensure that no malicious HTML can be output. For more information check the KNIME Analytics Platform 5.2.2 changelog. |

However you might want to add specific extensions to the KNIME Executor image that is made available to team admins to create execution contexts.

The following section explains how to do so.

Add extensions to an existing Docker image

In order to install additional extensions and features to the KNIME Executor image, you will need to first create a Dockerfile.

The file is named Dockerfile with no file extension.

You can use the example Dockerfile below which demonstrates how to extend the base image with a custom set of update sites and features.

| If you need to install Docker please make sure not to install it on the same virtual machine (VM) where the KNIME Business Hub instance is installed, as it might interfere with containerd, which is the container runtime used by Kubernetes. |

    # Define the base image
    FROM registry.hub.knime.com/knime/knime-full:r-4.7.4-179

    # Define the list of update sites and features
    # Optional, the default is the KNIME Analytics Platform update site (first entry in the list below)
    ENV KNIME_UPDATE_SITES=https://update.knime.com/analytics-platform/4.7,https://update.knime.com/community-contributions/trusted/4.7
    # Install a feature from the Community Trusted update site
    ENV KNIME_FEATURES="org.knime.features.geospatial.feature.group"

    # Execute extension installation script
    RUN ./install-extensions.sh

The KNIME_UPDATE_SITES environment variable determines the update sites that will be used for installing KNIME Features. It accepts a comma-delimited list of URLs.

The KNIME_FEATURES environment variable determines the extensions which will be installed in the KNIME Executor. It accepts a comma-delimited list of feature group identifiers.

A corresponding update site must be defined in the KNIME_UPDATE_SITES list for feature groups to be successfully installed.

You can get the necessary identifiers by looking at Help → About KNIME → Installation Details → Installed Software in a KNIME instance that has the desired features installed.

Take the identifiers from the "Id" column and make sure you do not omit the .feature.group at the end.

The base image contains a shell script install-extensions.sh which lets you easily install additional extensions in another Dockerfile.

Once the Dockerfile has been customized appropriately, you can build a Docker image from it by using the following command:

    # Replace <image_name> and <tag_name> with actual values
    docker build -t <image_name>:<tag_name> .
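If you maintain several executor images with different extension sets, the Dockerfile described above can be generated from a list of update sites and features. The following is a minimal Python sketch, assuming the same `KNIME_UPDATE_SITES`, `KNIME_FEATURES`, and `install-extensions.sh` conventions as in the example Dockerfile. The function name `render_executor_dockerfile` is hypothetical.

```python
def render_executor_dockerfile(
    base_image: str, update_sites: list[str], features: list[str]
) -> str:
    """Render a Dockerfile that extends a KNIME Executor image with features."""
    if not update_sites:
        raise ValueError("at least one update site is required")
    for feature in features:
        # Feature identifiers must keep their ".feature.group" suffix.
        if not feature.endswith(".feature.group"):
            raise ValueError(f"not a feature group identifier: {feature}")
    # Both variables accept comma-delimited lists.
    sites = ",".join(update_sites)
    feature_list = ",".join(features)
    lines = [
        f"FROM {base_image}",
        f"ENV KNIME_UPDATE_SITES={sites}",
        f'ENV KNIME_FEATURES="{feature_list}"',
        "RUN ./install-extensions.sh",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(
        render_executor_dockerfile(
            "registry.hub.knime.com/knime/knime-full:r-4.7.4-179",
            [
                "https://update.knime.com/analytics-platform/4.7",
                "https://update.knime.com/community-contributions/trusted/4.7",
            ],
            ["org.knime.features.geospatial.feature.group"],
        )
    )
```

Write the returned text to a file named Dockerfile and build it with the `docker build` command shown above.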

Python and Conda in Docker images

When you create an execution context on KNIME Business Hub based on a full build, the bundled KNIME Python environment is available. If you need additional libraries, you need to make them available on the Hub instance.

You can do this in two ways:

- Use the Conda Environment Propagation node.
- Customize the Executor image used.

To get started with Conda environment propagation, check out the KNIME Python Integration Guide. However, any libraries installed using Conda environment propagation are removed when the executor restarts and are installed again the next time, so libraries that are used often should be installed as part of the executor Docker image.

In order to do so you need to:

- Install Python in the executor Docker image
- Declare to the execution context the path to the Python installation folder so that the executor can execute Python nodes

Install Python in the executor Docker image

The first step is to install Python and an environment manager, for instance Miniconda, in a Docker image.

To do so, the Docker project first needs to contain a Miniconda installer alongside the Dockerfile, for example:

    python-image/
      container/
        |-Miniconda3-py310_23.3.1-0-Linux-x86_64.sh
      dockerfiles/
        |-Dockerfile

You will also need to provide a .yml file that will contain all the modules, packages and Python version that you need to install in order to execute the Python scripting nodes.

The .yml file could look like the following:

    name: py3_knime          # Name of the created environment
    channels:                # Repositories to search for packages
    - defaults
    - anaconda
    - conda-forge
    dependencies:            # List of packages that should be installed
    # - <package>=<version>  # This is an example of package entry structure
    - python=3.6             # Python
    - scipy=1.1              # Notebook support
    - numpy=1.16.1           # N-dimensional arrays
    - matplotlib=3.0         # Plotting
    - pyarrow=0.11           # Arrow serialization
    - pandas=0.23            # Table data structures

Then you need to pull any available executor Docker image, install miniconda in batch mode on the image and define the environment variable for conda, as in the following example.

You will also need to create the environments that you specified in the .yml files.

    # getting recent knime-full image as a basis
    FROM registry.hub.knime.com/knime/knime-full:r-4.7.3-160

    # getting Miniconda and install in batch mode
    COPY container/Miniconda3-py310_23.3.1-0-Linux-x86_64.sh /home/knime/miniconda-latest.sh
    RUN bash miniconda-latest.sh -b

    # adding path to Miniconda bin folder to system PATH variable
    ENV PATH="/home/knime/miniconda3/bin:$PATH"

    # copy default conda environments into container
    COPY --chown=knime envs/ ./temp_envs
    RUN conda env create -f ./temp_envs/py3_knime.yml && \
        rm -rf ./temp_envs

When installing conda and creating the environments you will obtain the following paths that will need to be added in the .epf file of the customization profile during the set up of the execution context.

For example based on the above Dockerfile:

    <path to conda installation dir>=/home/knime/miniconda3/
    <path to default conda environment dir>=<path to conda installation dir>/envs/<name of the env>

Now you can build the new Docker image, for example:

docker build . -f ./dockerfiles/Dockerfile -t knime-full:4.7.3-with-python

Finally, retag the image to make it usable for your embedded registry on Business Hub:

docker tag knime-full:4.7.3-with-python registry.<hub-url>/knime-full:4.7.3-with-python

Once you have created the Docker image with Python installed, create an execution context that uses the newly created Docker image.

Set up the execution context

Now you need to set up and customize the execution context.

In order to declare to the execution context the path to the Python installation you will need to build a dedicated customization profile and apply it to the execution context.

- Build the `.epf` file by following the steps in KNIME Python Integration Guide and exporting the `.epf` file. To export the `.epf` file from KNIME Analytics Platform go to File > Export Preferences…
- Open the file and use only the parts related to Python/conda.

The .epf file could look like the following:

    /instance/org.knime.conda/condaDirectoryPath=<path to conda installation dir>
    /instance/org.knime.python3.scripting.nodes/pythonEnvironmentType=conda
    /instance/org.knime.python3.scripting.nodes/python2CondaEnvironmentDirectoryPath=<path to default conda environment dir>
    /instance/org.knime.python3.scripting.nodes/python3CondaEnvironmentDirectoryPath=<path to default conda environment dir>

| Find more details on how to set up the .epf file in the Executor configuration section of the KNIME Python Integration Guide. |
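The Python-related entries shown above can be derived from the Conda installation directory and the environment name. The following is a minimal Python sketch that renders them. The preference keys are the ones listed in this section, while the function name `python_epf_lines` is hypothetical and the example paths assume the Dockerfile shown earlier.

```python
import posixpath


def python_epf_lines(conda_dir: str, env_name: str) -> str:
    """Render the Python/conda preference entries for a customization profile."""
    # Executors run on Linux, so build the environment path with POSIX rules.
    env_dir = posixpath.join(conda_dir, "envs", env_name)
    prefix = "/instance/org.knime.python3.scripting.nodes"
    lines = [
        f"/instance/org.knime.conda/condaDirectoryPath={conda_dir}",
        f"{prefix}/pythonEnvironmentType=conda",
        f"{prefix}/python2CondaEnvironmentDirectoryPath={env_dir}",
        f"{prefix}/python3CondaEnvironmentDirectoryPath={env_dir}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Paths as produced by the example Dockerfile with Miniconda installed.
    print(python_epf_lines("/home/knime/miniconda3/", "py3_knime"))
```

Keeping the paths in one place like this helps avoid a mismatch between the Docker image and the customization profile applied to the execution context.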

Now follow these steps to customize the execution context:

- Build the `.zip` file containing the customization profile using the `.epf` file you just created.
- Upload the customization profile `.zip` file to KNIME Business Hub.
- Apply the customization profile to the execution context.

Customization profiles

Customization profiles are used to deliver KNIME Analytics Platform configurations from KNIME Hub to KNIME Analytics Platform clients and KNIME Hub executors.

This allows you to define centrally managed:

- Update sites
- Preference profiles (such as Database drivers, Python/R settings)

A profile consists of a set of files that can:

- Be applied to the client during startup once the KNIME Analytics Platform client is configured. The files are copied into the user’s workspace.
- Be applied to the KNIME Hub executors of the execution contexts.

Customization profiles can be:

- Global customization profiles, which need to be uploaded and managed by the Global Admin. These can be applied across teams via a shared execution context or to specific teams.
- Team’s customization profiles, which are scoped to the team and can be uploaded either by a Global Admin or a team admin.

Once uploaded, the customization profile can then be downloaded or used in KNIME Analytics Platform clients and executors.

| The access to the customization profile is not restricted meaning that anyone with the link can download it and use it. |

Currently, customization profiles can be managed on KNIME Hub via REST or using the dedicated Customization Profile data application available here.

Create a customization profile

A customization profile is a file with the .epf extension, that contains the needed profile information. So the first step is to create such a file.

- Create an `.epf` file that contains the needed profile information:
  - Build the file from a local KNIME Analytics Platform installation. Set up the needed configuration in a local KNIME Analytics Platform installation, then go to File → Export Preferences. Open the created `.epf` file, and look for the entries related to your needed settings. Remove all other settings (as some contain e.g. local paths on your machine, which will inevitably cause issues when applying to another installation).
- Place the `.epf` file in a folder, together with any additional files that need to be distributed along the profile (e.g. database drivers)
- Create a `.zip` from that folder

| When creating a zip file on macOS using the built in functionality, two files are automatically added that cause the next steps (i.e. applying the profile in Analytics Platform) to fail. There is a way to prevent creation of these files if creating the .zip via command line, see here. If in doubt, use a Windows or Linux machine to create the .zip file. |

| The customization profiles on the KNIME Hub instance are going to be accessible without user authentication therefore they shouldn’t contain any confidential data such as passwords. |

For further details and an example on how to distribute JDBC driver files go to the Hub-managed customization profiles section of the KNIME Database Extension Guide.
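The packaging step can also be scripted so that the archive is created the same way on every operating system. The following is a minimal Python sketch using the standard `zipfile` module. It rests on two assumptions that are not confirmed by this guide: that the macOS artifacts in question are `.DS_Store`, `__MACOSX`, and `._*` entries, and that the profile files belong at the root of the archive. The function name `build_profile_zip` is hypothetical.

```python
import zipfile
from pathlib import Path

# Assumption: these are the entries that macOS adds to archives and folders.
EXCLUDED_NAMES = {".DS_Store", "__MACOSX"}


def build_profile_zip(profile_dir, zip_path) -> list[str]:
    """Zip the files of a profile folder, skipping macOS metadata entries.

    The zip file should be written outside of profile_dir.
    Returns the archive member names that were written.
    """
    profile_dir = Path(profile_dir)
    written = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(profile_dir.rglob("*")):
            if not path.is_file():
                continue
            relative = path.relative_to(profile_dir)
            if any(
                part in EXCLUDED_NAMES or part.startswith("._")
                for part in relative.parts
            ):
                continue
            # Assumption: members are stored relative to the profile folder.
            archive.write(path, relative.as_posix())
            written.append(relative.as_posix())
    return written
```

For example, `build_profile_zip("my-profile", "my-profile.zip")` packages the `.epf` file and any driver files in `my-profile` while leaving out the metadata entries.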

Variable replacement

It is possible to use variables inside the preferences files (only those files ending in `.epf`) which are replaced on the client right before they are applied. This makes the Hub-managed customizations even more powerful. These variables have the following format: `${prefix:variable-name}`. The following prefixes are available:

- env: the variable is replaced with the value of an environment variable. For example, `${env:TEMP}` will be replaced with `/tmp` under most Linux systems.
- sysprop: the variable is replaced with a Java system property. For example, `${sysprop:user.name}` will be replaced with the current user’s name. For a list of standard Java system properties see the JavaDoc. Additional system properties can be defined via `-vmargs` in the `knime.ini`.
- profile: the variable will be replaced with a property of the profile that contains the current preference file. Currently `location` and `name` are supported as variable names. For example, `${profile:location}` will be replaced by the file system location of the profile on the client. This can be used to reference other files that are part of the profile, such as database drivers:

  `org.knime.workbench.core/database_drivers=${profile:location}/db-driver.jar`

In case you want to have a literal in a preference value that looks like a variable, you have to use two dollar signs to prevent replacement. For example `$${env:HOME}` will be replaced with the plain text `${env:HOME}`. If you want to have two dollars in plain text, you have to write three dollars (`$$${env:HOME}`) in the preference file.

| Once you use variables in your preference files they are not standard Eclipse preference files anymore and cannot be imported as they are. |
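The replacement and escaping rules described above can be illustrated with a short Python sketch. It is a model of the documented behavior for experimenting with preference values, not the implementation used by KNIME Analytics Platform. The function name `replace_variables` is hypothetical, and leaving undefined variables untouched is an assumption.

```python
import os
import re

# One or more dollar signs followed by {prefix:variable-name}.
_VARIABLE = re.compile(r"(\$+)\{(env|sysprop|profile):([^}]+)\}")


def replace_variables(text, env=None, sysprops=None, profile=None):
    """Apply ${prefix:name} replacement with $$ escaping to a preference value."""
    sources = {
        "env": os.environ if env is None else env,
        "sysprop": sysprops or {},
        "profile": profile or {},
    }

    def substitute(match):
        dollars, prefix, name = match.groups()
        if len(dollars) > 1:
            # Escaped: drop one dollar sign and keep the rest as plain text.
            return dollars[1:] + "{" + prefix + ":" + name + "}"
        # Assumption: variables without a value are left as they are.
        return sources[prefix].get(name, match.group(0))

    return _VARIABLE.sub(substitute, text)


if __name__ == "__main__":
    line = "org.knime.workbench.core/database_drivers=${profile:location}/db-driver.jar"
    print(replace_variables(line, profile={"location": "/opt/profiles/example"}))
    print(replace_variables("$${env:HOME}", env={"HOME": "/home/knime"}))
```

In the second call the doubled dollar sign suppresses the lookup, so the value of `HOME` is never inserted.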

Client customization

Besides the preferences that are exportable by KNIME Analytics Platform there are additional settings that can be added to the preference files to customize clients:


Upload a customization profile

After creating the .zip file, you need to upload it to KNIME Business Hub. You can do it via REST or via data application.

Upload via Data Application

-

If you have not done so already, on KNIME Business Hub, create an application password for the user uploading the profile.

-

For global customization profiles, use a global admin user.

-

For team’s customization profiles, the user can be either a global admin user or a team admin user.

-

-

Download the workflow:

Click here and download the workflow to your KNIME Analytics Platform. -

Upload the downloaded workflow to your KNIME Business Hub instance via KNIME Analytics Platform.

-

Deploy the workflow as a data application. You can learn more about how to do it here.

-

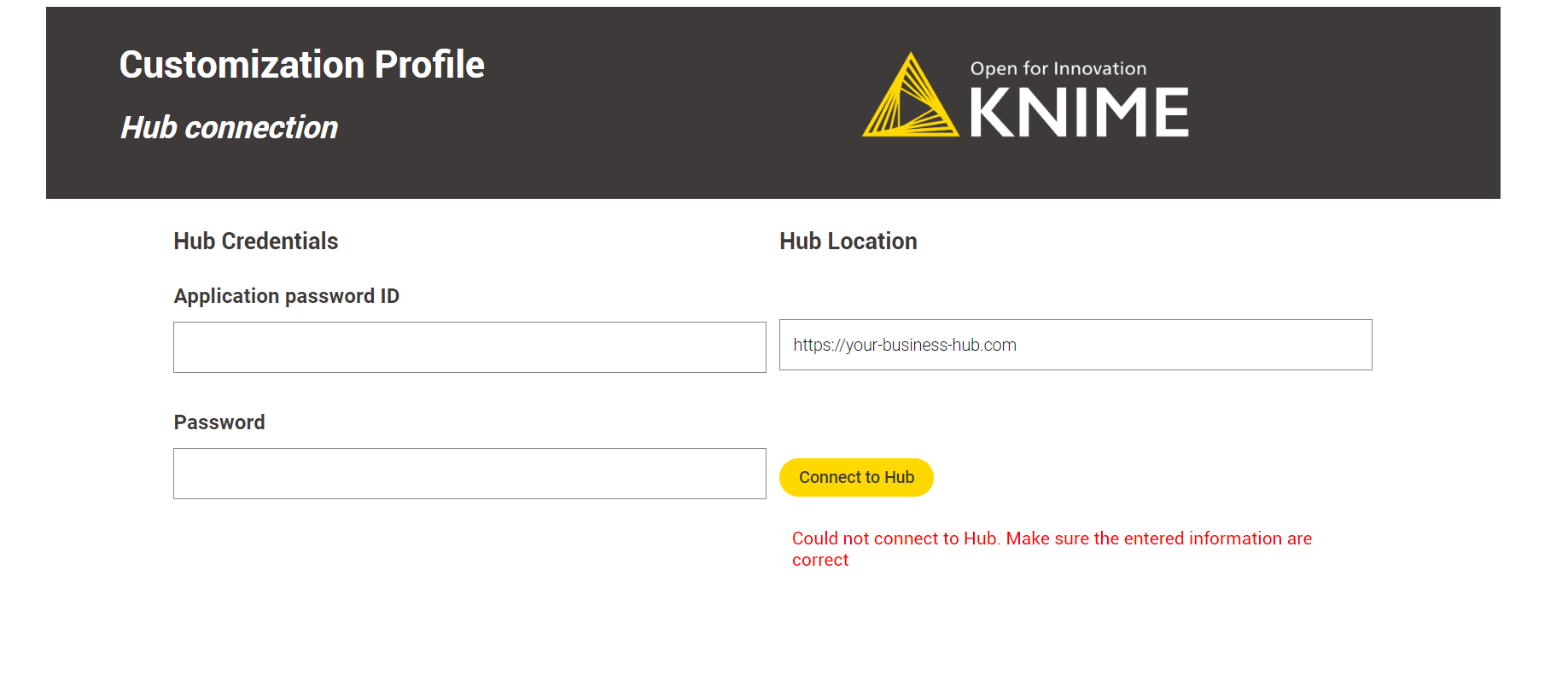

The first step to begin using the data application is establishing a connection with the Hub instance. You must enter your Hub URL and application password in the data app to connect to your KNIME Business Hub instance.

Figure 11. The Hub connection step.

Figure 11. The Hub connection step. -

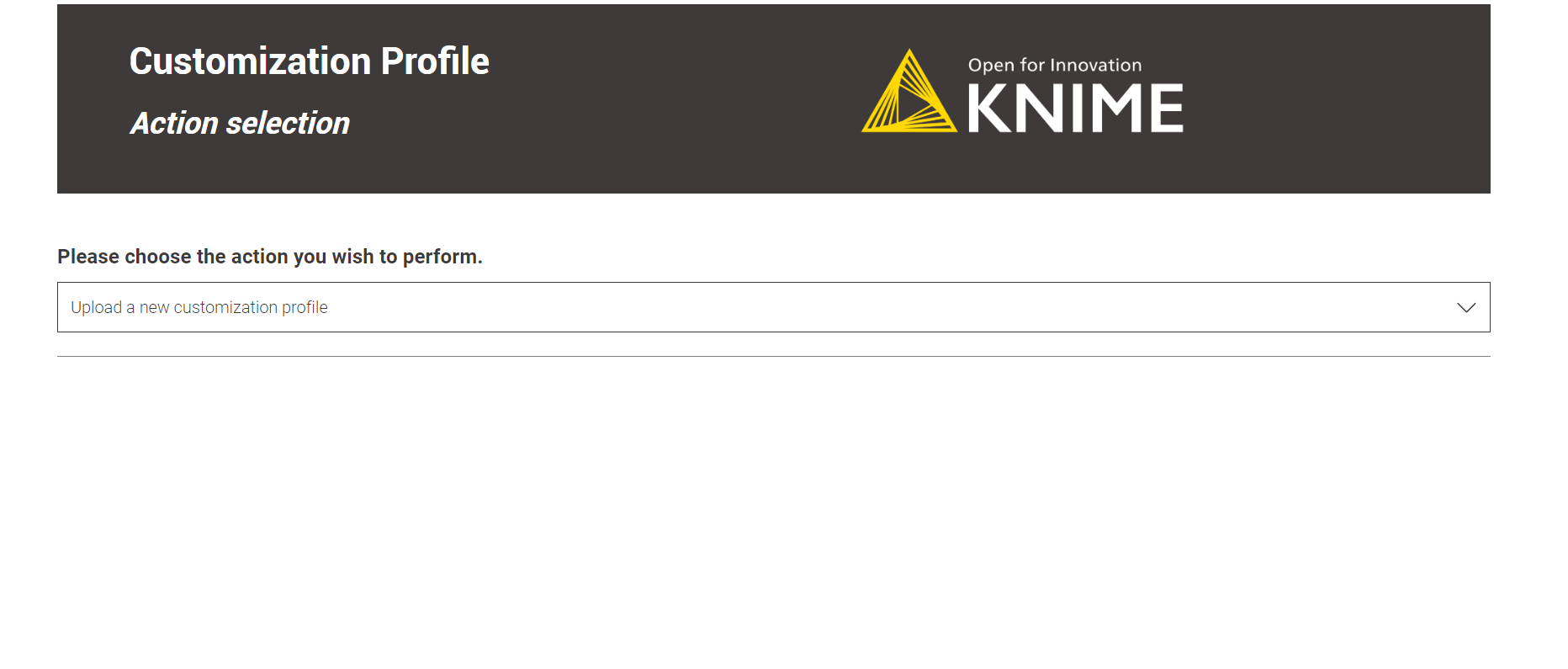

Select Upload a New Customization Profile in the Action Selection menu and click Next. You can upload multiple customization profiles to your KNIME Business Hub instance.

Figure 12. The upload option is selected in the action selection step.

Figure 12. The upload option is selected in the action selection step. -

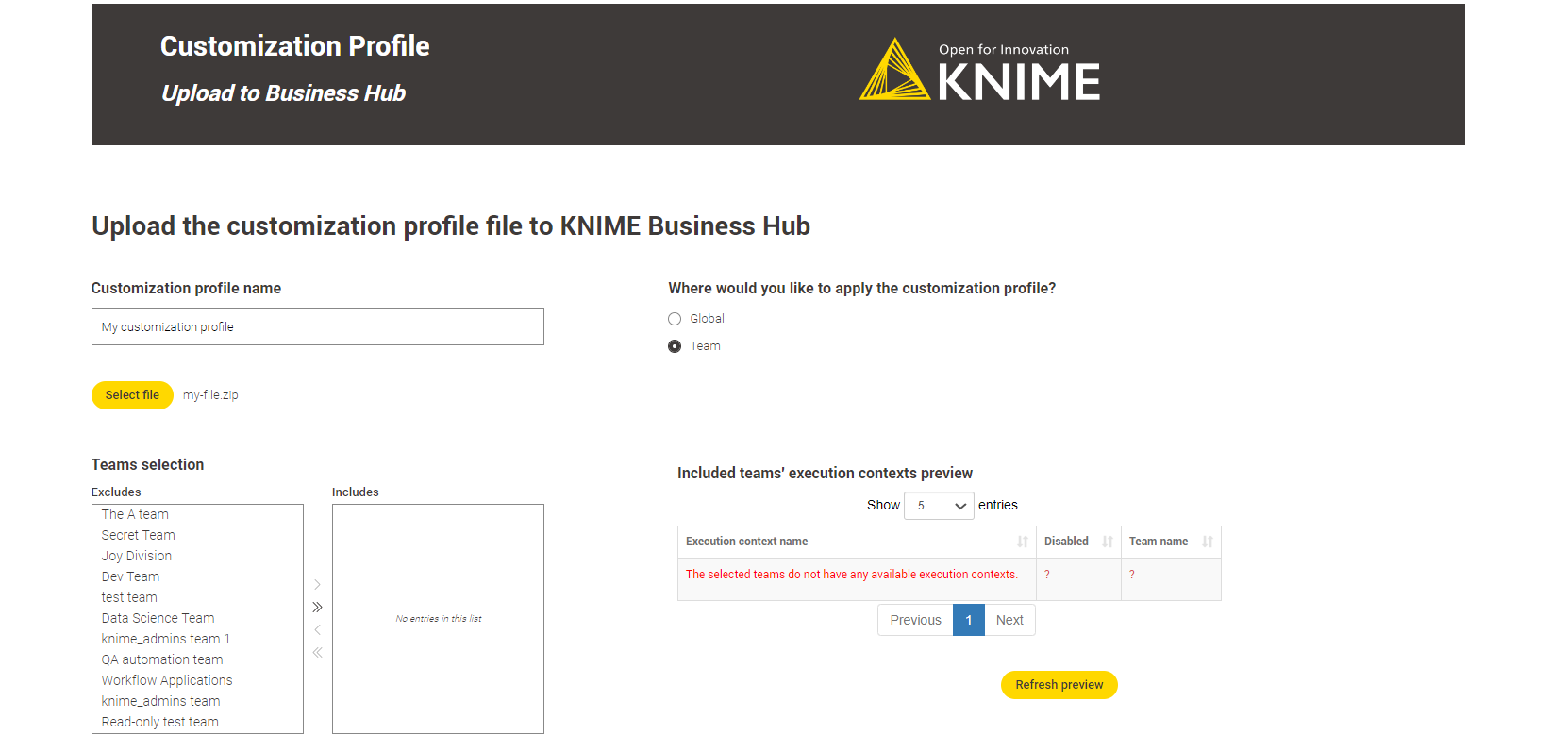

In the next step, select the customization profile file previously created, give it a name, and define its scope.

-

Global Admin: You can choose the customization profile scope if logged in to the Data App as a Global Admin.

-

Global scope: After being uploaded to KNIME Business Hub, the global customization profile can be applied to a shared or a team execution context.

-

Team scope: When a customization profile with team scope is uploaded, it can be applied to the team’s executors. It is possible to upload the same customization profile to multiple teams. The Global Admin can upload the customization profile to any team.

Figure 13. Global Admin executing the data app

Figure 13. Global Admin executing the data app

-

-

Team Admin: If you’re logged in as a team admin, you can only upload the customization profile as a team-scoped type. You can upload the same customization profile for multiple teams. The availability of teams is determined by the permissions of the user who is logged in.

-

-

In the Upload Results step, a table displays the results of the customization profile upload operation, along with a legend.

If everything goes well, the green light means your customization profile has been successfully uploaded to the KNIME Business Hub. You can now apply it to a KNIME Analytics Platform installation or to a KNIME Business Hub executor.

-

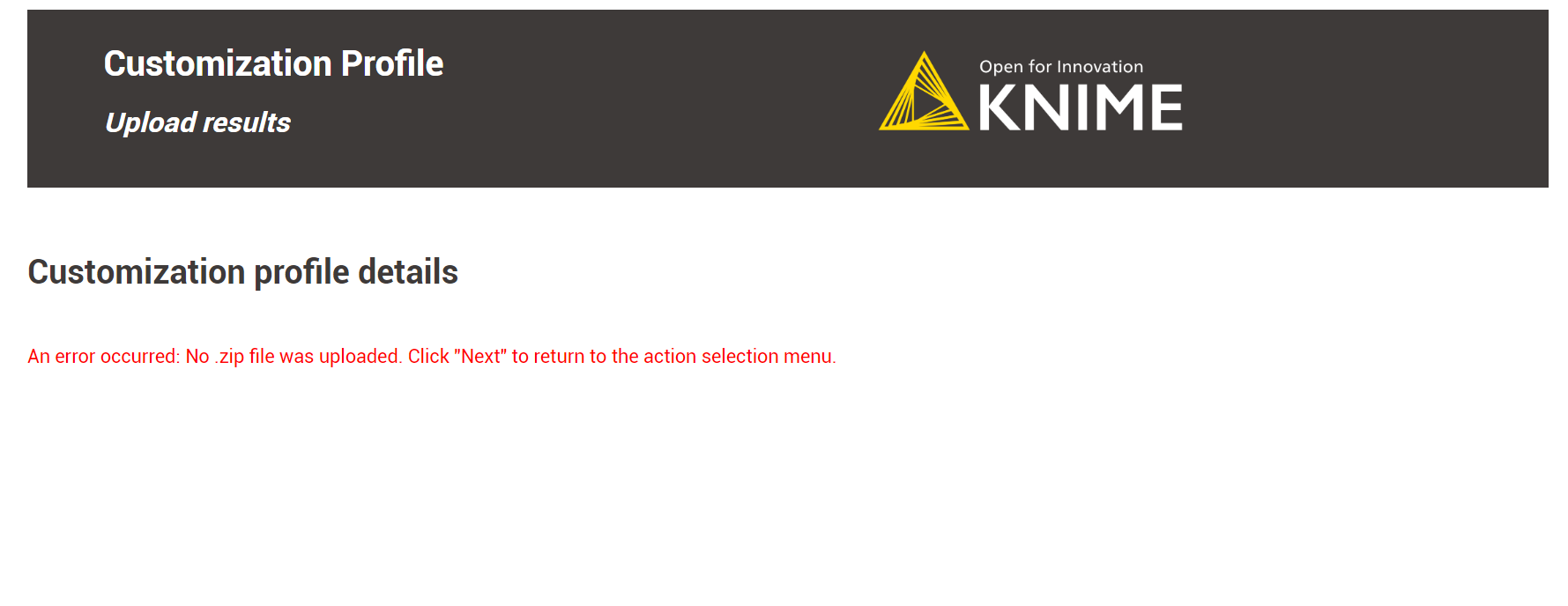

Error Handling: The data application is designed to handle two types of errors:

- Missing customization profile file.

  Figure 14. This error occurs when no customization profile file is provided.

- No team selection (for team-scoped customization profiles).

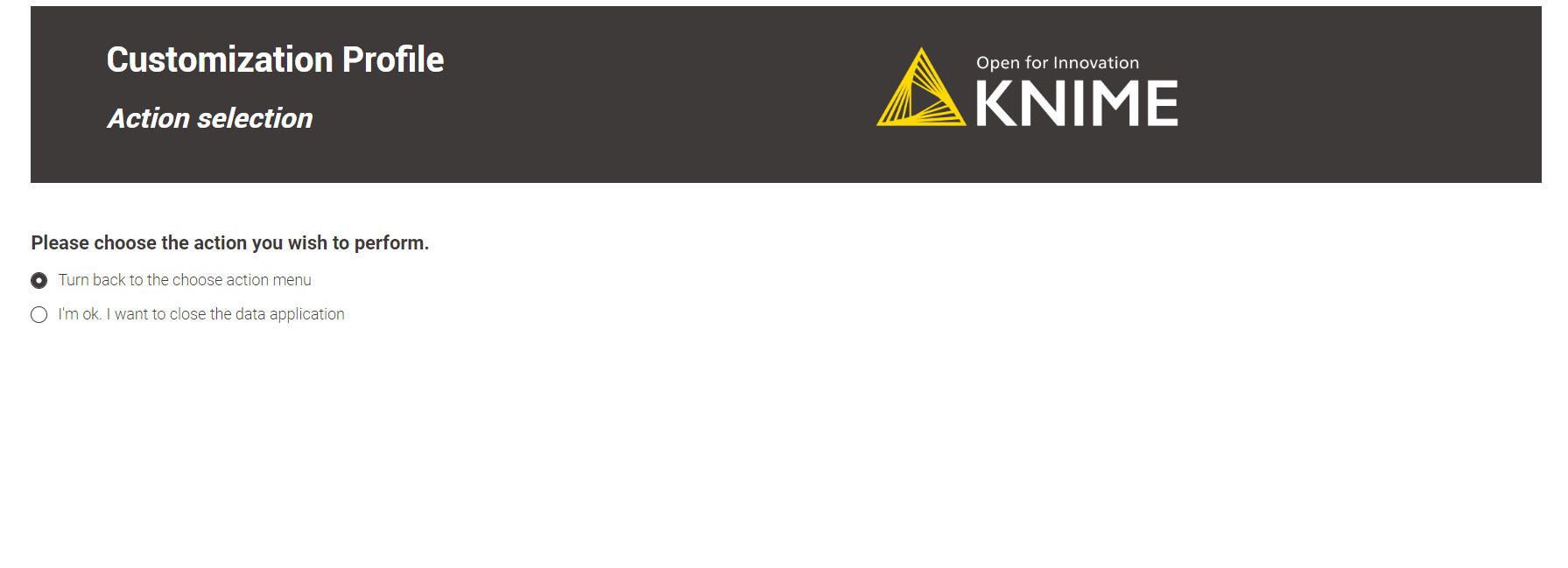

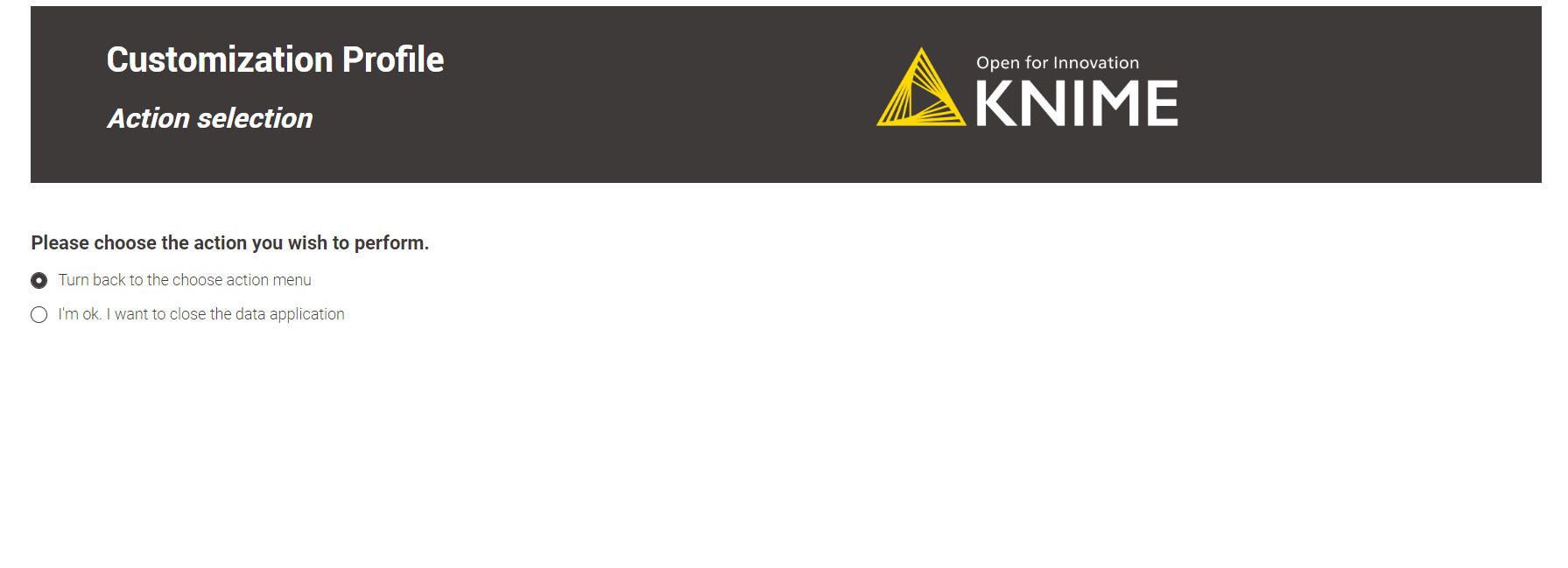

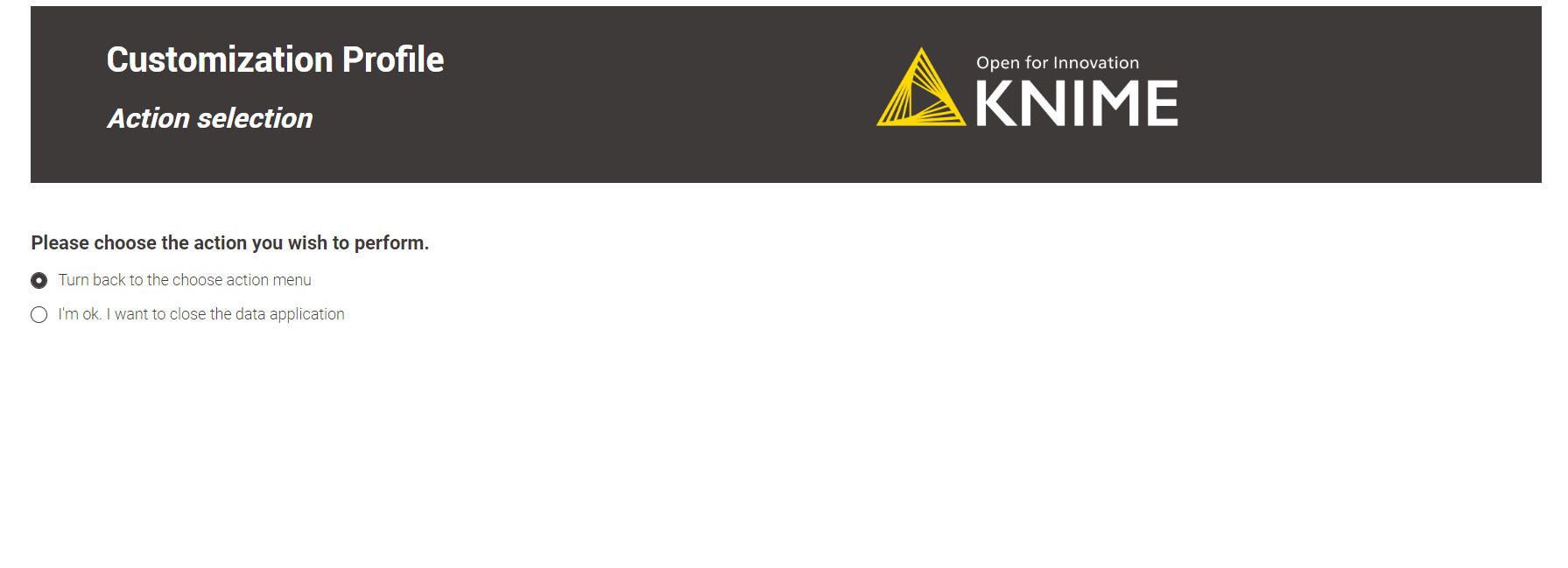

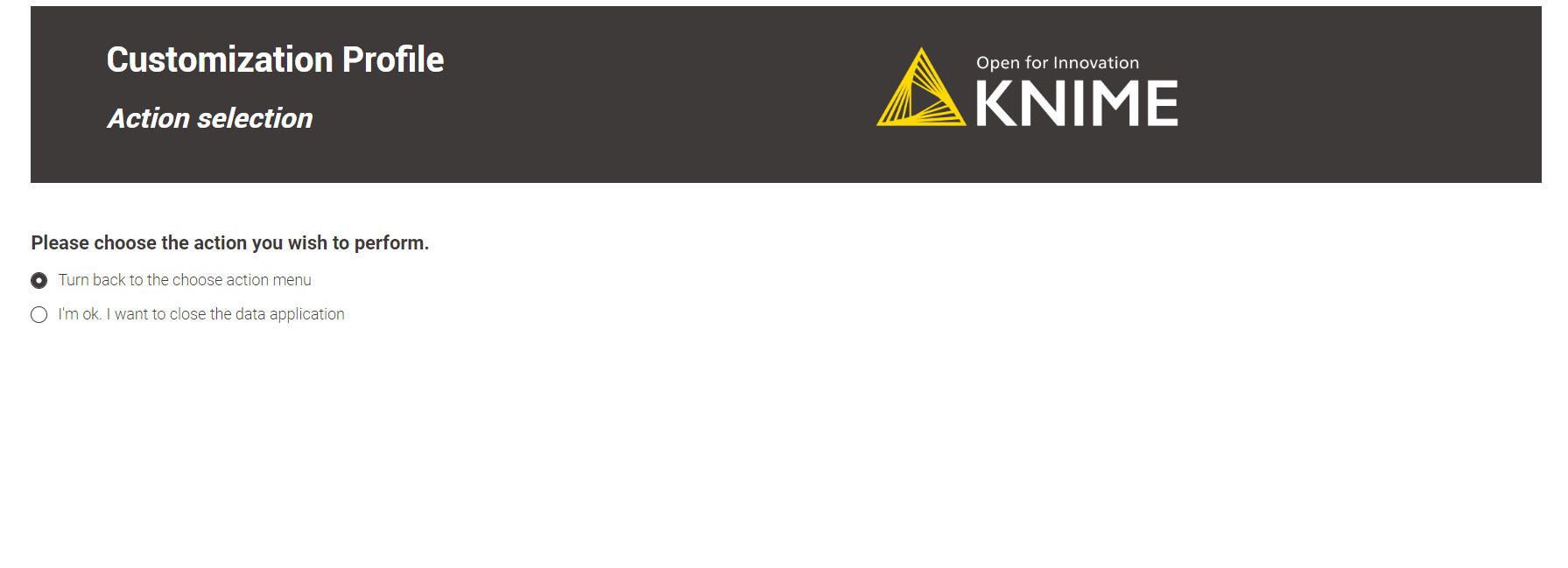

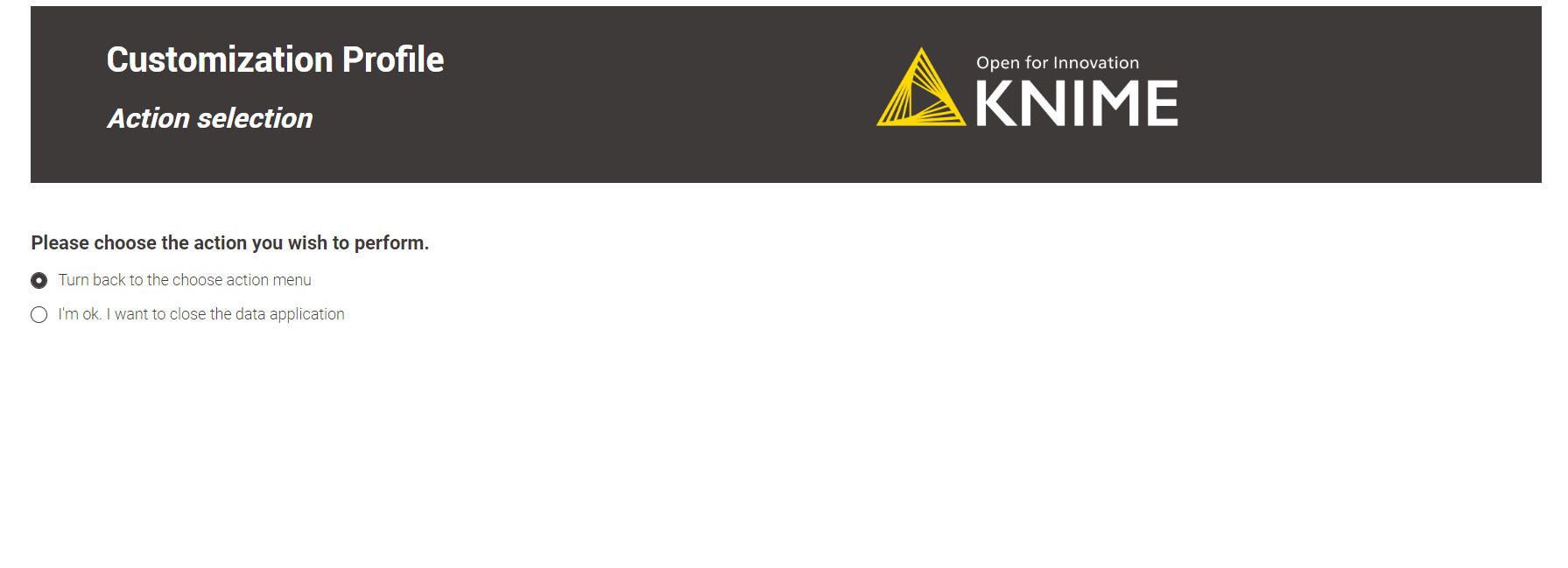

If you encounter an error message, click Next and select the Turn back to the choose action menu option. Repeat the operation, fixing the error.

Figure 15. Turn back to the action menu and repeat the operation.


Click Next to finish the operation. You can return to the Action Selection menu to perform additional actions or close the data application directly.

Upload via REST request

- If you have not done so already, on KNIME Business Hub, create an application password for the user uploading the profile.

  - For global customization profiles, use a global admin user.

  - For team’s customization profiles, the user can be either a global admin user or a team admin user.

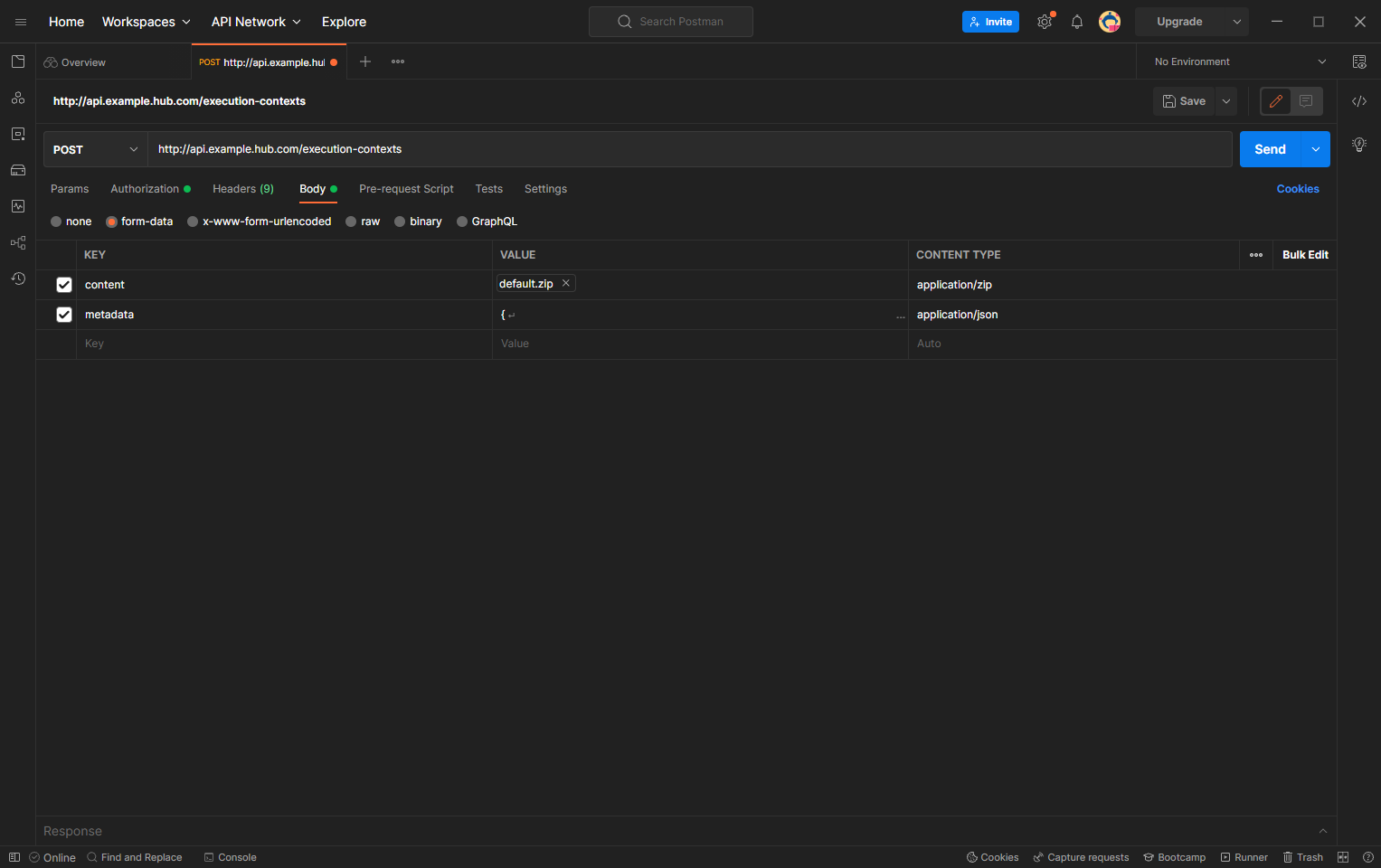

- Send a POST request to https://api.<base-url>/execution/customization-profiles with the following set up:

  - Authorization: select Basic Auth, using username and password from the created application password

  - Body: select form-data as request body type and add the following entries:

    - Add a new key, set content to “File”. Name of the key needs to be “content”. The value of the key is the .zip file containing the profile, and the content type is application/zip.

    - Add a new key, set content to “Text”. Name of the key needs to be “metadata”.

      - The value of the key is:

        For global customization profiles enter:

        { "name": "<profile_name>", "scope": "hub:global" }

        For team’s customization profiles you first need to obtain the <team_ID> of the team you want to upload the customization profile to. To do so you can use the following GET request:

        GET api.<base-url>/accounts/identity

        You will find the <team_ID> in the body response under teams:

        ... "teams": [ { "id": "account:team:<team_ID>", "name": "<team_name>", ... } ] ...

        Then the value of the key is:

        { "name": "<profile_name>", "scope": "account:team:<team_ID>" }

      - Set the content type for the second key to application/json

  - When using Postman there is no need to manually adjust the headers

- Send the request

  Figure 16. Set up Postman for a REST call to upload a customization profile to a KNIME Hub instance

- If successful, you will receive a 201 status response. Make note of the created <profile_ID>, as this will be used to refer to the profile when requesting it.

| You can refer to the API documentation at the following URL for more details about the different calls you can make to the Customization Profiles endpoint. http://api.<base-url>/api-doc/execution/#/Customization%20Profiles |
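The upload request described above can also be scripted instead of being assembled in Postman. The following is a minimal sketch in Python that uses only the standard library; it is not an official KNIME client. The function names `team_scope` and `build_upload_request`, the file name `profile.zip`, and the use of `https://` for the host are assumptions made for illustration. The sketch only builds the request (form-data body with the `content` and `metadata` keys plus the Basic Auth header); sending it is left to the caller.

```python
import base64
import json
import uuid
import urllib.request


def team_scope(identity: dict, team_name: str) -> str:
    """Return the "account:team:<team_ID>" value of a team, taken from the
    body of the GET /accounts/identity response described above."""
    for team in identity.get("teams", []):
        if team.get("name") == team_name:
            return team["id"]
    raise KeyError(f"no team named {team_name!r} in identity response")


def build_upload_request(base_url, username, app_password, zip_bytes,
                         profile_name, scope="hub:global"):
    """Build (but do not send) the multipart POST for a profile upload."""
    boundary = uuid.uuid4().hex
    metadata = json.dumps({"name": profile_name, "scope": scope})
    parts = [
        # Key "content": the .zip file, sent as application/zip.
        # The filename "profile.zip" is a placeholder (assumption).
        (b'Content-Disposition: form-data; name="content"; '
         b'filename="profile.zip"',
         b"Content-Type: application/zip", zip_bytes),
        # Key "metadata": JSON text with the profile name and scope.
        (b'Content-Disposition: form-data; name="metadata"',
         b"Content-Type: application/json", metadata.encode("utf-8")),
    ]
    body = b""
    for disposition, content_type, payload in parts:
        body += b"--" + boundary.encode() + b"\r\n"
        body += disposition + b"\r\n" + content_type + b"\r\n\r\n"
        body += payload + b"\r\n"
    body += b"--" + boundary.encode() + b"--\r\n"

    # Basic Auth with the application password credentials.
    token = base64.b64encode(f"{username}:{app_password}".encode()).decode()
    return urllib.request.Request(
        f"https://api.{base_url}/execution/customization-profiles",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )
```

To send the request, pass the returned object to `urllib.request.urlopen`; according to the steps above, a 201 status indicates success. For a team-scoped upload, pass the value returned by `team_scope` as the `scope` argument.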

Apply a customization profile

Apply a customization profile to KNIME Hub executor

Apply via Data App

To apply a customization profile to all executors running in a KNIME Business Hub execution context, we can use the data application. However, the type of user logged in affects the operations that can be performed. Refer to the table below for a comprehensive overview.

| User type | Eligible customization profile | Customization profile type | Eligible Execution Context |
|---|---|---|---|
| Global Admin | All uploaded within the KNIME Business Hub instance | Global | Shared and any team specific execution context |
| Global Admin | All uploaded within the KNIME Business Hub instance | Team-scoped | Any team execution contexts |
| Team admin | Only those uploaded in teams in which the user has team admin rights | Team-scoped only | Only the team execution contexts where the customization profile was uploaded. Shared execution contexts are not eligible |

To provide a better understanding of the table, here are some examples to demonstrate its functionality:

- A Global Admin can choose one team-scoped customization profile and apply it to any team-scoped execution context within the KNIME Business Hub instance. For instance, the Global Admin can apply Team A’s customization profile to the execution context of Team B, i.e., execution context B.1.

- A Global Admin can also select a Global customization profile and apply it to any shared and team-scoped execution context within the KNIME Business Hub instance.

- A team admin can only choose team-scoped customization profiles uploaded within the teams in which they have admin rights. For example, they can only apply a customization profile uploaded in Team A within the Team A execution contexts.

Learn how to download the data app from Community Hub, upload and deploy it in KNIME Business Hub, and authenticate with your application password in the Upload a customization profile section.

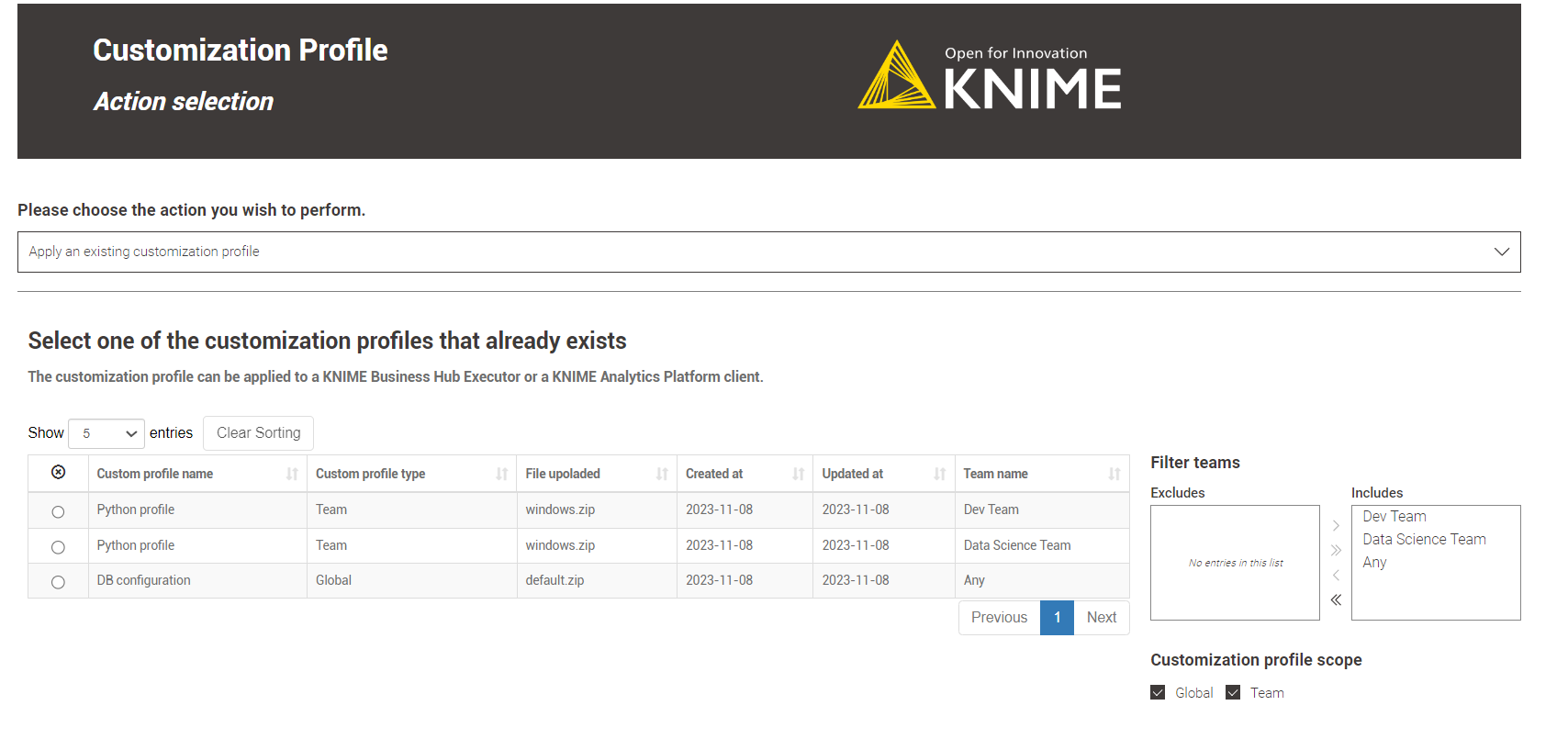

Select Apply an existing Customization Profile in the Action Selection menu to apply a Customization Profile.

Choose the desired customization profile from the table and click Next. In the table, if the Team name column has a value of Any, the customization profile has a global scope and can be applied to any team.

Figure 17. A Global Admin could select a global or team-scoped customization profile.

It is only possible to apply one customization profile at a time. To apply multiple customization profiles, repeat the operation.

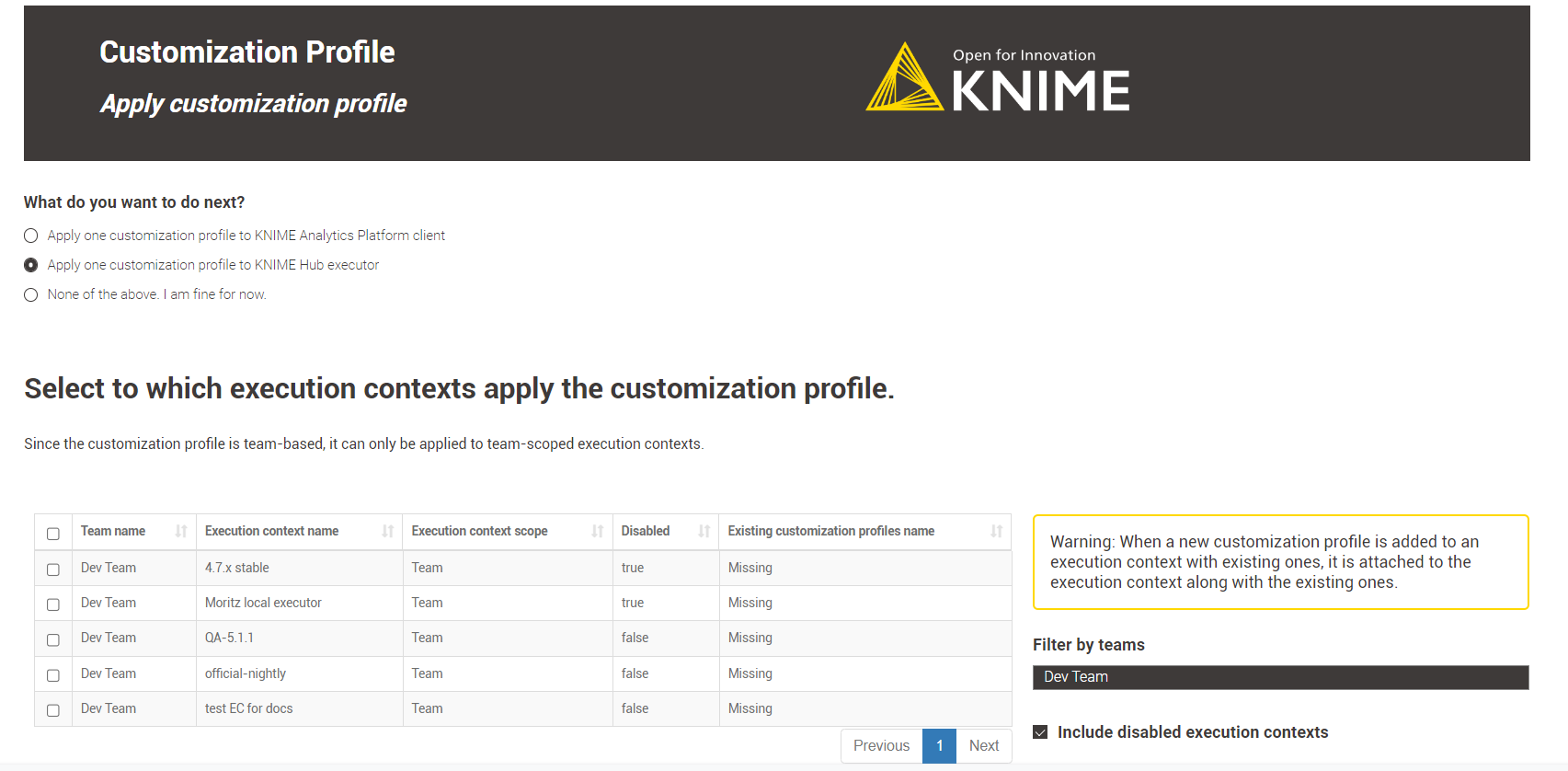

When you reach the Apply customization profile step, choose the option Apply one customization profile to KNIME Hub Executor. You’ll see a table that shows the available execution contexts based on your user type and the previously selected customization profile scope.

Applying a new customization profile with the same name as an existing one to an execution context causes an internal error. Check the "Existing customization profiles name" column to avoid this. Select one or more execution contexts from the table where you want to apply the customization profile and click Next.

Figure 18. The team admin can apply a customization profile uploaded to the Dev Team to the team-scoped execution contexts.


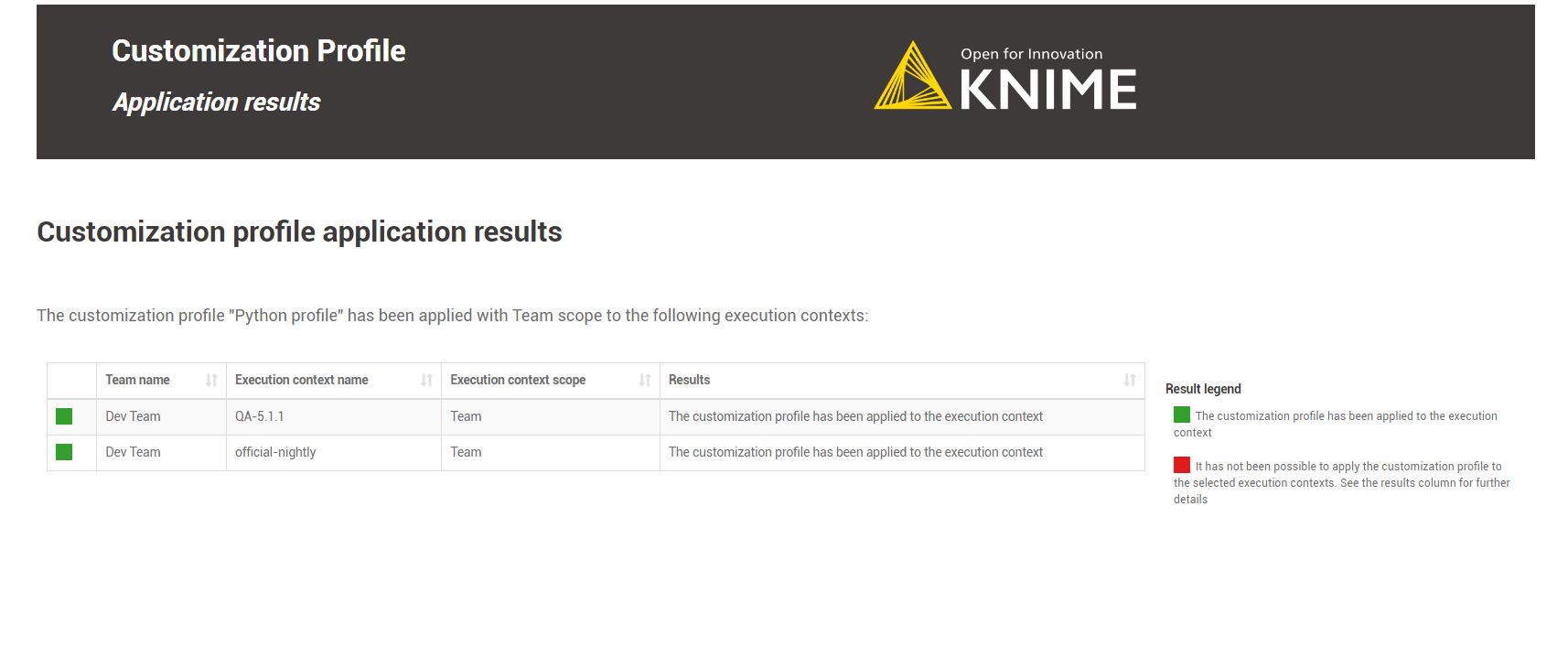

In the Application Results step, a table displays the results of the customization profile application operation, along with a legend.

If everything goes well, the green light indicates that your customization profile has been successfully applied to KNIME Business Hub executors for the selected execution contexts.

Figure 19. The team admin has applied the Python profile to two different execution contexts for the same team.


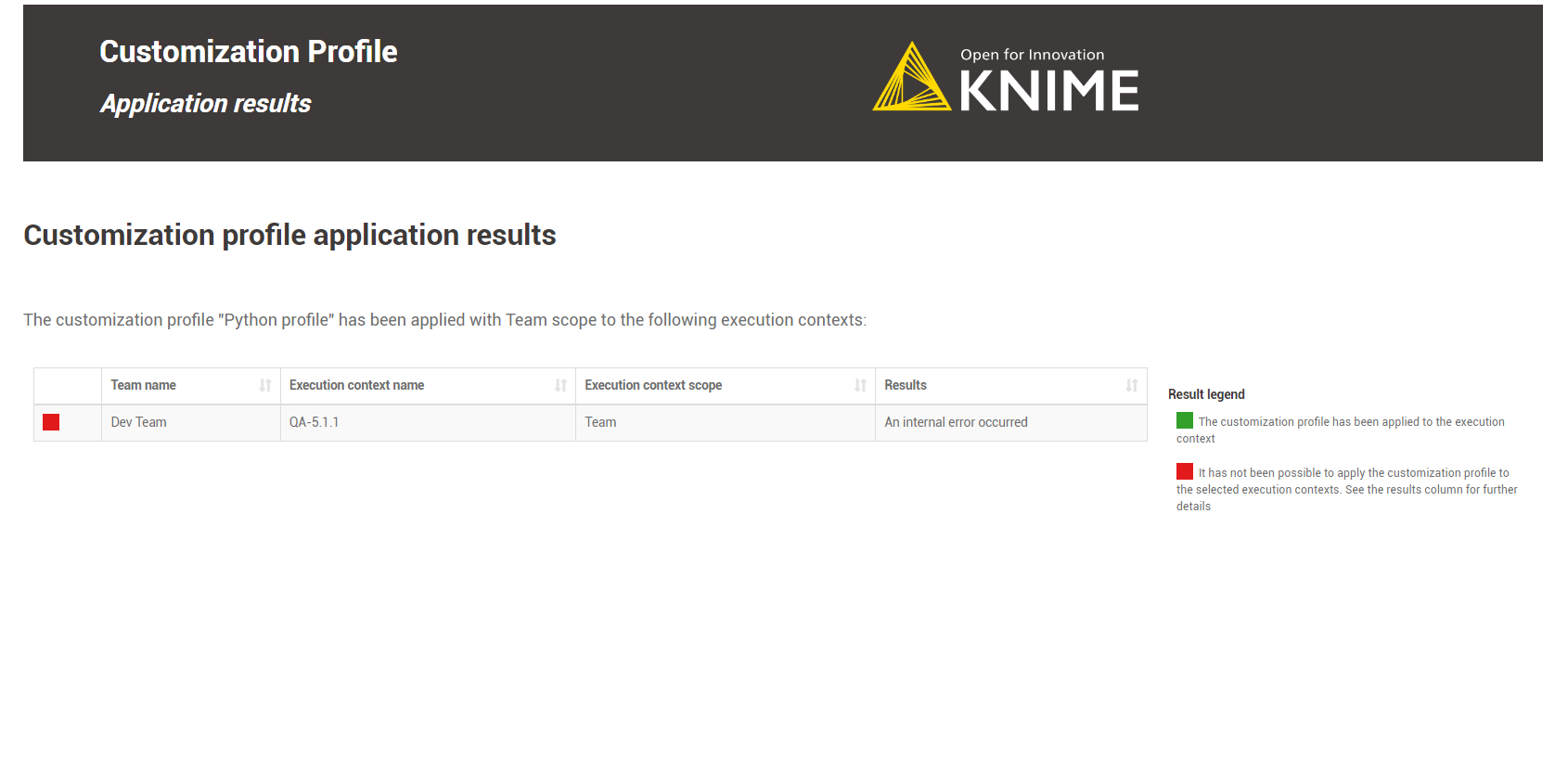

Error Handling: The data application handles the following types of errors for the apply option:

- No customization profiles were uploaded to the KNIME Business Hub.

- Attempting to apply a customization profile to an execution context that already has a customization profile with the same name.

  Figure 20. A view of the error.

- No customization profile was selected.

- No execution contexts were chosen to apply the customization profile to.

If you encounter an error message, click Next and select the Turn back to the choose action menu option. Repeat the operation, fixing the error.

Figure 21. Turn back to the action menu and repeat the operation.

Click Next to finish the operation. You can return to the Action Selection menu to perform additional actions or close the data application directly.

Apply via REST request

In order to apply a customization profile to all executors running in a KNIME Business Hub execution context, you will need to send a request to the execution context endpoint.

First you need to get the execution context ID. To do so you can use the following GET request to get a list of all the execution contexts that are owned by a specific team:

GET api.<base-url>/execution-contexts/account:team:<team_ID>

| If you are a global admin you can also GET a list of all the execution contexts available on the Hub instance with the call GET api.<base-url>/execution-contexts/. |

Now you can apply the new customization profile to the selected execution context.

You will need to obtain the <profile_ID> using the following GET request:

- For global customization profiles:

  GET api.<base-url>/execution/customization-profiles/hub:global

- For team’s customization profiles:

  GET api.<base-url>/execution/customization-profiles/account:team:<team_ID>

  Refer to the above section to find out how to get the <team_ID>.

Then you need to update the execution context by using the following PUT request:

PUT api.<base-url>/execution-contexts/<execution-context_ID>

You need to select Body > raw > JSON and add the following to the request body:

{ "customizationProfiles": [ "<profile_ID>" ] }

This will cause a restart of all executor pods of this execution context, after which the profile will be applied.

| At present, this will also terminate all jobs currently running on the executor. |
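The PUT request above can be sketched in Python with the standard library as follows. This is a hedged illustration rather than an official client: the function name `build_apply_request`, the `https://` scheme, and the use of Basic Auth with an application password for this endpoint are assumptions. The request body only contains the `customizationProfiles` key shown above; whether other execution context settings must be sent along is not covered here.

```python
import base64
import json
import urllib.request


def build_apply_request(base_url, username, app_password,
                        execution_context_id, profile_ids):
    """Build (but do not send) the PUT that sets the customization
    profiles of one execution context."""
    token = base64.b64encode(f"{username}:{app_password}".encode()).decode()
    body = json.dumps({"customizationProfiles": list(profile_ids)})
    return urllib.request.Request(
        f"https://api.{base_url}/execution-contexts/{execution_context_id}",
        data=body.encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )


# Sending is left commented out on purpose: it restarts the executor pods
# of the execution context and terminates the jobs running on them.
# request = build_apply_request("<base-url>", "<username>", "<password>",
#                               "<execution-context_ID>", ["<profile_ID>"])
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```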

Update a customization profile

It is possible to update customization profiles uploaded to KNIME Business Hub. This can be done even if the customization profile has already been applied to a KNIME Business Hub executor via an execution context. You can update two features:

- The customization profile name.

- The customization profile zip file, which can be overwritten. Refer to the create a customization profile section to learn how to make one.

Update via Data Application

Users can use the customization profile data app to update customization profiles previously uploaded to the KNIME Business Hub.

Depending on the user’s role as a Global Admin or a Team Admin, they can access and update specific customization profiles:

- Global Admin: can update all uploaded customization profiles within the current KNIME Business Hub instance, either team or global-scoped customization profiles.

- Team Admin: can only update team-scoped customization profiles from their own teams.

Learn how to download the data app from Community Hub, upload and deploy it in KNIME Business Hub, and authenticate with your application password in the Upload a customization profile section.

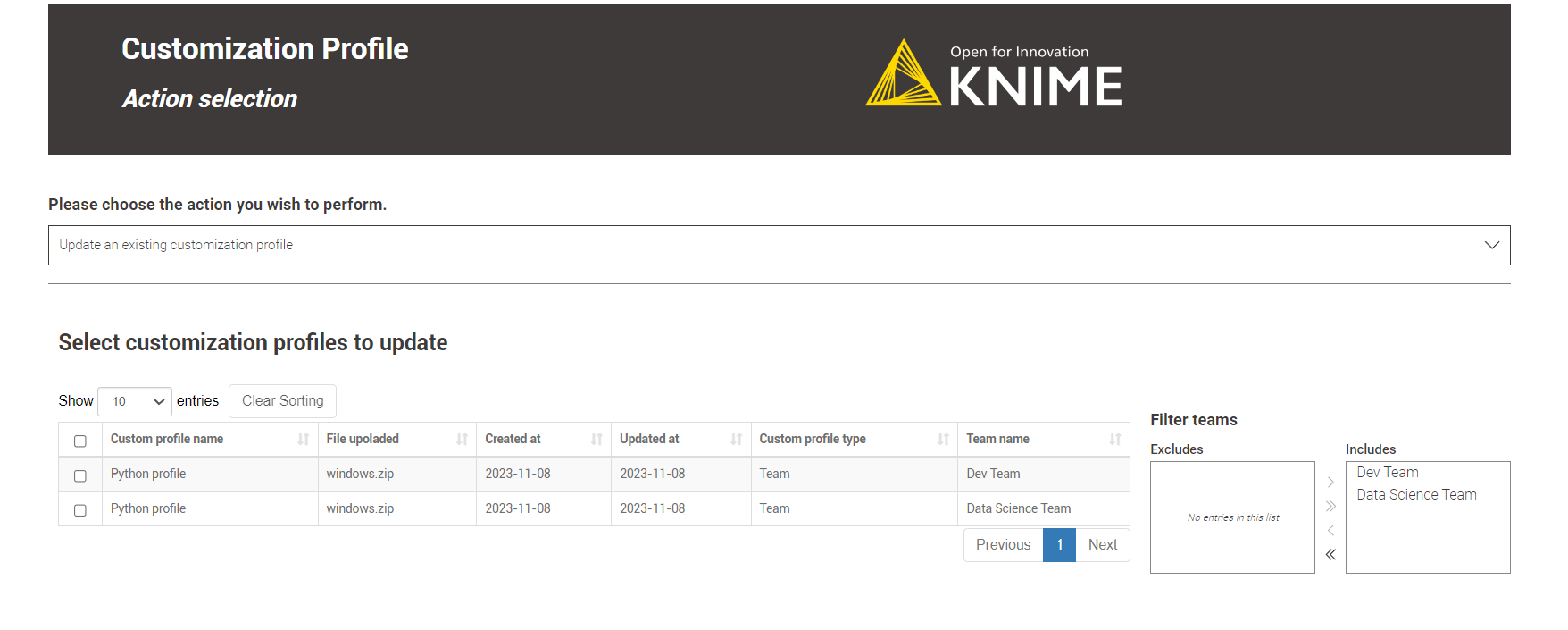

Select Update an existing Customization Profile in the Action Selection menu to update a Customization Profile. Choose the desired customization profiles from the table and click Next.

Figure 22. A team admin could select only team-scoped customization profiles.

You can choose to update multiple customization profiles at the same time.

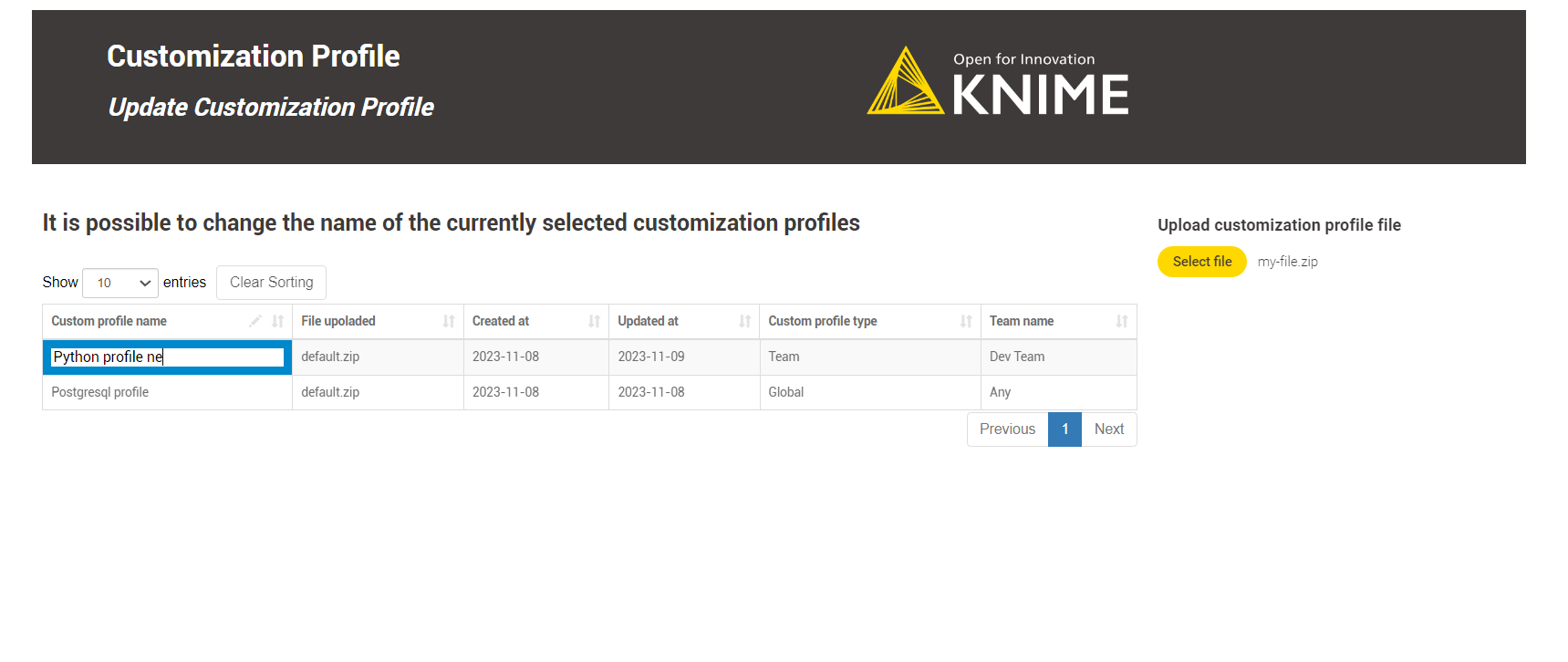

In the Update Customization Profile step, you can change the customization profile name by double-clicking the corresponding table cell and uploading a new customization profile to overwrite the existing one.

Refer to the "File uploaded" column for the currently assigned customization profile file.

Figure 23. A Global Admin updating two customization profiles at once.

Updating multiple customization profiles at once is possible, but it’s important to note that you can only upload a single customization profile file for all the customization profiles. Although each customization profile can be renamed, they will all share the same customization profile file.

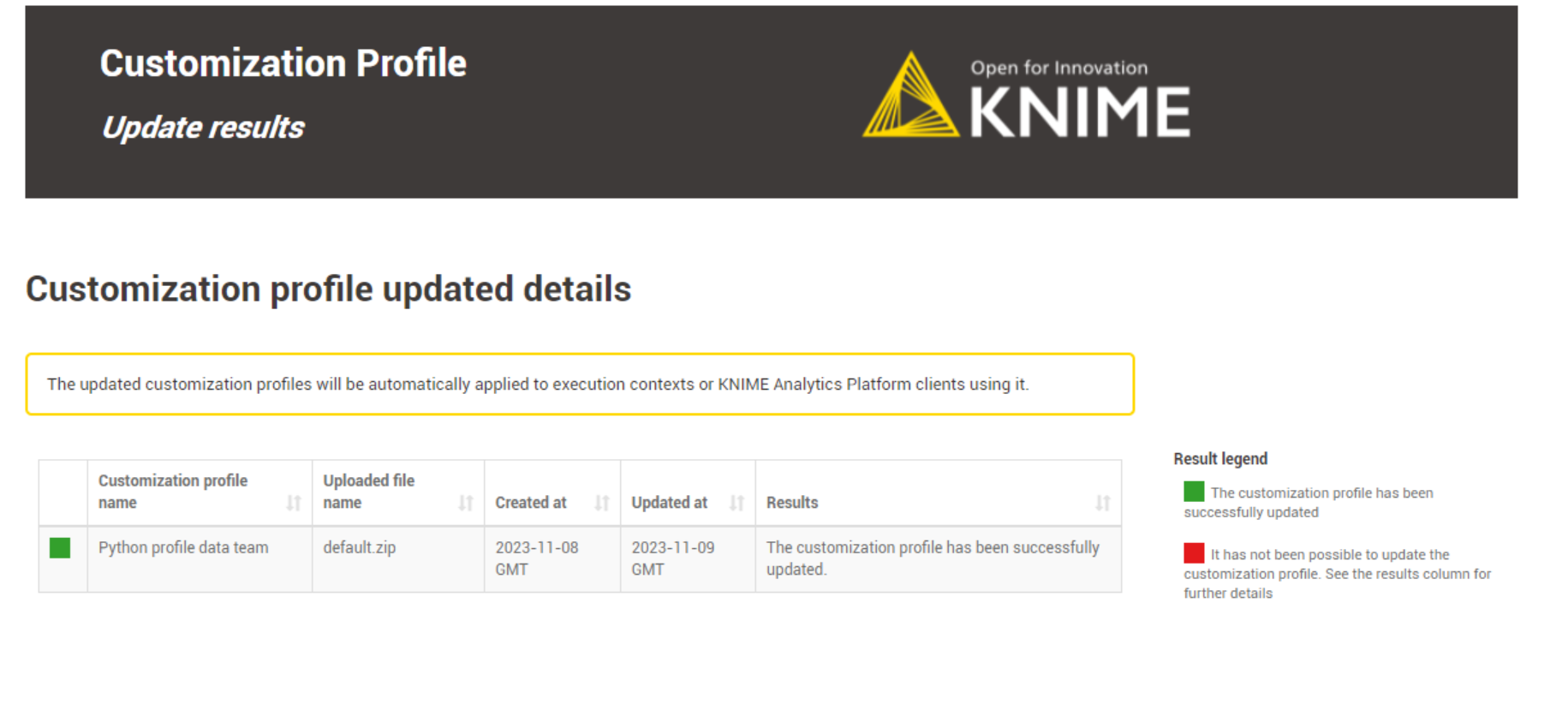

In the Update Results step, a table displays the results of the customization profile updating operation, along with a legend.

If everything goes well, the green light indicates that your selected customization profiles have been successfully updated with new names and a new customization profile file.

Figure 24. The outcome of updating two customization profiles.

The updated customization profiles will be automatically applied to the execution contexts or KNIME Analytics Platform clients using them.

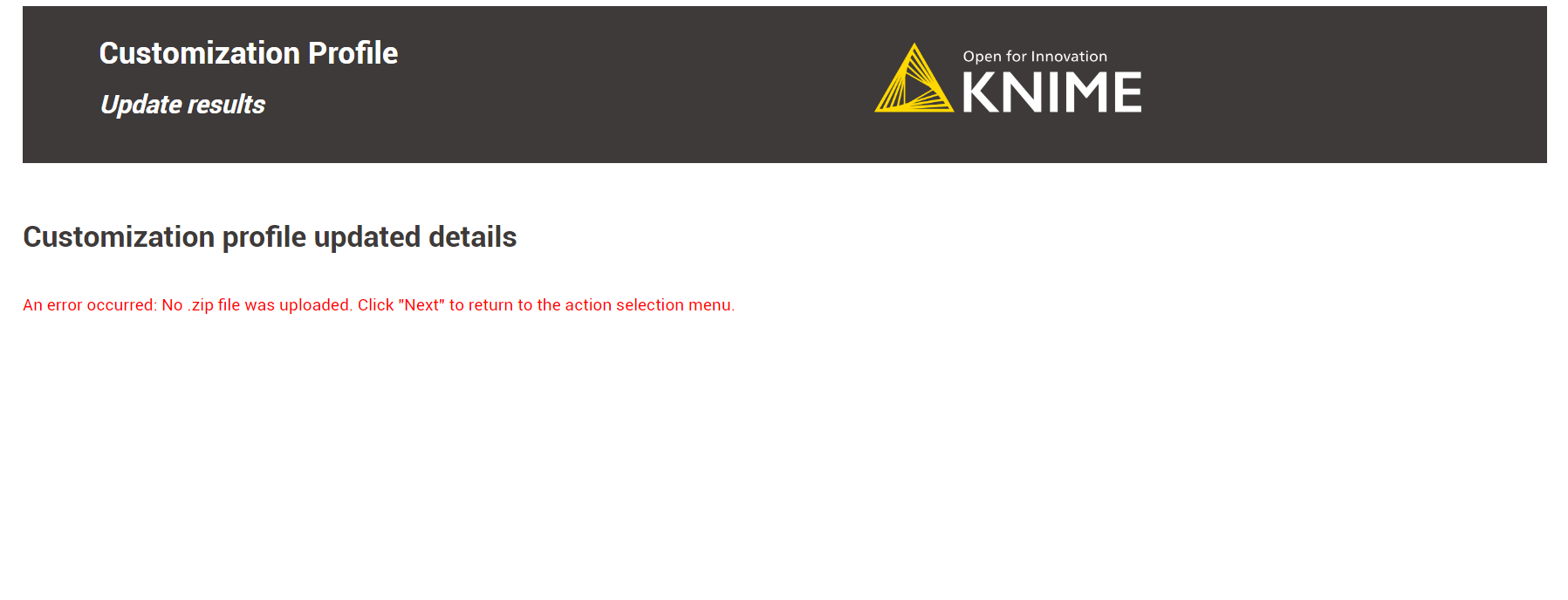

Error Handling: The data application handles two types of errors for the update option:

- An error will occur if the user does not upload a new customization profile file during the Update Customization Profile step.

  Figure 25. A view of the error.

- No customization profiles were selected in the Update Customization Profile step.

If you encounter an error message, click Next and select the Turn back to the choose action menu option. Repeat the operation, fixing the error.

Figure 26. Turn back to the action menu and repeat the operation.

Click Next to finish the operation. You can return to the Action Selection menu to perform additional actions or close the data application directly.

Update via REST request

Updating a customization profile is like replacing an existing profile with a new file and name.

If you want to update a customization profile via REST request, the process is similar to the uploading process. The only difference is that instead of a POST Request, you need to perform a PUT Request and specify the ID of the customization profile.

- If you have not done so already, on KNIME Business Hub, create an application password for the user uploading the profile.

  - For global customization profiles, use a global admin user.

  - For team’s customization profiles, the user can be either a global admin user or a team admin user.

- To begin, you will need to get the list of all uploaded customization profiles on KNIME Business Hub. You can obtain the <profile_ID> using the following GET request:

  For global customization profiles:

  GET api.<base-url>/execution/customization-profiles/hub:global

  For team’s customization profiles:

  GET api.<base-url>/execution/customization-profiles/account:team:<team_ID>

  Refer to the above section to learn how to get the <team_ID>.

Updating a customization profile is similar to uploading it via a REST Request. However, unlike uploading, we only need to provide the name and file of the customization profile for updating. So, we don’t need to provide the scope as it remains the same.

- Send a PUT request to https://api.<base-url>/execution/customization-profiles/<customization-profile-ID> with the following set up:

  - Authorization: select Basic Auth, using username and password from the created application password

  - Body: select form-data as request body type and add the following entries:

    - Add a new key, set content to “File”. Name of the key needs to be “content”. The value of the key is the .zip file containing the profile, and the content type is application/zip.

    - Add a new key, set content to “Text”. Name of the key needs to be “metadata”.

      - The value of the key is the same for global and team-scoped customization profiles:

        { "name": "<profile_name>" }

      - Set the content type for the second key to application/json

  - When using Postman there is no need to manually adjust the headers

- Send the request

Detach a customization profile

It’s important to know that detaching a customization profile is not the same as deleting it. When you detach a customization profile, it isn’t removed from KNIME Business Hub. Instead, it just means that the customization profile won’t be applied to the KNIME Analytics Platform or the KNIME Business Hub executors.

However, the customization profile is still available in KNIME Business Hub, so it can be reused again whenever needed.

Detach a customization profile from a KNIME Business Hub executor

Detaching a customization profile applies only to those customization profiles applied to a KNIME Business Hub executor via execution context. Separating a customization profile from its execution context is the prerequisite step to deleting a customization profile from a KNIME Business Hub instance.

Detaching a customization profile from an execution context can also be done if the user no longer wants to apply the customization to the executors.

Detach via Data Application

The data application allows users to detach customization profiles applied to execution contexts in KNIME Business Hub.

For instructions on how to apply a customization profile to an execution context, refer to the Apply a customization profile to KNIME Hub executor section.

The customization profiles available for detachment depend on the user type:

- Global Admin: can detach all applied customization profiles within the current KNIME Business Hub instance, either team or global-scoped customization profiles.

- Team Admin: can only detach team-scoped customization profiles from their own teams.

Learn how to download the data app from Community Hub, upload and deploy it in KNIME Business Hub, and authenticate with your application password in the Upload a customization profile section.

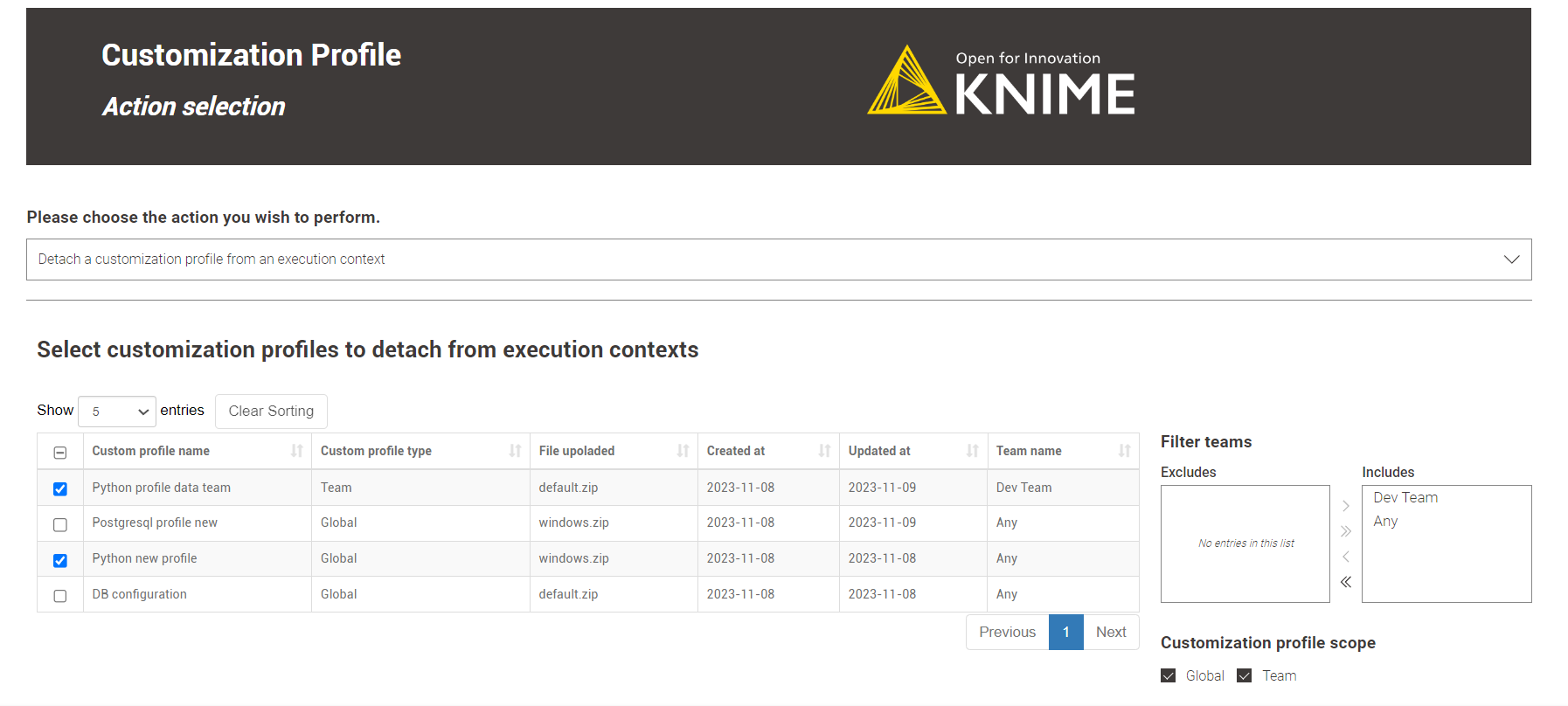

Select Detach a customization profile from an execution context in the Action Selection menu to detach a customization profile. Choose the desired customization profiles from the table and click Next.

Figure 27. A Global admin is able to detach either team or global-scoped customization profiles.

You can choose to detach multiple customization profiles at the same time.

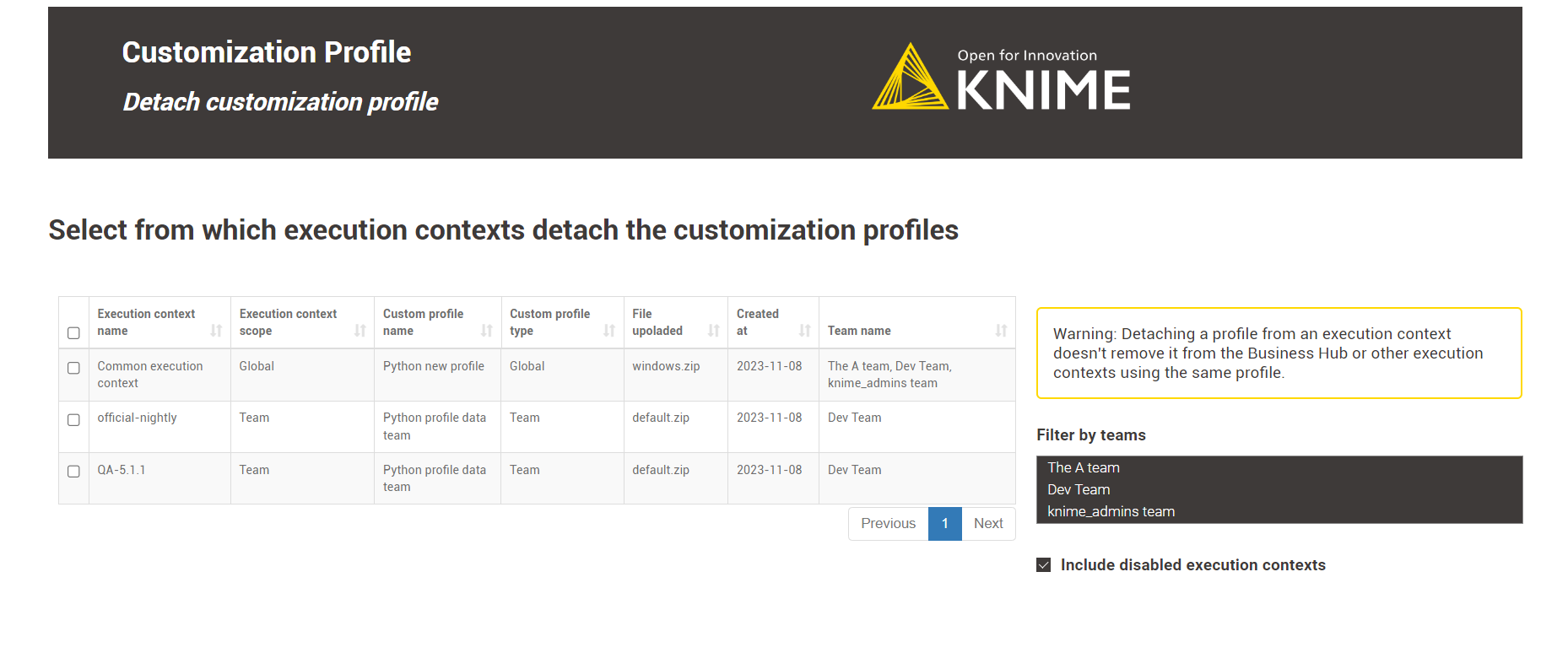

Once you click on Next, a table will be displayed showing all the execution contexts where the selected customization profiles have been applied.

- At this point, you can choose multiple execution contexts to detach the customization profile from.

- Notice that for execution contexts with global scope, all teams where they are in use are listed in the column Team name. By detaching the customization profile from such an execution context, you will detach it from all the teams where the execution context is in use.

Figure 28. Here’s an example of a global execution context shared between three teams.

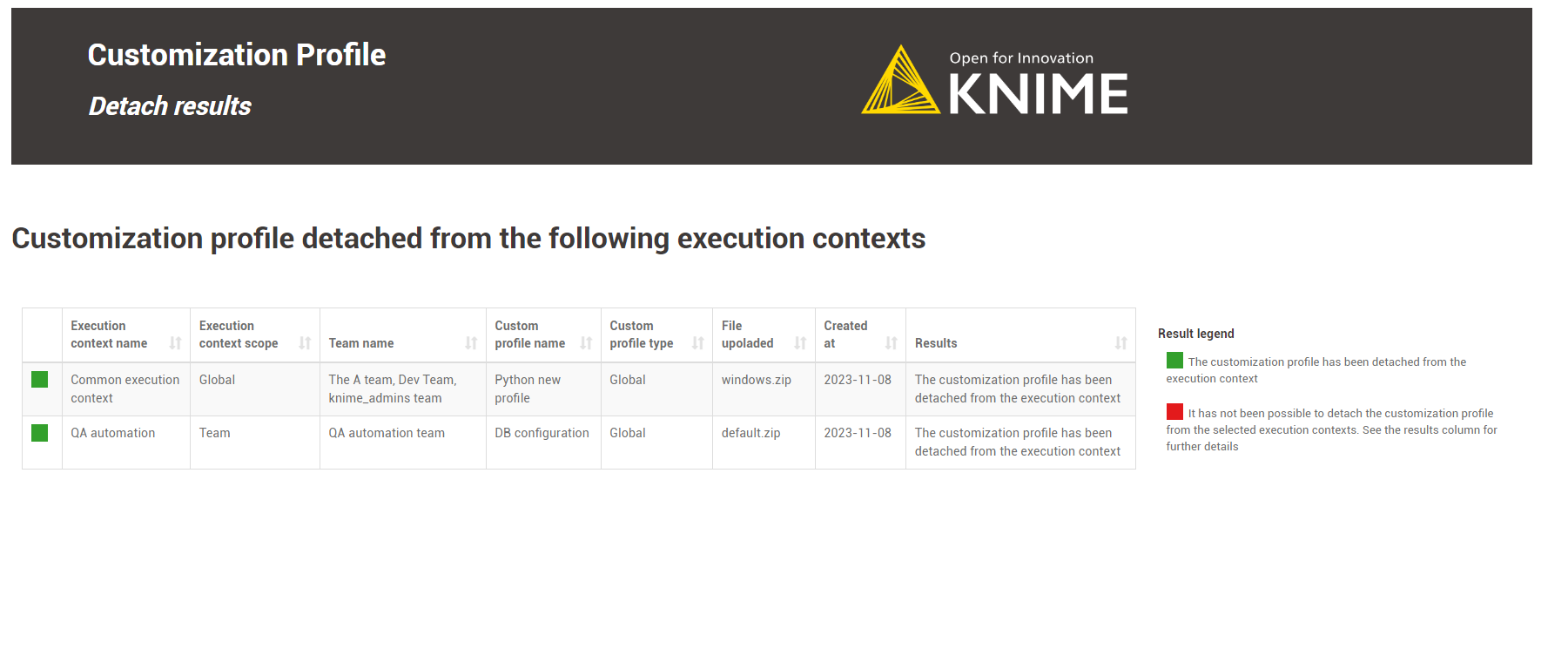

In the Detach Results step, a table displays the results of the customization profile detach operation.

If everything goes well, the green light indicates that your selected customization profiles have been successfully detached from the selected execution contexts.

Figure 29. The outcome of detaching two customization profiles.

Detaching a customization profile from an execution context doesn’t remove it from the Business Hub or other execution contexts using the same profile.

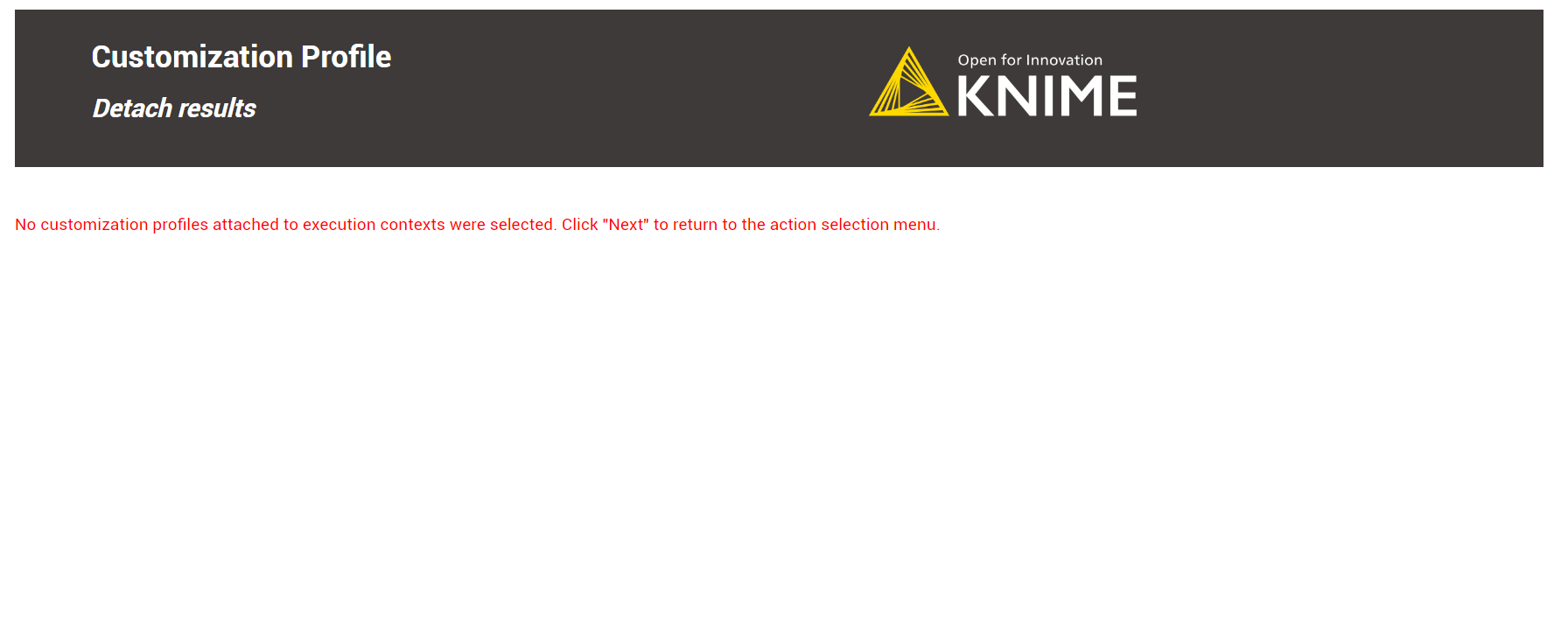

Error Handling: The data application handles three types of errors for the detach option:

- An error will occur if no customization profiles are applied to any KNIME Business Hub execution context. In this case, you must first select the option to Apply an existing customization profile.

- An error is raised if the user:

  - Does not select any customization profile to detach.

  - Does not select any execution contexts from which to detach the customization profiles.

  Figure 30. A view of one of the possible errors.

If you encounter an error message, click Next and select the Turn back to the choose action menu option. Repeat the operation, fixing the error.

Figure 31. Turn back to the action menu and repeat the operation.

Click Next to finish the operation. You can return to the Action Selection menu to perform additional actions or close the data application directly.

Detach via REST request

You can detach a customization profile from a KNIME Business Hub execution context, which is the inverse of applying it. The steps for detaching a customization profile are similar to applying one.

To detach a customization profile from all executors running in a KNIME Business Hub execution context, you must send a request to the execution context endpoint whose body omits the ID of the customization profile that you want to detach.

- If you have not done so already, on KNIME Business Hub, create an application password for the user uploading the profile.

  - For global customization profiles, use a global admin user.

  - For team’s customization profiles, the user can be either a global admin user or a team admin user.

- First, you need to get the execution context ID. To do so, you can use the following GET request to get a list of all the execution contexts that are owned by a specific team:

  GET api.<base-url>/execution-contexts/account:team:<team_ID>

  If you are a Global Admin you can also GET a list of all the execution contexts available on the Hub instance with the call GET api.<base-url>/execution-contexts/.

- Retrieve the existing customization profiles in the execution context from the response to the above GET request. Look for a key in the JSON body similar to:

  "customizationProfiles" : [ "<customization-profile-ID_1>", "<customization-profile-ID_2>" ]

- Now, you can detach the target customization profile from the selected execution context. To do so, you need to update the execution context by using the following PUT request:

  PUT api.<base-url>/execution-contexts/<execution-context_ID>

- To detach a customization profile, e.g. <customization-profile-ID_1>, from the target execution context, select Body > raw > JSON and ensure you do not include the customization profile you wish to remove. Use the syntax of the request body shown below:

  { "customizationProfiles": [ "<customization-profile-ID_2>" ] }

- If the target execution context has only one customization profile attached, you can detach it by sending an empty customizationProfiles list:

  { "customizationProfiles": [] }
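The request body for a detach operation is simply the list of currently attached profile IDs minus the one to remove. A minimal sketch of that step in Python follows; the function name `detach_body` is hypothetical, and the list of attached IDs is assumed to have been read from the `customizationProfiles` key of the GET response described above.

```python
import json


def detach_body(attached_ids, profile_id_to_detach):
    """Return the JSON request body that keeps every attached profile
    except the one being detached."""
    if profile_id_to_detach not in attached_ids:
        raise ValueError(f"{profile_id_to_detach!r} is not attached")
    remaining = [pid for pid in attached_ids if pid != profile_id_to_detach]
    return json.dumps({"customizationProfiles": remaining})


print(detach_body(
    ["<customization-profile-ID_1>", "<customization-profile-ID_2>"],
    "<customization-profile-ID_1>",
))
# prints {"customizationProfiles": ["<customization-profile-ID_2>"]}
```

When the detached profile was the only one attached, the function returns a body with an empty list, which matches the last case described above.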

Delete a customization profile

Deleting a customization profile from KNIME Business Hub is a straightforward operation, and you can do it either through a REST request or the data application. Please follow the step-by-step guide below to understand how it works.

Please note that the type of user determines which customization profiles they can delete:

- Global Admin: can delete all customization profiles within the current KNIME Business Hub instance, either team or global-scoped customization profiles.

- Team Admin: can only delete team-scoped customization profiles from their own teams.

| Deleting a customization profile from KNIME Business Hub requires first detaching it from any execution context where it was applied. |

Please refer to the Detach a customization profile from a KNIME Business Hub executor section to understand how to detach a customization profile.

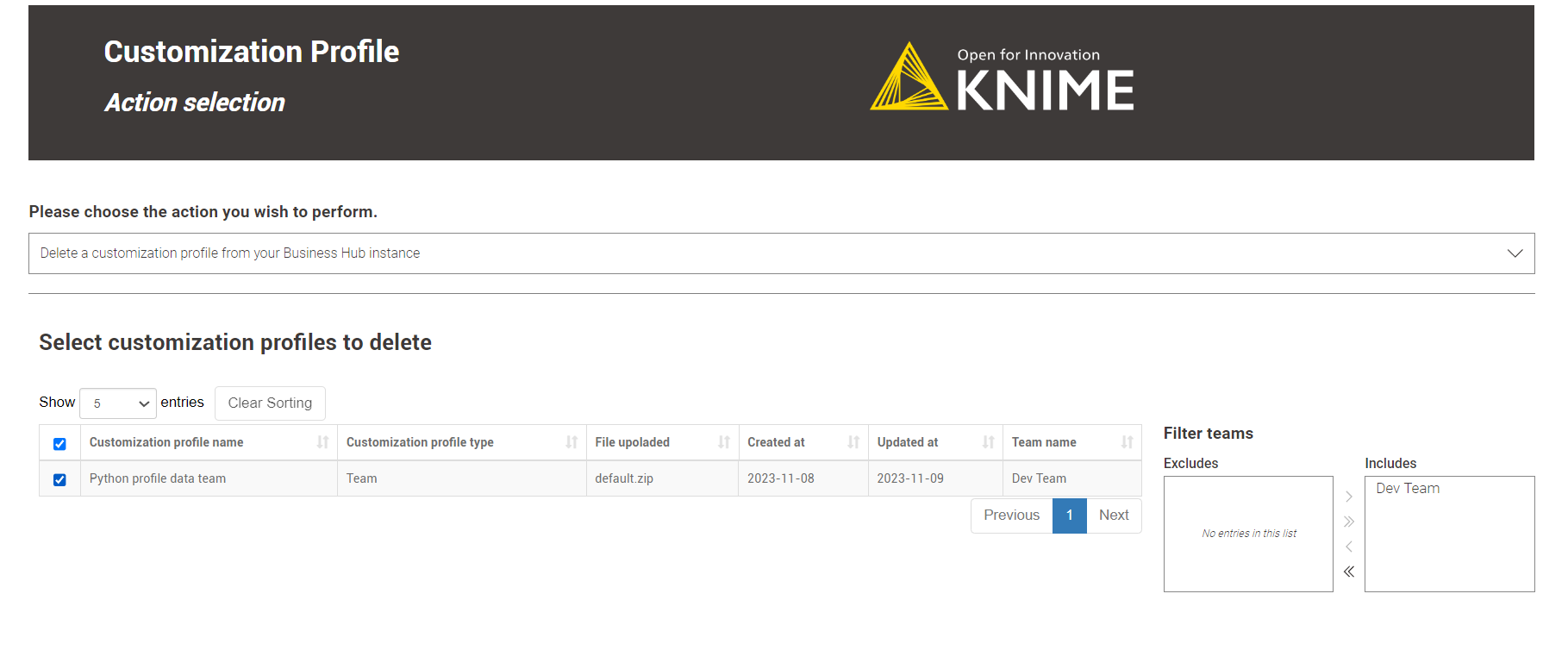

Delete via Data Application

Learn how to download the data app from Community Hub, upload and deploy it in KNIME Business Hub, and authenticate with your application password in the Upload a customization profile section.

Select Delete a customization profile from your Business Hub instance in the Action Selection menu to delete a customization profile. Choose the desired customization profiles from the table and click Next.

Figure 32. Team admins can only delete customization profiles from teams they are admin of.

You can choose to delete multiple customization profiles at the same time.

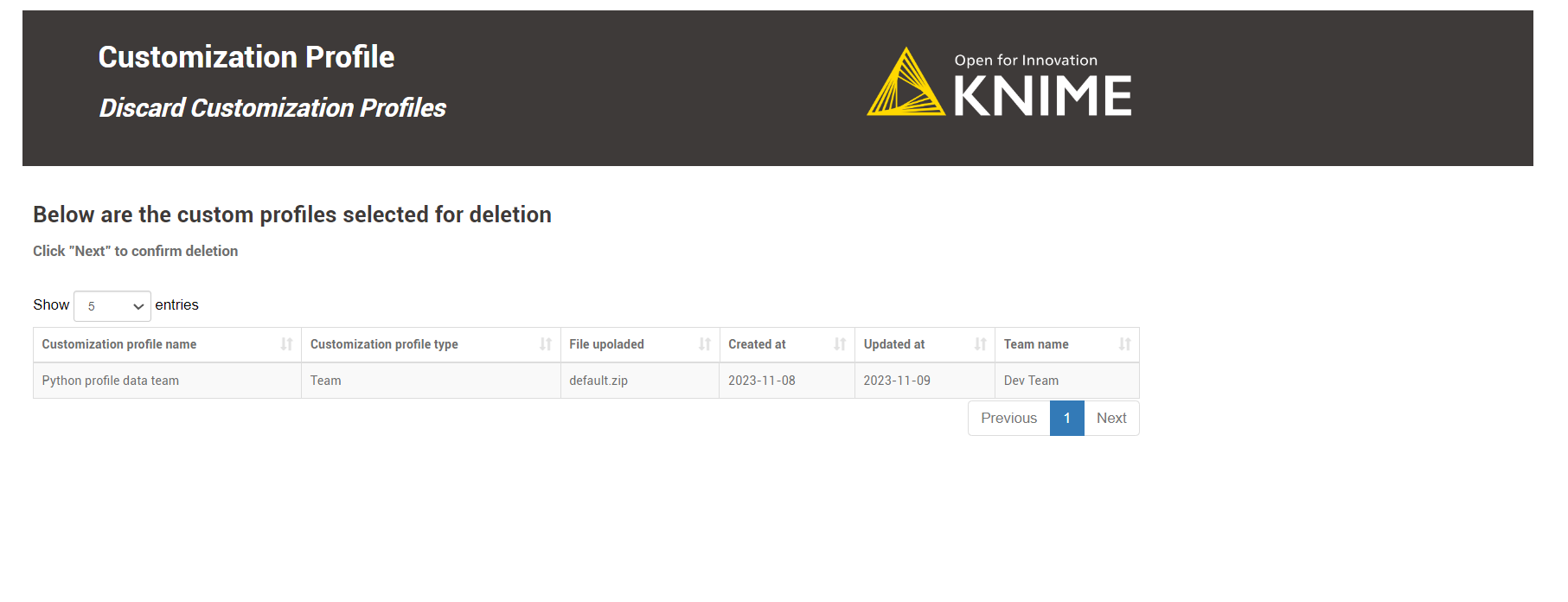

As deleting is a delicate operation, in the next step, we offer a preview of the selected customization profiles to be deleted to ensure the user is performing the desired action.

Figure 33. Preview of the selected customization profile.

Clicking Next will delete the customization profile directly.

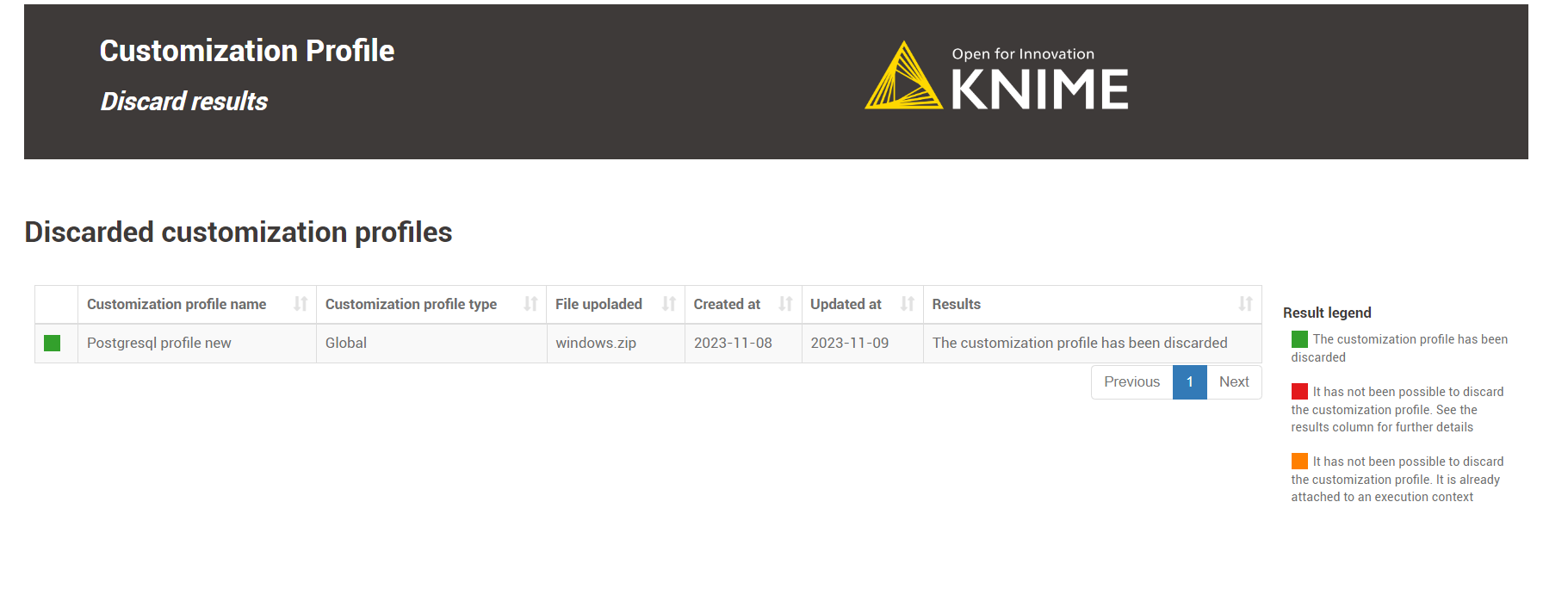

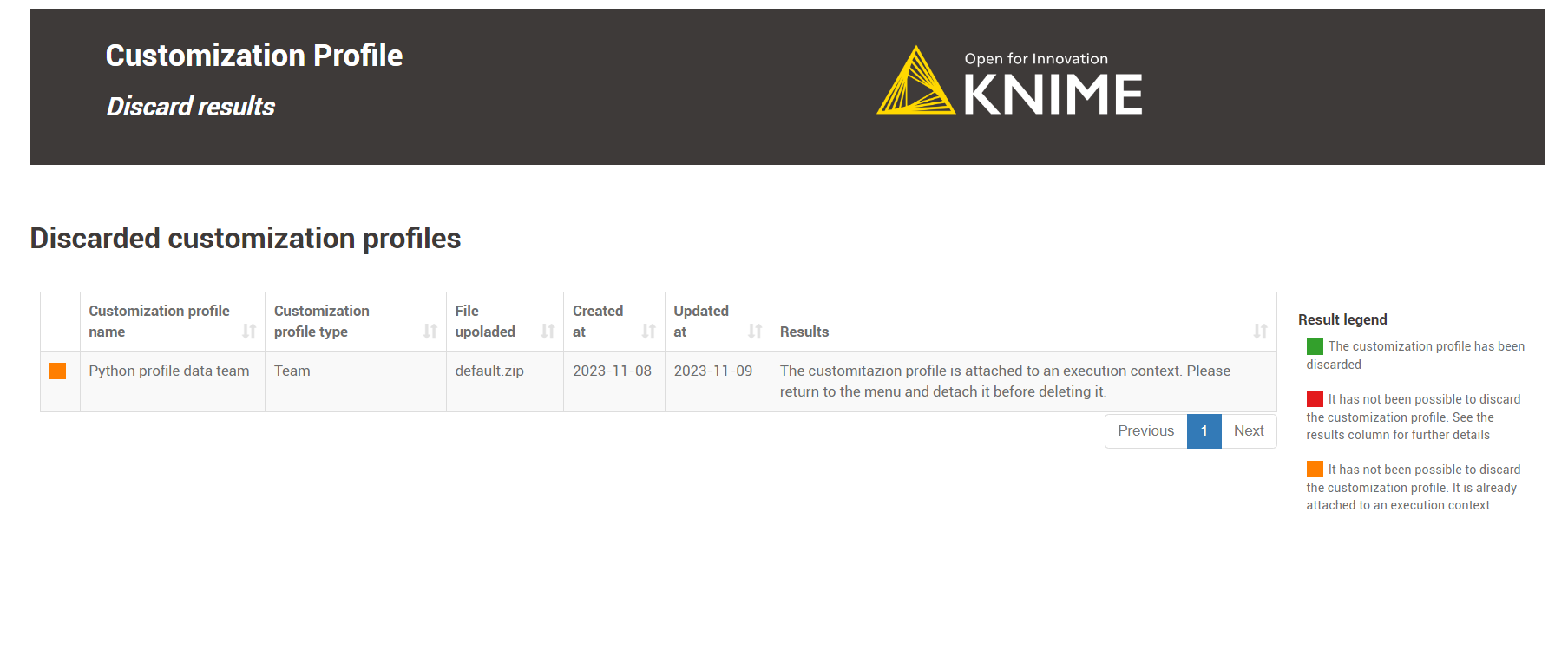

The Discard results section displays a table with a legend indicating the success or failure of deleting a customization profile. Green means success, red means failure, and orange means you need to detach the customization profile from the execution contexts first.

Figure 34. The deletion of a customization profile from KNIME Business Hub was successful.


Error Handling: The data application handles two types of errors for the delete option:

- The user doesn’t select any customization profile.

- The user tries to delete a customization profile that is still attached to an execution context. Refer to the Detach a customization profile from a KNIME Business Hub executor section for resolution.

  Figure 35. A view of one of the possible errors.

If you encounter an error message, click Next and select the Turn back to the choose action menu option. Repeat the operation, fixing the error.

Figure 36. Turn back to the action menu and repeat the operation.

Click Next to finish the operation. You can return to the Action Selection menu to perform additional actions or close the data application directly.

Delete via REST request

Deleting a customization profile from KNIME Business Hub is possible via a REST request.

Below are the steps to accomplish this:

- If you have not done so already, on KNIME Business Hub, create an application password for the user uploading the profile.

  - For global customization profiles, use a global admin user.

  - For team’s customization profiles, the user can be either a global admin user or a team admin user.

- To start, you need to retrieve the list of the customization profiles uploaded to KNIME Business Hub. You can obtain the <customization-profile-ID> using the following GET request:

  For global customization profiles:

  GET api.<base-url>/execution/customization-profiles/hub:global

  For team’s customization profiles:

  GET api.<base-url>/execution/customization-profiles/account:team:<team_ID>

  Refer to the above section to find out how to get the <team_ID>.

- Once you have the <customization-profile-ID> that you want to delete, perform a DELETE request.

  - Send a DELETE request to https://api.<base-url>/execution/customization-profiles/<customization-profile-ID> with the following set up:

    - Authorization: select Basic Auth, using username and password from the created application password

  After a successful deletion, you will receive a 20* status code.
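The DELETE request can be sketched in Python with the standard library as shown below. The function names `build_delete_request` and `deletion_succeeded` are hypothetical, and reading the 20* status code as the range 200 to 209 is an assumption about what the text above means. The sketch builds the request without sending it.

```python
import base64
import urllib.request


def build_delete_request(base_url, username, app_password, profile_id):
    """Build (but do not send) the DELETE request for one profile."""
    token = base64.b64encode(f"{username}:{app_password}".encode()).decode()
    return urllib.request.Request(
        f"https://api.{base_url}/execution/customization-profiles/{profile_id}",
        method="DELETE",
        headers={"Authorization": f"Basic {token}"},
    )


def deletion_succeeded(status_code: int) -> bool:
    """Interpret a 20* status code (assumed to mean 200-209) as success."""
    return 200 <= status_code <= 209


# Sending is left commented out because deletion cannot be undone:
# request = build_delete_request("<base-url>", "<username>", "<password>",
#                                "<customization-profile-ID>")
# with urllib.request.urlopen(request) as response:
#     print(deletion_succeeded(response.status))
```

Note that `urllib.request.urlopen` raises `urllib.error.HTTPError` for 4xx and 5xx responses, so a profile that is still attached to an execution context would surface as an exception rather than as a return value.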

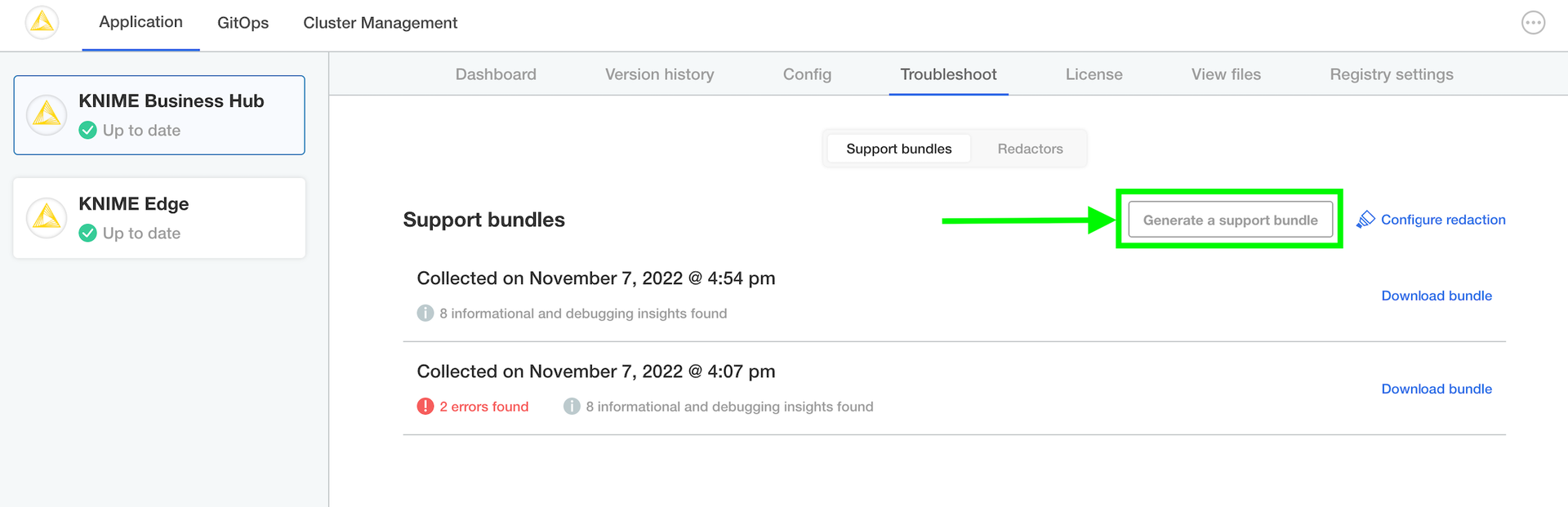

Advanced configuration

This section covers some of the configuration settings that are available for your KNIME Business Hub instance.

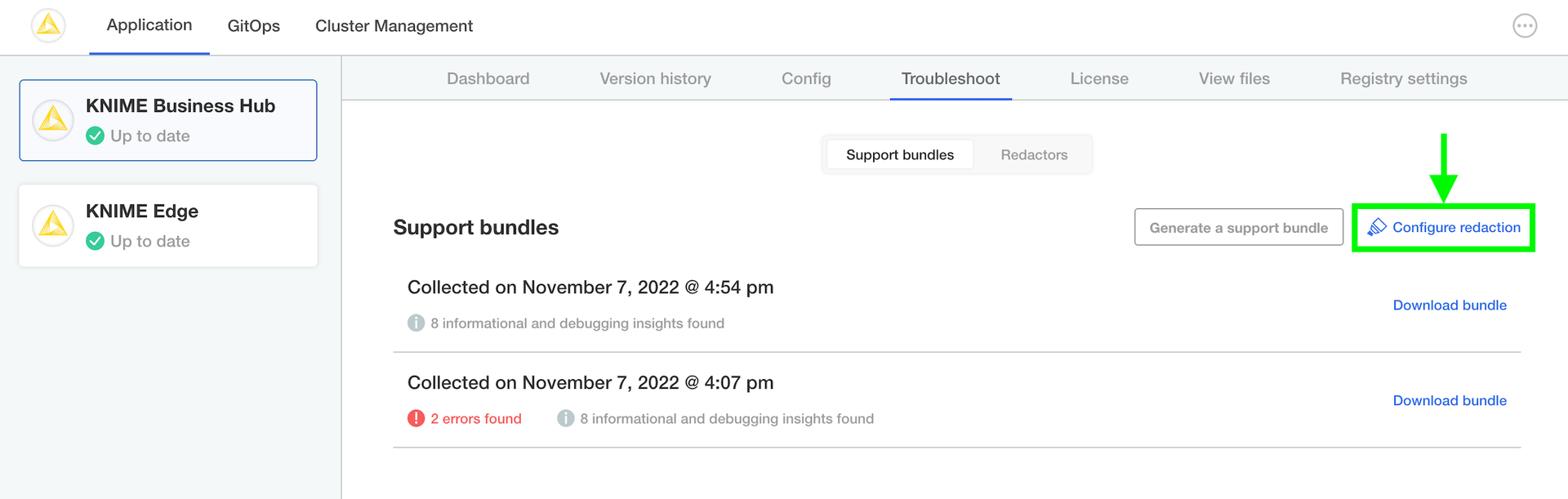

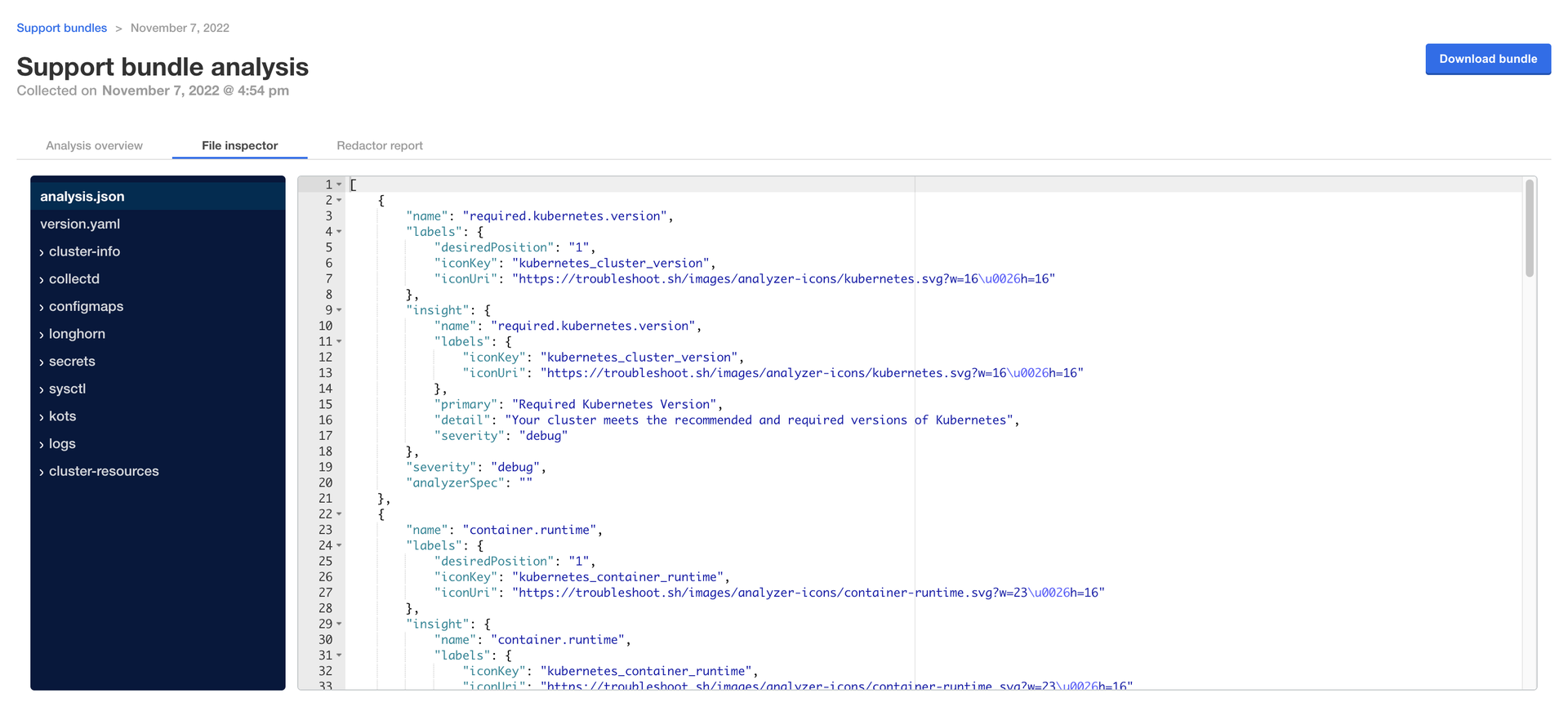

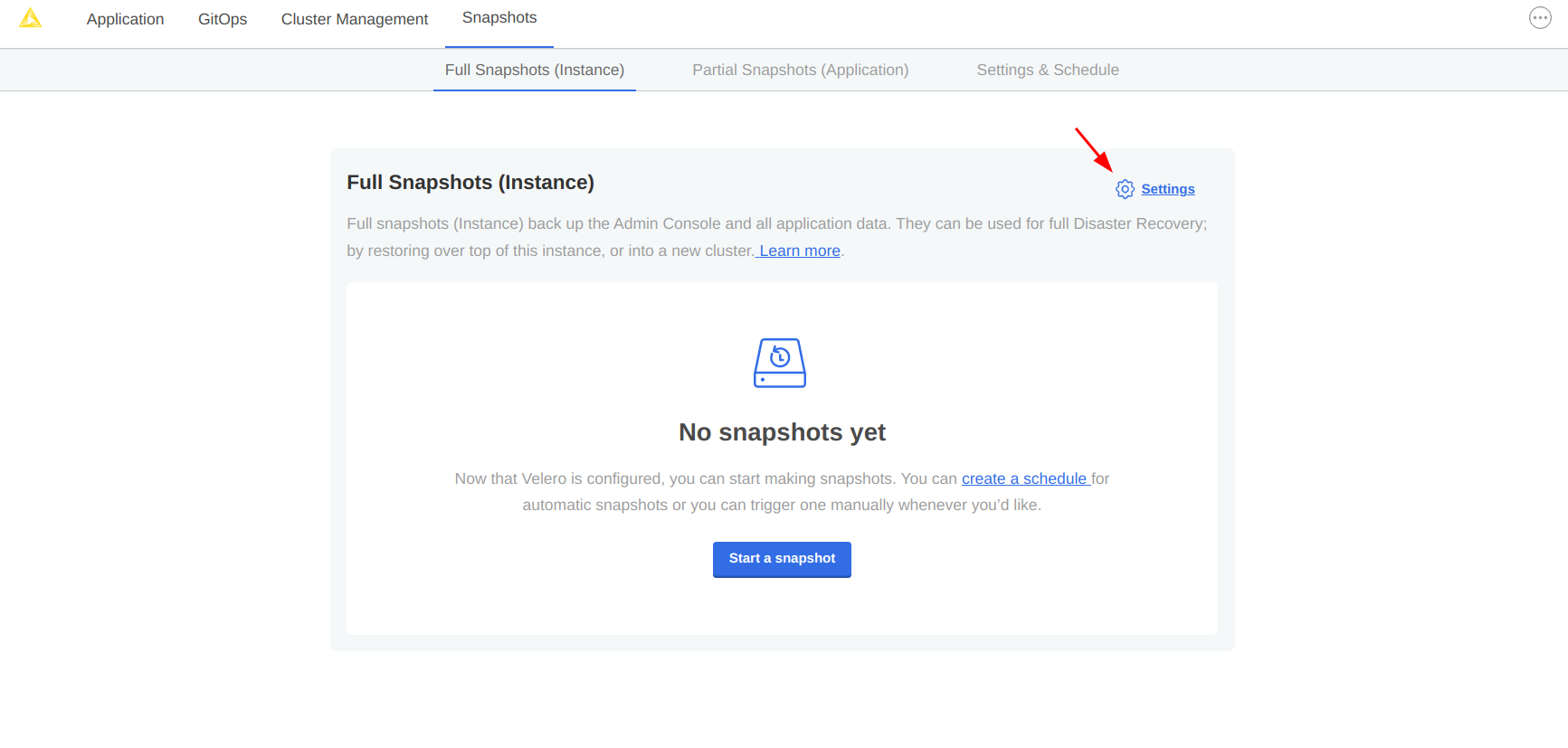

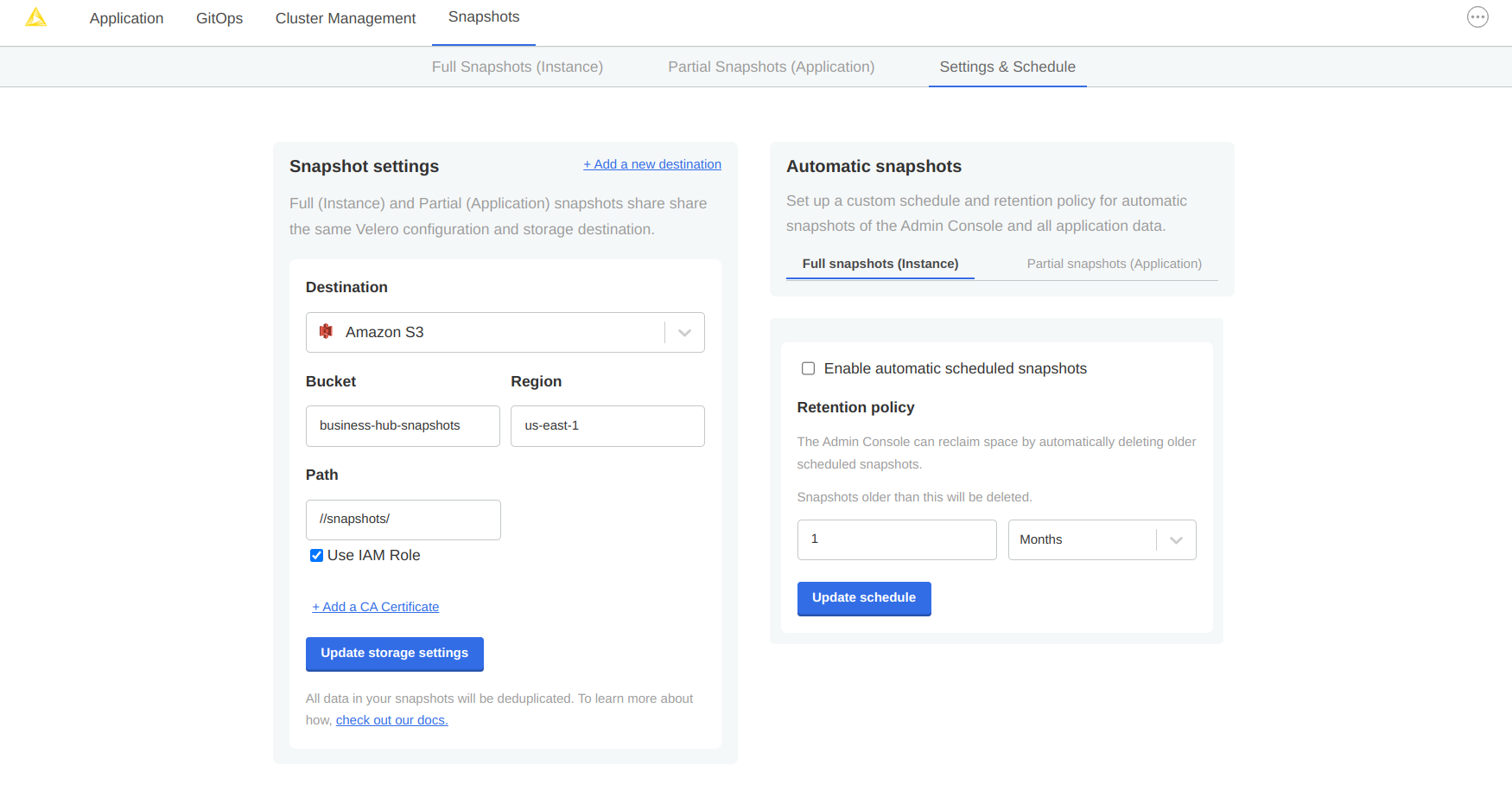

The following configurations are available in the KOTS Admin Console and can be changed after the installation and first minimal configuration steps are concluded successfully.

You can access the KOTS Admin Console via the URL and password you are provided in the output upon installation.

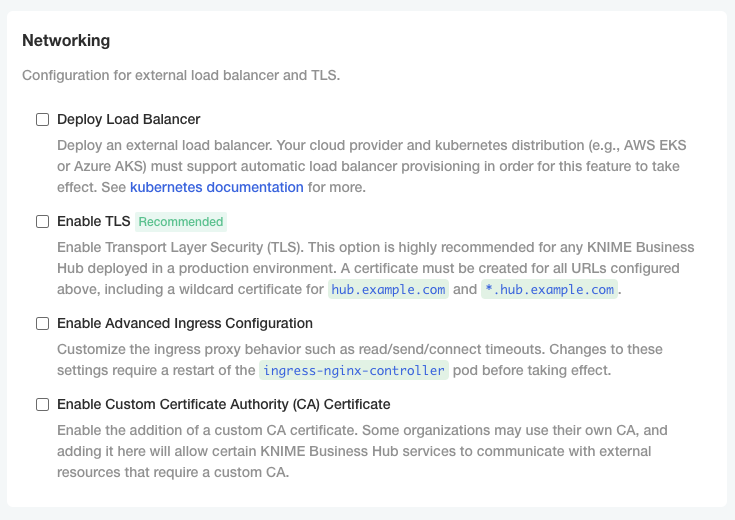

Configure networking

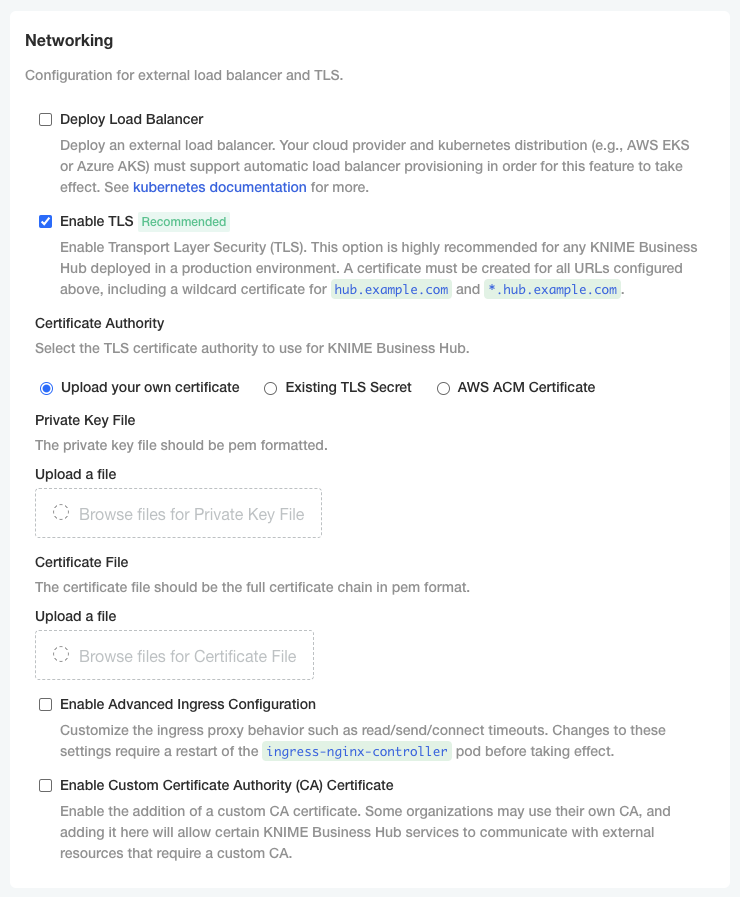

In the "Networking" section of the KOTS Admin Console you can:

- Deploy an external load balancer for traffic ingress: this feature takes effect only if your cloud provider and Kubernetes distribution support automatic load balancer provisioning.

- Enable Transport Layer Security (TLS): the encryption protocol that provides communications security is highly recommended, especially for KNIME Business Hub instances deployed in a production environment.

  Please be aware that if TLS is not enabled some HTTPS-only browser features will not be available. For example, it will not be possible for a user to copy generated application passwords.

- Enable advanced ingress configuration: you can customize the ingress proxy behavior, for example configuring the read/send/connect timeouts.

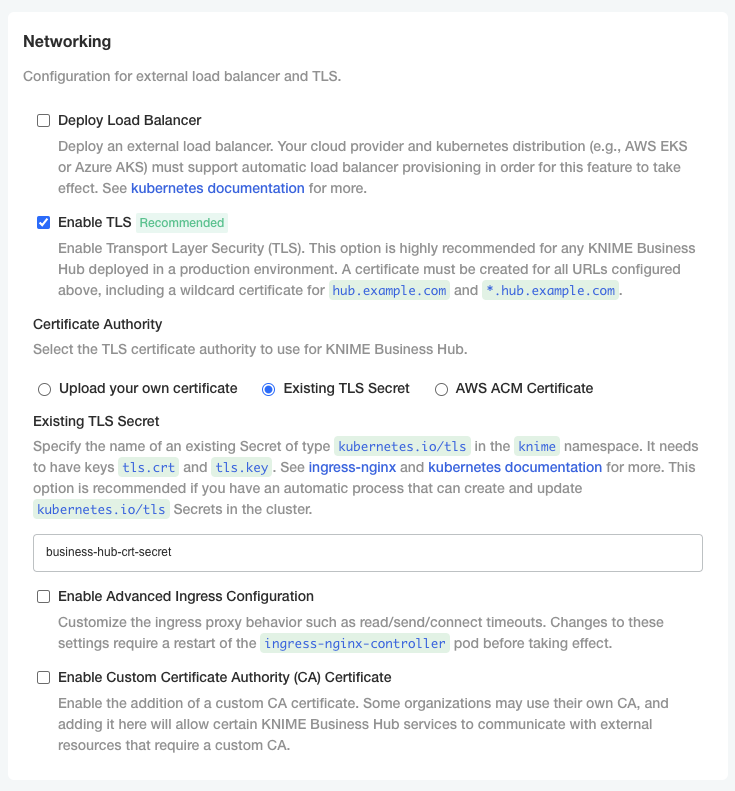

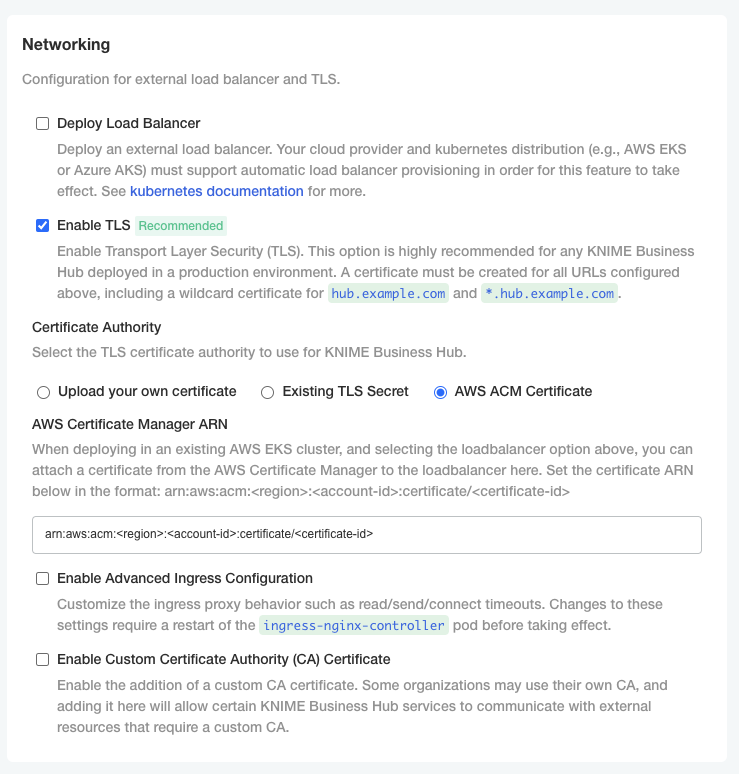

Configure TLS

If you enable the Transport Layer Security (TLS) you need to have a certificate that is valid for all the URLs defined during the installation.

We recommend creating a wildcard certificate for <base-url> and *.<base-url>, e.g. hub.example.com and *.hub.example.com.

Check Enable TLS in the "Networking" section of the KOTS Admin Console.

- Upload your own certificate: Select Upload your own certificate to be able to upload the certificate files.

  You will need an unencrypted private key file and a certificate file that contains the full certificate chain. In the certificate chain the server certificate needs to be the first in the PEM file, followed by the intermediate certificate(s). You usually can get a certificate from your company’s IT department or Certificate Authority (CA).

  Another possibility, if you have a public domain name, is to use letsencrypt to obtain a certificate.

  Both files need to be PEM formatted as requested by the ingress-nginx-controller (see the relevant documentation here).

- Existing TLS Secret: Select Existing TLS Secret to specify the name of an existing Secret of type kubernetes.io/tls in the knime namespace. It needs to have keys tls.crt and tls.key, which contain the PEM formatted full chain certificate and private key, respectively.

  This option is recommended if you have an automatic process that can create and renew kubernetes.io/tls Secrets in the cluster, like the cert-manager project.

  See ingress-nginx and kubernetes documentation on TLS secrets for more details.

- Select AWS ACM Certificate if, instead, you have deployed an AWS Elastic Load Balancer (ELB). In this case you can use AWS Certificate Manager (ACM) and set the certificate as an annotation directly on the loadbalancer. You can find more information in AWS documentation for ACM here.

  Once you have obtained the certificate Amazon Resource Name (ARN) in the form arn:aws:acm:<region>:<account-id>:certificate/<certificate-id>, insert the ARN in the corresponding field as shown in the image below.

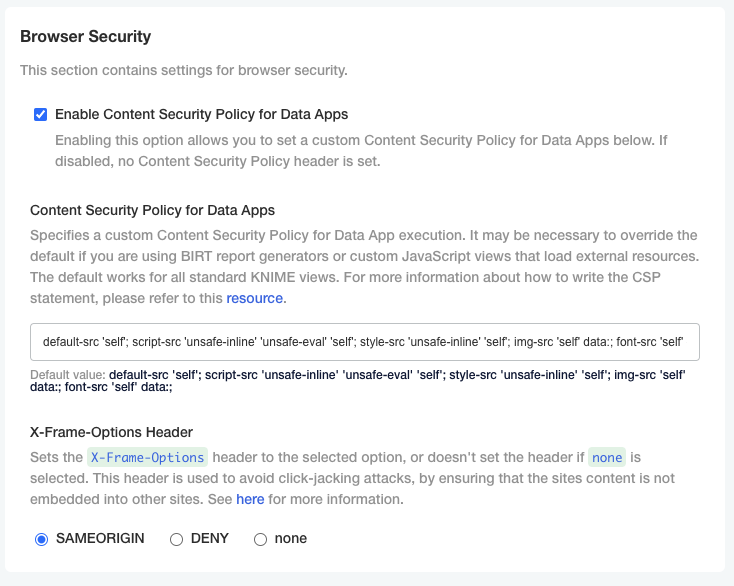

Configure Browser Security

In the "Browser Security" section of the KOTS Admin Console you can:

- Specify a custom Content Security Policy for Data App execution. It may be necessary to override the default if you are using custom JavaScript views that load external resources. The default works for all standard KNIME views. For more information about how to write the CSP statement, please refer to this resource.

- Configure the X-Frame-Options header being set by webapps. This header is used to avoid click-jacking attacks, by ensuring that the site’s content is not embedded into other sites. See here for more information.

Node affinity

Node affinity makes it possible to ensure that cluster resources intended for a specific task, e.g. execution resources, run on a specific set of nodes. There are two roles that each pod is grouped into: core and execution. Pods in the core group consist of KNIME Business Hub control plane resources, and pods in the execution group relate to execution contexts.

In order to use the node affinity feature in your KNIME Hub cluster, you can apply one or both of the following labels to nodes within your cluster:

- hub.knime.com/role=core

- hub.knime.com/role=execution

To label a node, you can execute the following command (where <node-name> is the name of the node you want to label):

kubectl label node <node-name> hub.knime.com/role=core

| For more information about labeling nodes, see the Kubernetes documentation. |

Pods will have to be restarted in order to be rescheduled onto labeled nodes. You can use the following example commands to restart the pods in a live cluster:

- kubectl rollout restart deployment -n istio-system

- kubectl rollout restart deployment -n hub

- kubectl rollout restart deployment -n knime

- kubectl delete pods --all --namespace hub-execution

  This command will restart all execution context pods.

There are a few things to note about the behavior of this feature:

- Node affinity uses a "best effort" approach to pod scheduling.

  - If one or both of the hub.knime.com/role labels are applied, Kubernetes will attempt to schedule cluster resources onto nodes based on their role.

  - If no nodes have a hub.knime.com/role label, pods will be scheduled onto any available node.

  - If labeled nodes reach capacity, pods will be scheduled onto any available node.

  - If a labeled node is shut down, pods will be rescheduled onto other nodes in the cluster, with a preference towards nodes that have a matching label.

  - Node affinity for KNIME Business Hub uses the preferredDuringSchedulingIgnoredDuringExecution approach (see the Kubernetes documentation for more details).

- It is possible to use only one of the labels above, e.g. labeling nodes for the execution role but not specifying any node labels for the core role.
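
For orientation, a soft (preferred) node affinity of the kind described above corresponds to a pod-spec stanza of roughly the following shape. This is a minimal sketch in Python that only builds and prints the structure. The field names follow the Kubernetes nodeAffinity schema, while the weight value and the helper name preferred_role_affinity are assumptions made for illustration and are not taken from the KNIME Business Hub deployment itself.

```python
import json


def preferred_role_affinity(role: str, weight: int = 100) -> dict:
    """Build a soft node-affinity stanza preferring nodes labeled hub.knime.com/role=<role>.

    The weight (Kubernetes accepts 1-100) is an illustrative assumption.
    """
    return {
        "nodeAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": weight,
                    "preference": {
                        "matchExpressions": [
                            {
                                "key": "hub.knime.com/role",
                                "operator": "In",
                                "values": [role],
                            }
                        ]
                    },
                }
            ]
        }
    }


if __name__ == "__main__":
    # Print the stanza for execution pods as JSON.
    print(json.dumps(preferred_role_affinity("execution"), indent=2))
```

Because the rule is a preference rather than a requirement, the scheduler can still place pods on unlabeled nodes when no matching node has capacity, which is consistent with the best-effort behavior listed above.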

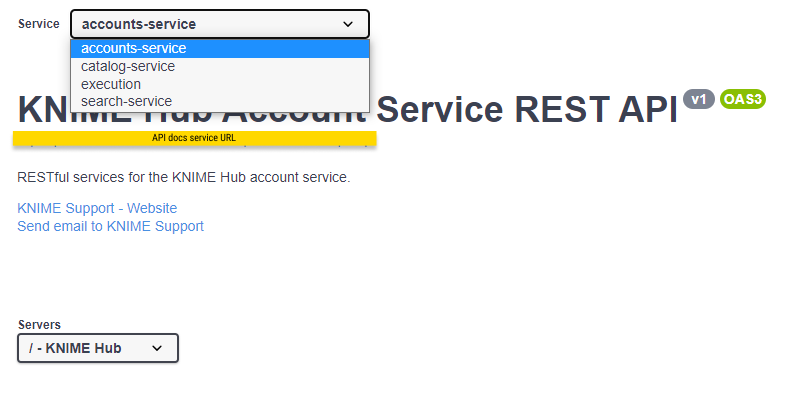

Create a collection

It is possible to create collections on your KNIME Business Hub instance.

KNIME Collections on KNIME Hub allow upskilling users by providing selected workflows, nodes, and links about a specific, common topic.

One example of a collection can be found on KNIME Community Hub here.

| This is a feature of KNIME Business Hub - Enterprise edition. |

In order to create a new collection page you need to be a global admin of your KNIME Business Hub instance.

The creation of a collection is possible via REST API, and a description of the different configurations can be found in your KNIME Business Hub API doc at the following URL:

api.<base-url>/api-doc/?service=catalog-service#/Collections

e.g. api.hub.example.com/api-doc/?service=catalog-service#/Collections.

In order to create a collection, the items (i.e. workflows and nodes) that are collected need to be stored and accessible on the same KNIME Business Hub instance where the collection is created.

To create the collection, you then need to build a JSON file that follows the schema available in the API doc, in the Collections section, under the POST /collections request description.

The following is an example that would allow you to build a collection, similar to the one available on KNIME Community Hub here.

In the first section you can for example set up a title, a description, a so-called hero, which is the banner image at the top right of the example collection page, and tags:

    {
      "title": "Spreadsheet Automation",
      "description": "On this page you will find everything to get started with spreadsheet automation in KNIME",
      "ownerAccountId": "account:user:<global-admin-user-id>",
      "hero": {
        "title": "New to KNIME?",
        "description": "Get started with <strong>KNIME Analytics Platform</strong> to import all the examples and nodes you need for spreadsheet automation right now!",
        "actionTitle": "Download",
        "actionLink": "https://www.knime.com/downloads"
      },
      "tags": [
        "Excel",
        "XLS"
      ],

Next you can add different sections and subsections, each with a title and a description, choose a layout, and select the itemType such as Space, Component, Workflow, Node, Extension, or Collection. For each of these items you will need to provide the id under which they are registered in your Business Hub installation.

The id for workflows, spaces, components, and collections can be built by taking the last part of their URL, after the ~, and adding a * at the beginning.

For example, the following workflow on the KNIME Community Hub has URL https://hub.knime.com/-/spaces/-/latest/~1DCip3Jbxp7BWz0f/ so its id would be *1DCip3Jbxp7BWz0f.
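
The rule above can be sketched as a small helper. This is only an illustration of the string manipulation: the function name hub_item_id is hypothetical, and the handling of any path segments that follow the id is an assumption.

```python
from urllib.parse import urlparse


def hub_item_id(url: str) -> str:
    """Return '*' followed by the part of the URL path after the last '~'."""
    path = urlparse(url).path
    marker = path.rfind("~")
    if marker == -1:
        raise ValueError(f"No '~<id>' segment found in URL: {url}")
    # Keep only the segment directly after '~' (drops a trailing slash
    # or any further path segments, which is an assumption).
    item_id = path[marker + 1:].split("/", 1)[0]
    if not item_id:
        raise ValueError(f"Empty id after '~' in URL: {url}")
    return "*" + item_id


if __name__ == "__main__":
    print(hub_item_id("https://hub.knime.com/-/spaces/-/latest/~1DCip3Jbxp7BWz0f/"))
```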

The id for nodes and extensions must instead be retrieved with a REST call, for example to the search endpoint of your KNIME Business Hub instance.

      "sections": [
        {
          "title": "Workflow examples",
          "description": "Some subtitle text here. Can have <strong>bold format</strong>",
          "iconType": "Workflow",
          "subsections": [
            {
              "title": "How to do basic spreadsheet tasks in KNIME",
              "description": "Some examples on how to do common things",
              "layout": "SingleColumn",
              "numberOfTeaseredItems": 2,
              "items": [
                { "title": "Click Here!", "itemType": "Link", "absoluteUrl": "https://knime.com" },
                { "id": "*SJW5zSkh1R3T-DB5", "itemType": "Space" },
                { "id": "*vpE_LTbAOn96ZOg9", "itemType": "Component" },
                { "id": "*MvnABULBO35AQcAR", "itemType": "Workflow" },
                { "showDnD": true, "id": "*yiAvNQVn0sVwCwYo", "itemType": "Node" },
                { "id": "*bjR3r1yWOznPIEXS", "itemType": "Extension" },
                { "id": "*QY7INTkMW6iDj7uC", "itemType": "Collection" }
              ]
            }
          ]
        }
      ]
    }

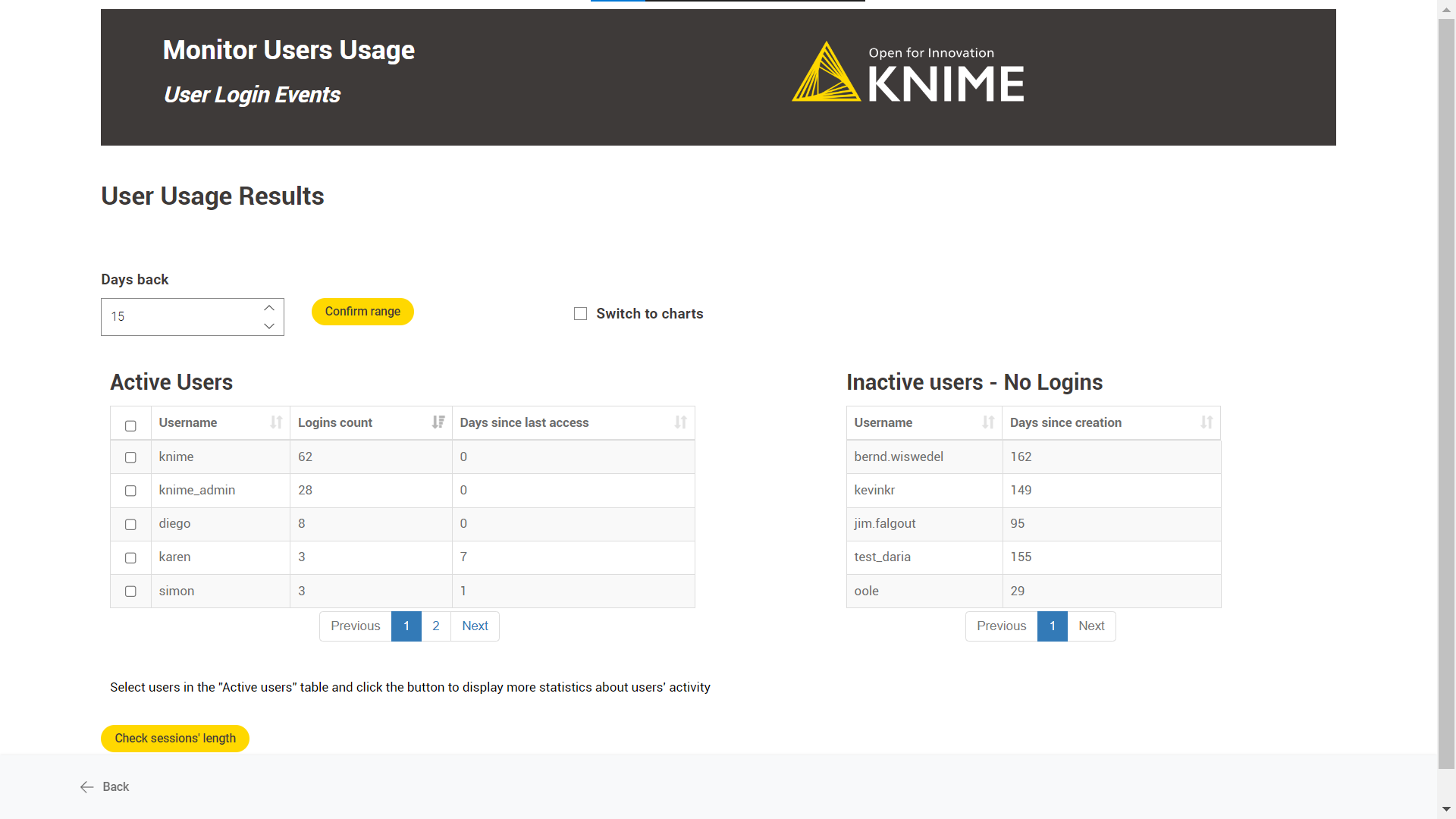
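
As a rough illustration of how such a JSON document could be submitted, the following sketch prepares (but does not send) a POST /collections request using only the Python standard library. Several details are assumptions and should be checked against the API doc of your instance: that the catalog service is reachable directly under https://api.<base-url>, that it accepts HTTP Basic authentication with an application password ID and password, and the helper name build_collection_request. The payload is a shortened version of the example above.

```python
import base64
import json
import urllib.request


def build_collection_request(api_base: str, payload: dict,
                             app_password_id: str,
                             app_password: str) -> urllib.request.Request:
    """Prepare a POST /collections request without sending it."""
    # Basic authentication with an application password is an assumption.
    credentials = f"{app_password_id}:{app_password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(
        url=f"{api_base.rstrip('/')}/collections",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/json",
        },
    )


# Shortened payload; replace the placeholders with values from your instance.
payload = {
    "title": "Spreadsheet Automation",
    "description": "On this page you will find everything to get started "
                   "with spreadsheet automation in KNIME",
    "ownerAccountId": "account:user:<global-admin-user-id>",
    "tags": ["Excel", "XLS"],
    "sections": [],
}

request = build_collection_request(
    "https://api.hub.example.com", payload,
    "<application-password-id>", "<application-password>",
)
# To actually send the request: urllib.request.urlopen(request)
```

Keeping the request construction separate from the network call makes it easy to inspect the URL, headers, and body before anything is sent to the Hub.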

Administrator workflows

The workflows described in this section of the documentation aim to help KNIME Business Hub administrators, or heavy KNIME Business Hub users, clean up, monitor, and better administrate their Business Hub instance.

These workflows save time when monitoring or administrating KNIME Business Hub by eliminating manual work and centralizing information from various applications.

Workflows overview

The user can access the workflows on the KNIME Community Hub in a public space owned by KNIME. Additionally, the user can find them on a dedicated collection page. To use them, download the workflows from the Community Hub and upload them into an existing team space in your KNIME Business Hub installation.

Business Hub has three types of user roles (global admin, team admin, and team member). All the users with access to the “Admin Space” can run the workflows. The user’s role defines their allowed actions when running the different workflows.

The workflows can be run as data applications on-demand or directly scheduled using the Business Hub UI. First, you must deploy the workflows as a Data app or Schedule.

Below is the list of workflows within the “Admin Space”. Click on them to read further details:

Requirements and prerequisites

Requirements

- The user needs to exist and be at least a member (no matter the user’s role) of the team where the “Admin Space” is located.

- The user also needs at least view access to the “Admin Space”.

Prerequisites

- The user should be familiar with new concepts on the Hub. See the KNIME Business Hub User Guide.

- The user needs to create an application password specific to their account on KNIME Business Hub, which most applications will use.

Discard Failed Jobs

Overview

This workflow aims to keep the KNIME Business Hub installation clean by discarding failed jobs from any kind of execution run by KNIME Business Hub users.

Workflow specs

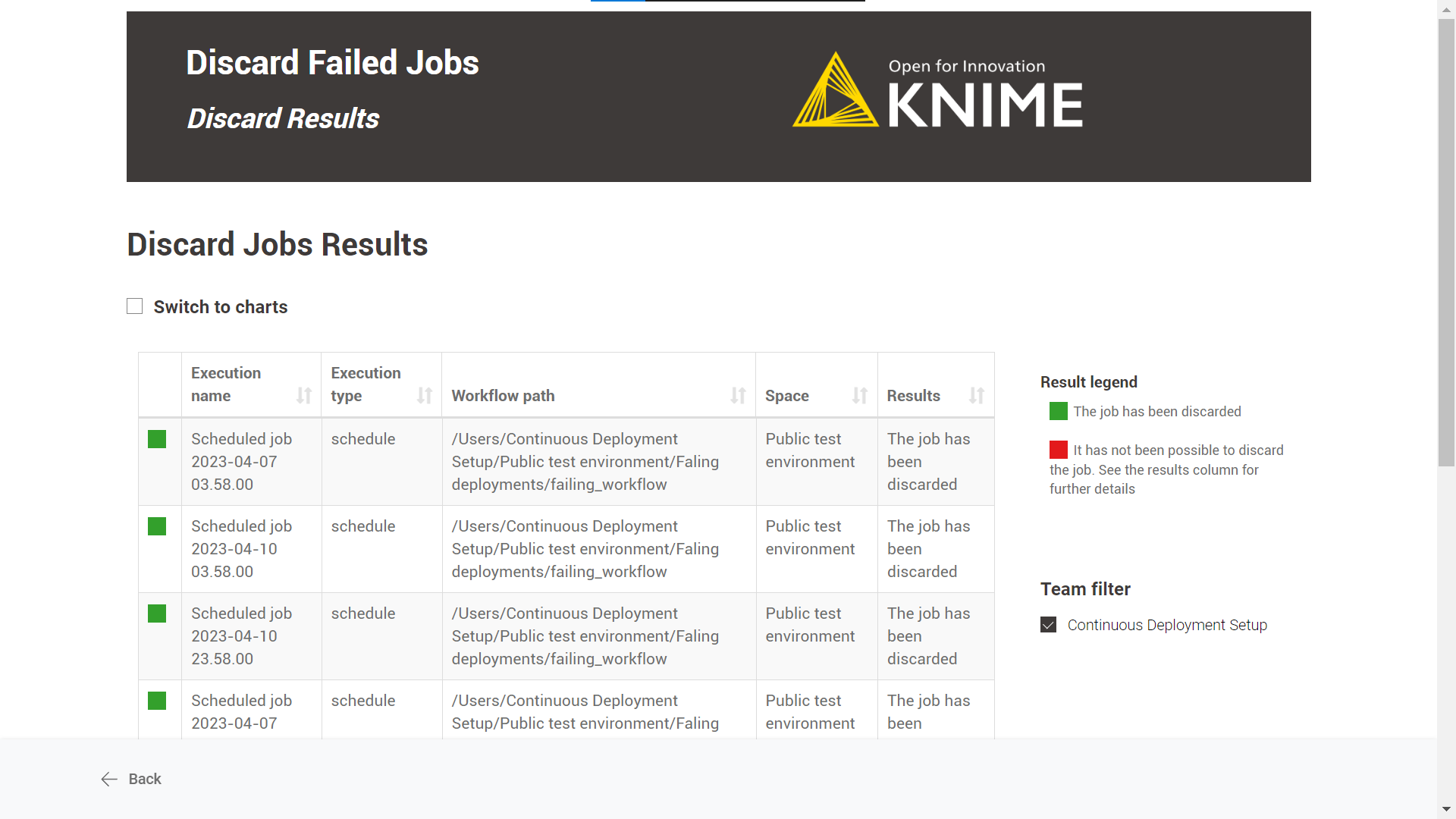

Without applying any time range, the workflow discards all failed jobs for the following execution types: ad-hoc executions, triggers, data apps, schedules or shared deployments.

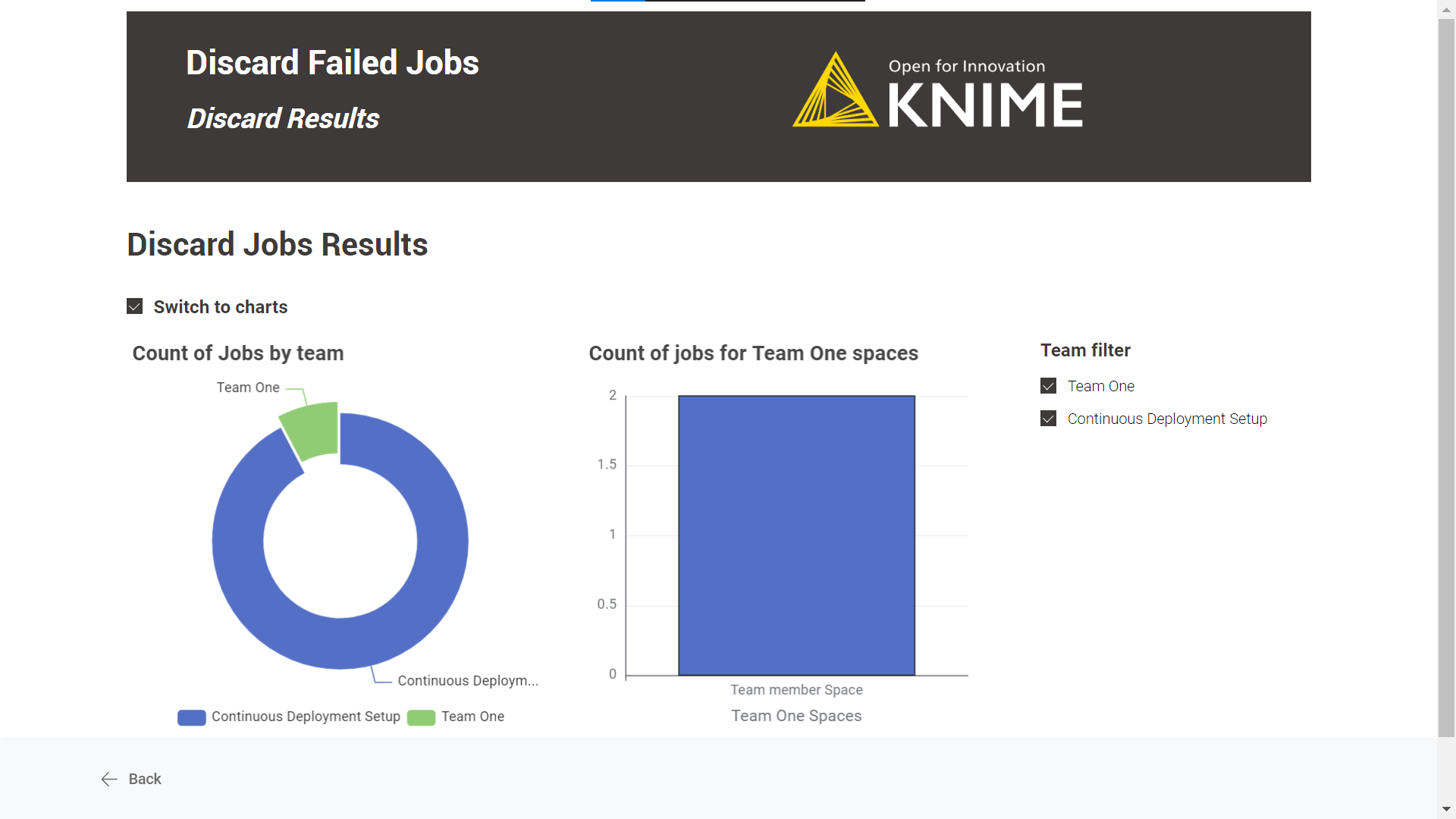

Failed jobs are all those in any of the following states after execution: “Execution_Failed”, “Execution_Failed_With_Content”, “Execution_Canceled”, or “Vanished”.
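
The state-based classification can be sketched as follows. This is not the workflow's implementation: the job records are hypothetical dictionaries with "id" and "state" keys, and the comparison is case-insensitive because the exact casing returned by the Hub is not stated here.

```python
# States treated as failed, as listed in this section (upper-cased).
FAILED_STATES = frozenset({
    "EXECUTION_FAILED",
    "EXECUTION_FAILED_WITH_CONTENT",
    "EXECUTION_CANCELED",
    "VANISHED",
})


def is_failed(job: dict) -> bool:
    """Return True if the job's state is one of the failed states."""
    return str(job.get("state", "")).upper() in FAILED_STATES


def split_jobs(jobs: list) -> tuple:
    """Split jobs into (failed, not_failed), preserving the input order."""
    failed = [job for job in jobs if is_failed(job)]
    not_failed = [job for job in jobs if not is_failed(job)]
    return failed, not_failed


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    sample = [
        {"id": "job-1", "state": "Execution_Failed"},
        {"id": "job-2", "state": "Executed"},
        {"id": "job-3", "state": "Vanished"},
    ]
    failed, not_failed = split_jobs(sample)
    print([job["id"] for job in failed])
    print([job["id"] for job in not_failed])
```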

The failed jobs a user can discard depend on the role of the user running the workflow:

Global admin: can discard all failed jobs in any team and space from any execution type.

Team admin: can discard all failed jobs, from any execution type, of the teams they administer.

Team member: can discard only self-generated failed jobs, from any execution type, in any team and space of which they are a member (regardless of the user’s rights on space items). This also includes deployments shared with the user from teams where the user is not a member.

Deployment configuration

Whether you deploy the workflow as a data app or as a schedule, provide the following information:

- Hub URL: The URL of your KNIME Business Hub instance, e.g. “https://my-business-hub.com”.

- Application Password ID: User-associated application password ID.

- Application Password: User-associated application password.

If you want to know how to create an application password, follow these steps.

Data app

After deploying the workflow as a Data App, you can run it. To do so, follow these instructions.

Below are the steps for the data app:

-

Business Hub connection: you need to connect to the KNIME Business Hub instance through the previously generated application password.

-

Select Job State: it is possible to customize which types of failed jobs you want to discard. Max 4 job states should be available: “Execution_Failed”, “Execution_Failed_With_Content”, “Execution_Canceled”, or “Vanished”.

-

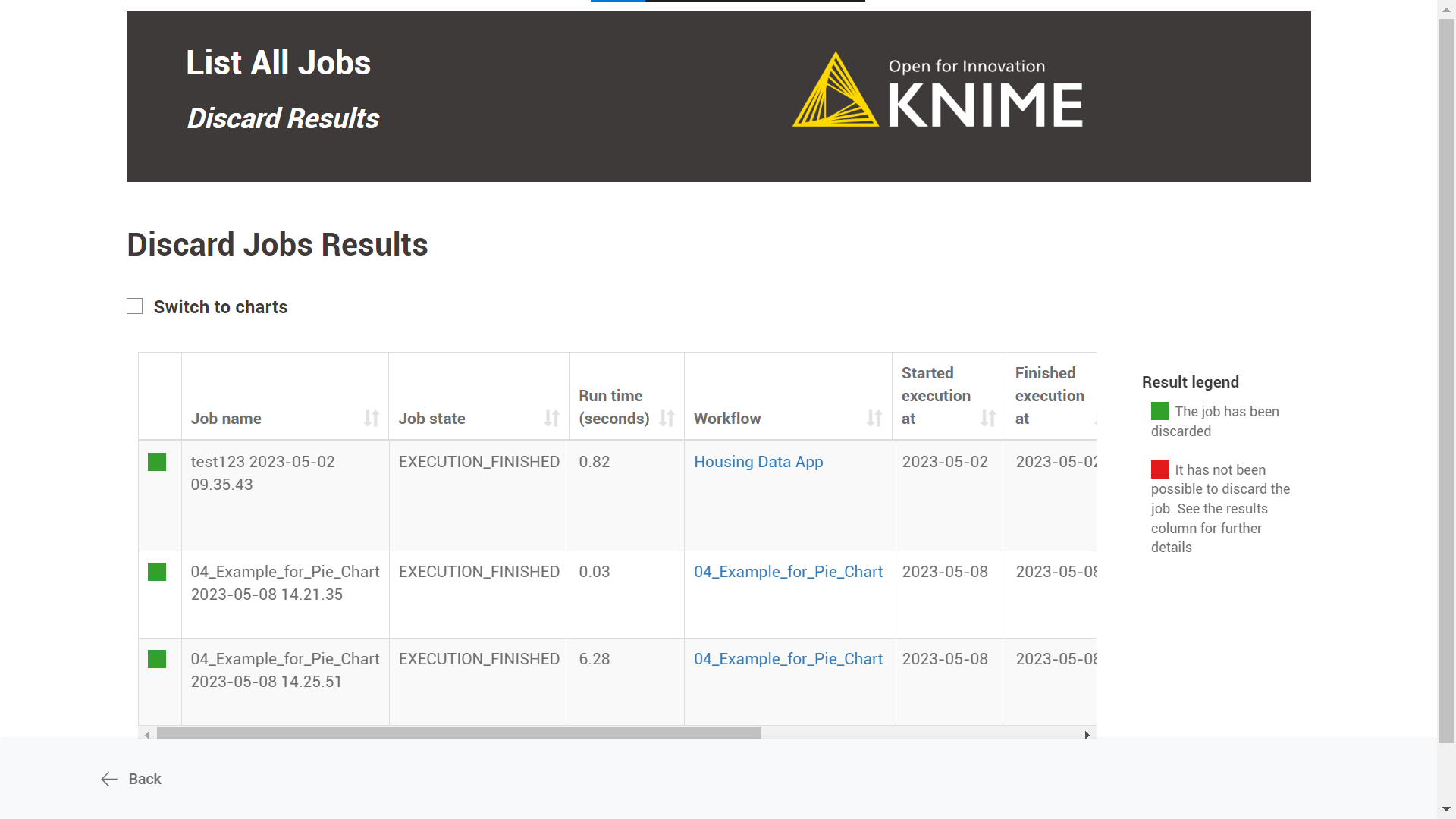

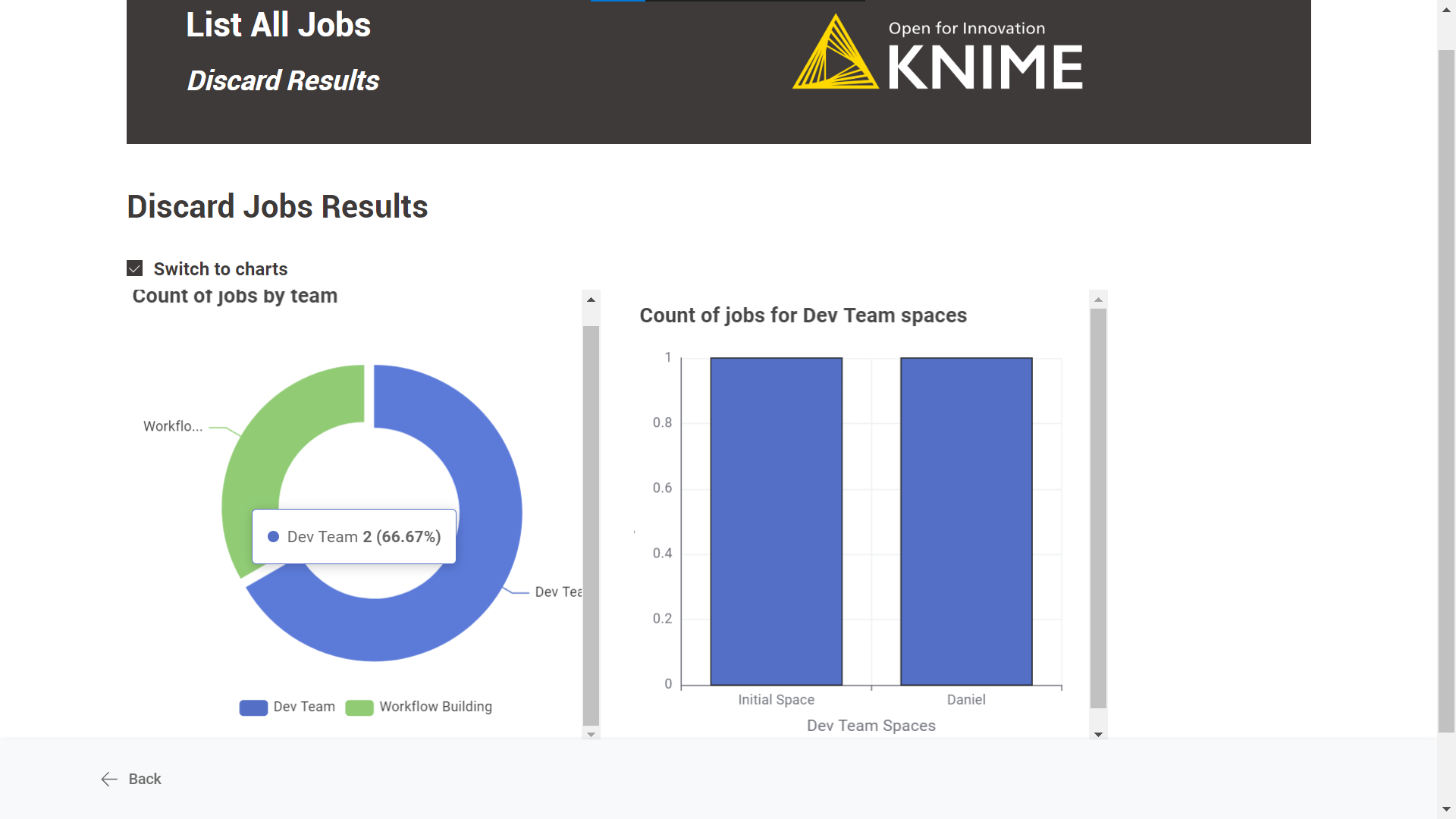

Discard Results: A table with the discard jobs results is displayed by default. There is also the possibility to see an illustrated version of the table by selecting the “Switch to charts” option.

Schedule

-

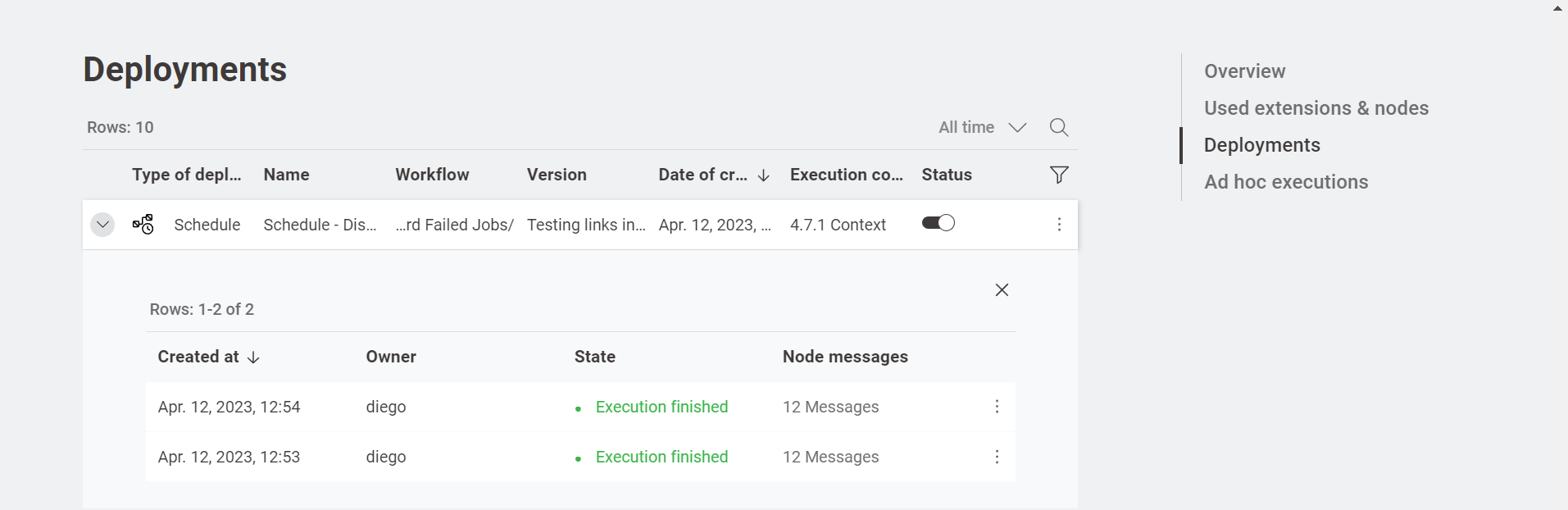

Define when the workflow should be executed through the schedule options. For more information, follow this guide.

-

Ensure the Schedule Deployment is active (Status column).

-

In the Team Deployments page or the workflow page, Deployments section, you can check the number of executions and their status.

List All Jobs

Overview

This workflow lists all the jobs accessible to the user, excluding failed ones.

Additionally, the user can use the workflow to easily pinpoint any irregularities in the workflow’s execution or sort jobs that have been in an execution state for an extended period.

Furthermore, it is possible to select the jobs and discard them.

Workflow specs

Without applying any time range, the workflow lists all jobs for the following execution types: ad-hoc executions, triggers, data apps, schedules or shared deployments.

Only jobs that have not failed are considered. This excludes any job in one of the following states: “Execution_Failed”, “Execution_Failed_With_Content”, “Execution_Canceled”, or “Vanished”.

The job information a user can retrieve depends on the role of the user running the deployed workflow:

Global admin: can retrieve all workflow jobs in any team and space.

Team admin: can retrieve all workflow jobs within the teams they administer.

Team member: can retrieve workflow jobs from any team and space of which they are a member (regardless of the user’s rights on space items). This also includes shared deployments from teams where the user is not a member.

The jobs a user can discard depend on the role of the user running the deployed workflow:

Global admin: can discard all jobs in any team and space from any execution type.

Team admin: can discard all jobs, from any execution type, of the teams they administer.

Team member: can discard only self-generated jobs, from any execution type, in any team and space of which they are a member (regardless of the user’s rights on space items). This also includes deployments shared with the user from teams where the user is not a member.
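
The role-based discard rules above can be summarized in a few lines of logic. The following is a simplified sketch, not the workflow's actual implementation: the User and Job classes and their fields are hypothetical, and team and space membership is reduced to whether the user created the job.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    is_global_admin: bool = False
    # Names of the teams this user administers (hypothetical field).
    admin_of_teams: set = field(default_factory=set)


@dataclass
class Job:
    creator: str  # name of the user who generated the job
    team: str     # team owning the workflow or deployment


def can_discard(user: User, job: Job) -> bool:
    """Apply the role rules: global admin, then team admin, then own jobs."""
    if user.is_global_admin:
        return True
    if job.team in user.admin_of_teams:
        return True
    # Team members may only discard jobs they generated themselves,
    # including jobs from deployments shared with them by other teams.
    return job.creator == user.name


if __name__ == "__main__":
    alice = User("alice", admin_of_teams={"finance"})
    print(can_discard(alice, Job(creator="bob", team="finance")))
    print(can_discard(alice, Job(creator="bob", team="marketing")))
```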

Deployment configuration

This workflow can be deployed as a data app.

You can provide the following information to deploy the workflow:

- Hub URL: The URL of your KNIME Business Hub instance, e.g. “https://my-business-hub.com”.

- Application Password ID: User-associated application password ID.

- Application Password: User-associated application password.

If you want to know how to create an application password, follow these steps.

Data app

After deploying the workflow as a Data App, you can run it. To do so, follow the instructions.

Below are the steps for the data app:

-

Business Hub connection: you need to connect to the KNIME Business Hub instance through the previously generated application password.

-

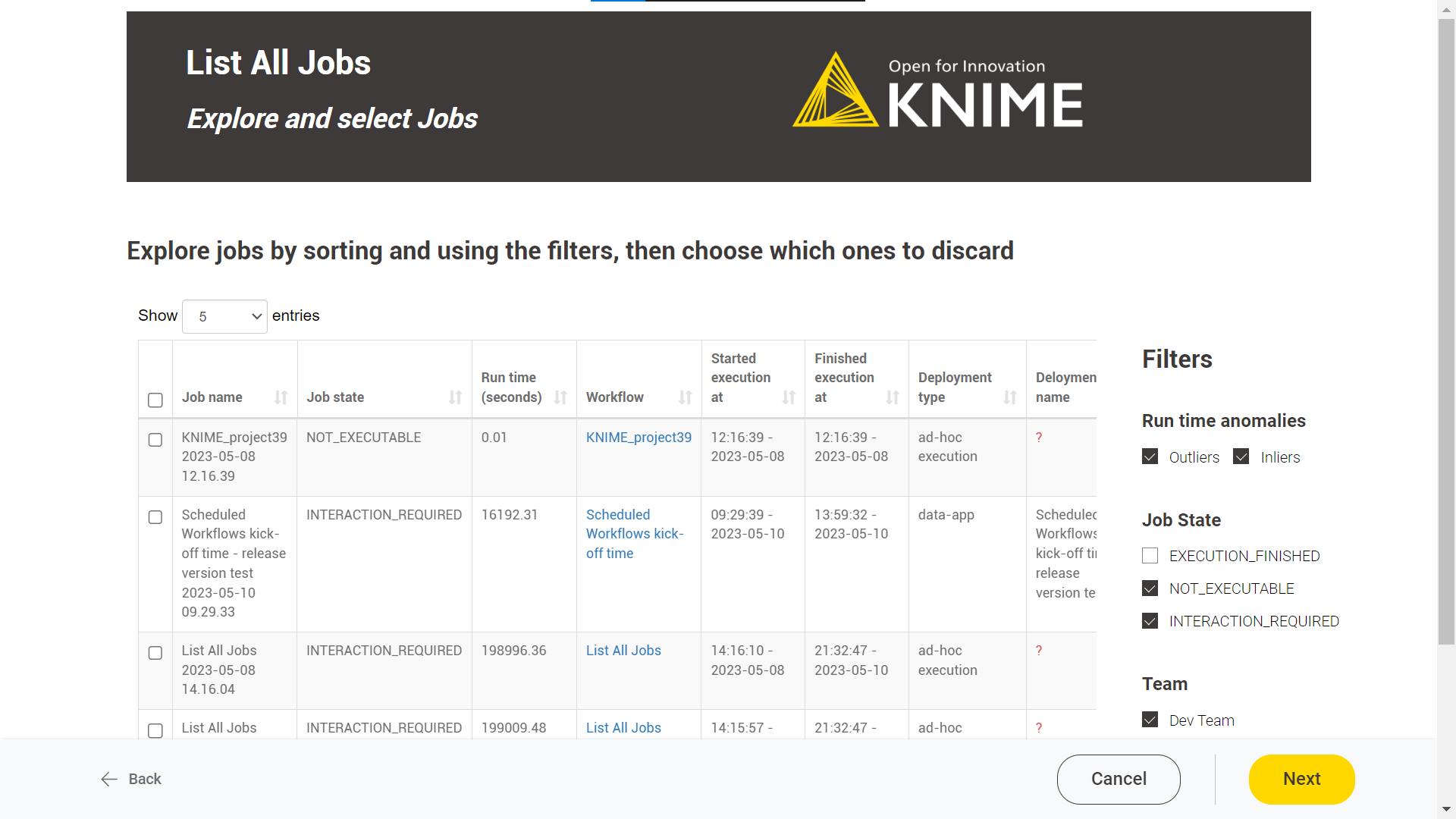

Explore and select Jobs: this feature displays a table of all available jobs for the user. Each job is listed with its name, state, runtime information, and corresponding workflow deployment.

Two exceptions related to the job’s deployment information:

-

When ad-hoc executions generate jobs, the deployment name is not available.

-

If the workflow is executed when a deployment that has generated a job is not available anymore in the KNIME Business Hub (because it has been discarded or, in the case of schedules, the deployment has ended), retrieving the deployment information is not possible. It displays a message in the "Deployment name" column: "This deployment is no longer available."

On the right side, the user can find three filters:

- Run time anomalies: it detects outliers using the Numeric Outliers node. As a user, you can focus on "Outliers", which will help you identify jobs that take significantly longer or shorter to execute than others within the same workflow deployment.

- Job state: it allows filtering by specific job states. The available job states are shown based on the currently listed jobs.

- Team: the user can filter by team.
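
To give an idea of what the run time anomalies filter looks for, the sketch below flags jobs whose runtime lies outside an interquartile-range (IQR) band computed per deployment. It is an approximation for illustration, not the Numeric Outliers node itself: the multiplier of 1.5, the quantile method, the minimum group size, and the job record keys ("id", "deployment", "runtime_s") are all assumptions.

```python
from collections import defaultdict
from statistics import quantiles


def runtime_outliers(runtimes: list, k: float = 1.5) -> list:
    """Return indices of values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    if len(runtimes) < 4:
        return []  # too few jobs to estimate quartiles meaningfully
    q1, _, q3 = quantiles(runtimes, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, value in enumerate(runtimes) if value < low or value > high]


def outliers_by_deployment(jobs: list, k: float = 1.5) -> list:
    """Return ids of jobs that are runtime outliers within their own deployment."""
    groups = defaultdict(list)
    for job in jobs:
        groups[job["deployment"]].append(job)
    flagged = []
    for group in groups.values():
        for index in runtime_outliers([job["runtime_s"] for job in group], k):
            flagged.append(group[index]["id"])
    return flagged


if __name__ == "__main__":
    # Hypothetical runtimes in seconds for a single deployment.
    runtimes = [10, 11, 12, 11, 10, 12, 11, 120]
    jobs = [
        {"id": f"job-{i}", "deployment": "daily-report", "runtime_s": r}
        for i, r in enumerate(runtimes)
    ]
    print(outliers_by_deployment(jobs))
```

Grouping by deployment before computing the quartiles matters because runtimes are only comparable between jobs of the same workflow deployment.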


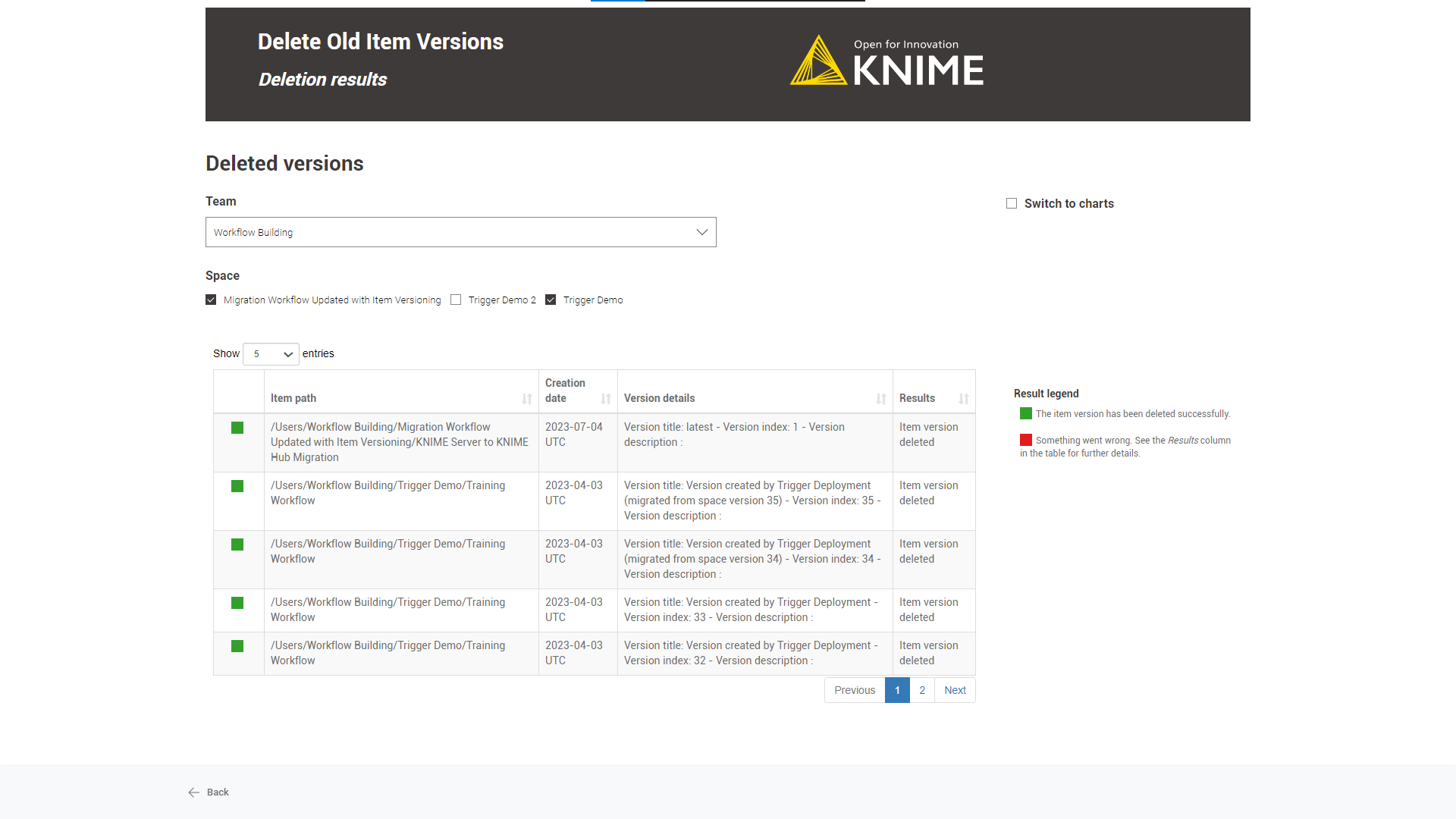

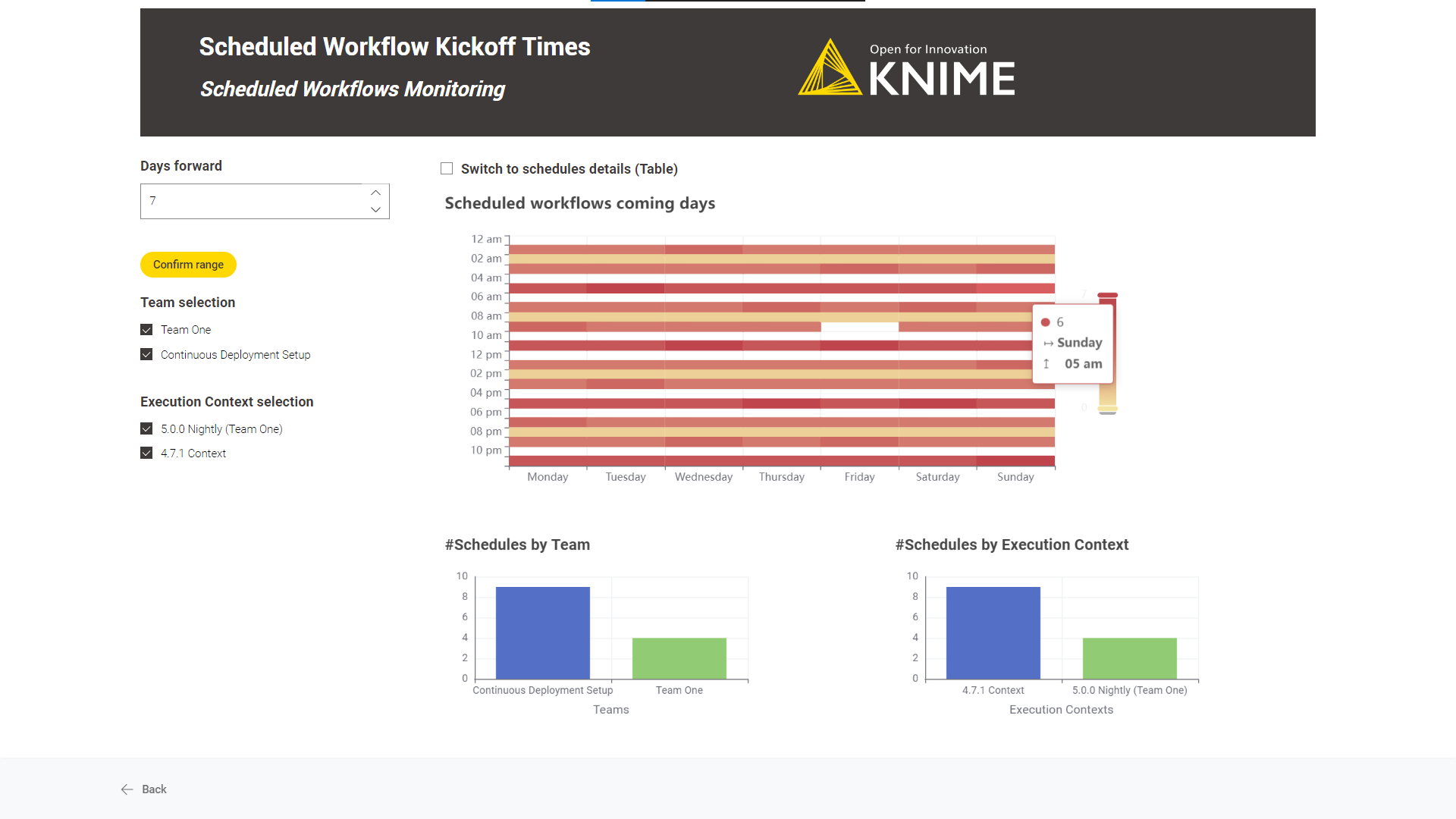

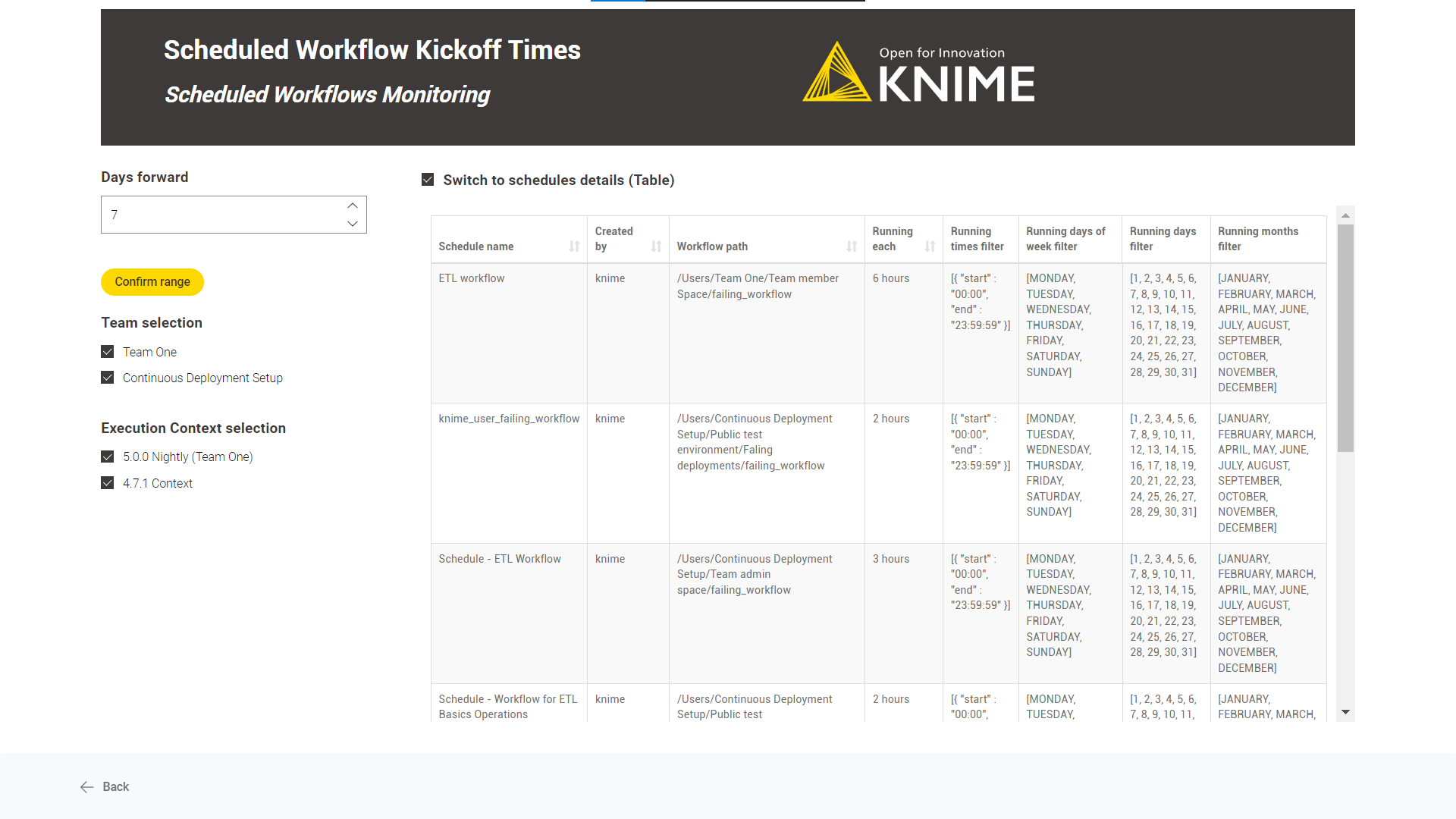

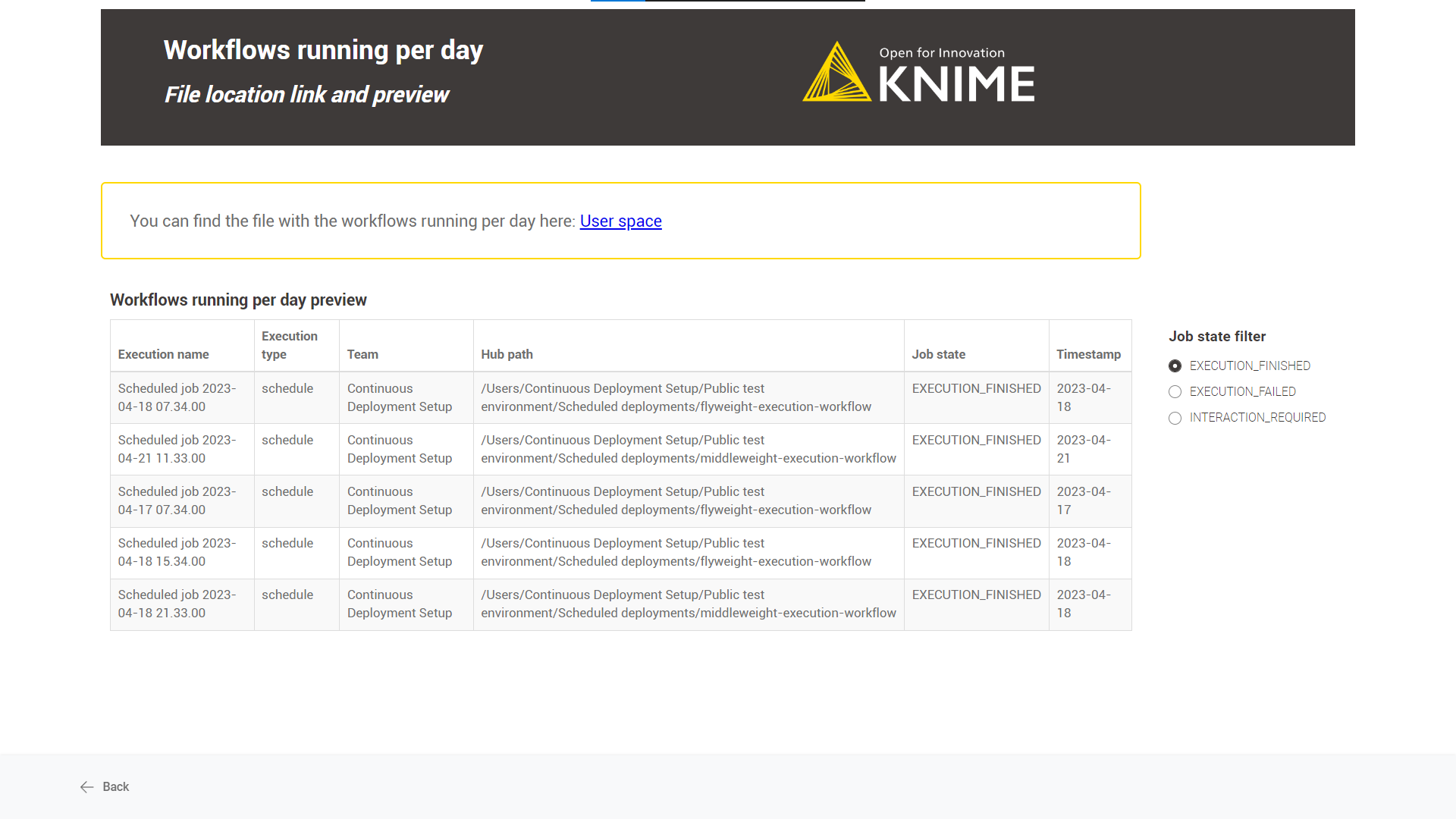

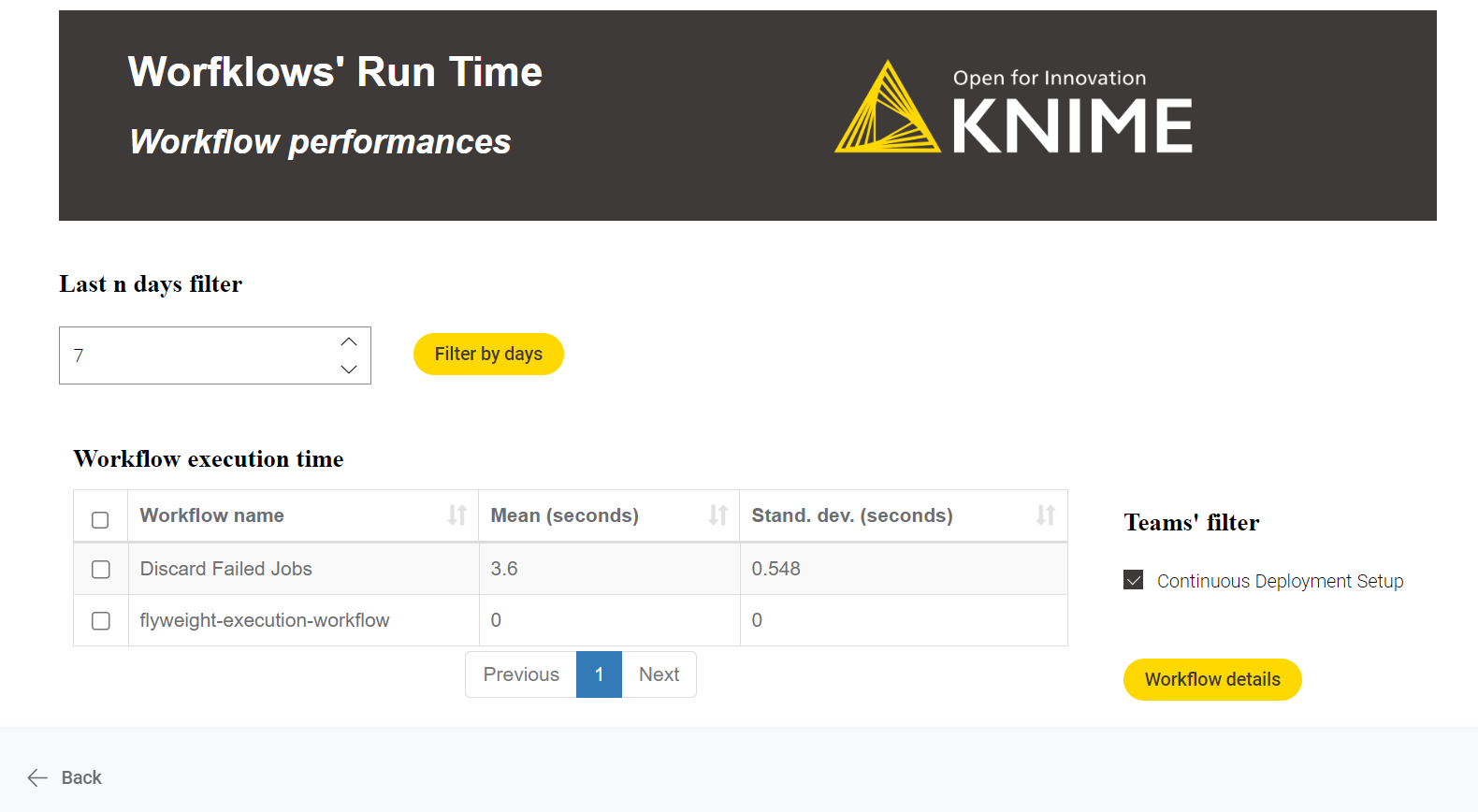

-