Collaborate with KNIME Team

KNIME Team

KNIME Team is a paid service offered on KNIME Community Hub.

This allows you to create a team on KNIME Hub to collaborate on a project with your colleagues. Additionally, you can add execution capabilities to your team. This will allow the team members to schedule and automate the execution of workflows on KNIME Community Hub.

A team is a group of users that work together on shared projects. Specific Hub resources can be owned by a team (e.g., spaces and the contained workflows, files, or components) so that the team members will have access to these resources.

You can purchase a team and choose its size, that is, the number of users who will be able to access the team resources, as well as the disk space you need to save your team's workflows, data, and components.

The team can own public or private spaces. For more details, see the section Team owned spaces.

Create a team

To create a team, you need to subscribe to a KNIME Team. To do so, sign in with your KNIME Hub account and navigate to Pricing in the top right corner of the page, or go to the KNIME Hub pricing page. There you can proceed with the subscription to a KNIME Team.

To subscribe to a KNIME Team, you need to have a KNIME user account.

You will be asked to provide the details of the account and your payment information. Then, you can choose your subscription plan. You can choose how much disk storage and how many users you would like to purchase. The basic plan consists of 30GB of disk storage and a total of 3 users. Please note that the subscription will be automatically renewed every month if not canceled, and that users and disk storage can be adjusted later. Your usage will be prorated for the next billing cycle. To change or cancel your subscription, follow the instructions in the section Manage team subscription.

Once you have successfully purchased a team, you can assign it a name and start adding members to your team. The number of members that can be added to the team is limited to the number of users that you purchased.

There are two types of roles a user can be assigned when part of a team:

Administrator. A team administrator can:

Member. A team member can:

- View the team page, with members list and spaces

- Create private spaces

- Modify and delete public and private spaces

- Upload/download items to/from public and private spaces

- Delete items from public and private spaces

The team creator is automatically assigned the administrator role and can promote any of the team members to administrators. In order to do so, please follow the instructions in the section Manage team members.

Team owned spaces

A team can own an unlimited number of both public and private spaces.

Team owned public spaces: The items stored in a team's public space are accessible to everyone and appear in search results on KNIME Community Hub. Only team members have upload rights to the team's public spaces.

Team owned private spaces: Only team members have read access to the items stored in a team's private space. This allows KNIME Community Hub users who are part of a team to collaborate privately on a project.

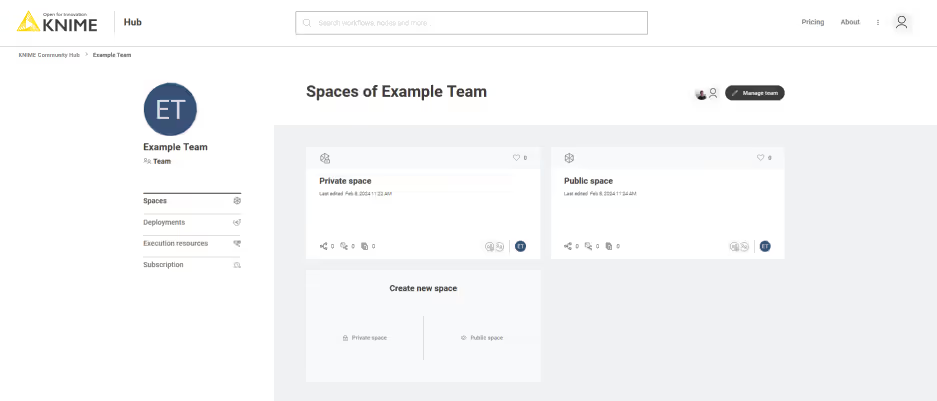

You can create a new space from the team's profile. To do so, click your profile icon in the top right corner of KNIME Hub and select your team. In the Create new space tile, click Private space to create a private space for your team, or Public space to create a public space. You can then change the name of the space or add a description. You can also do this later on the corresponding space page by clicking the space name or the Add description button.

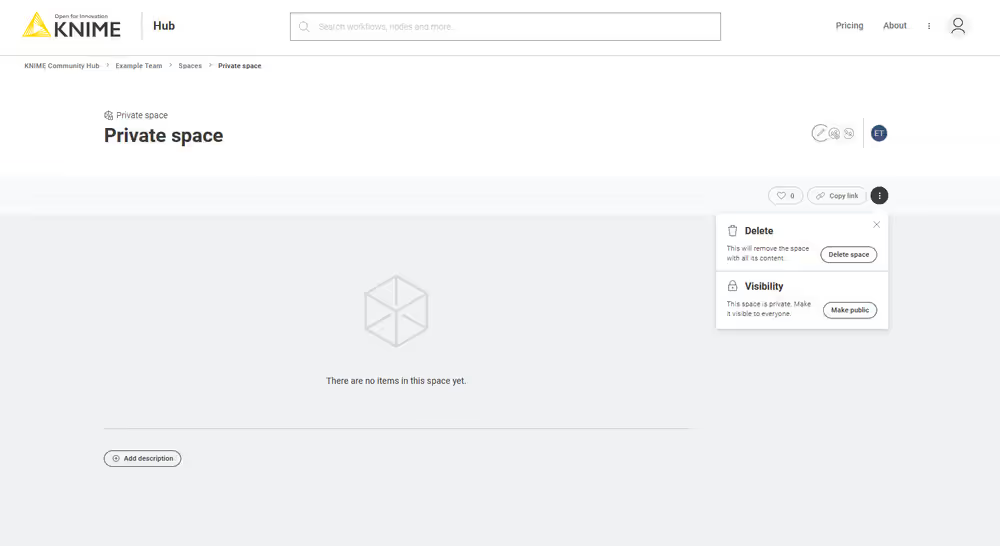

Furthermore, you can change the visibility of the space from private to public and vice versa, or delete the space. To do so, from the space page, click the menu icon, as shown in the image below.

Manage space access

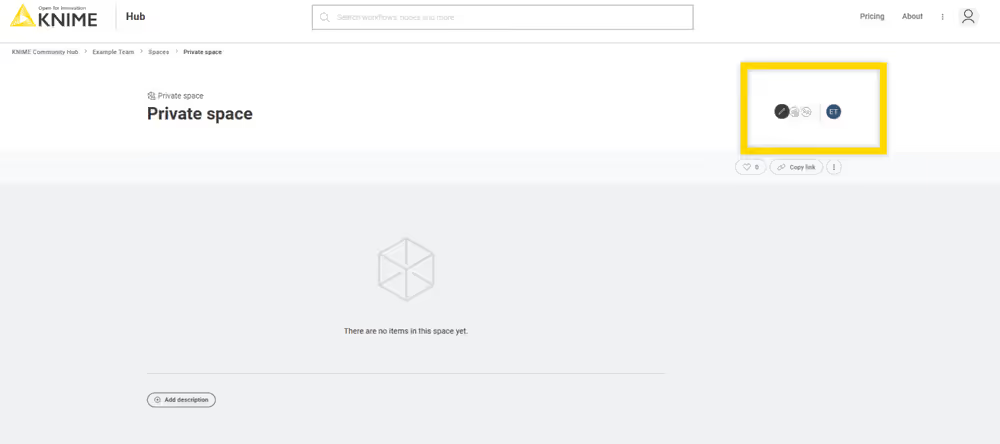

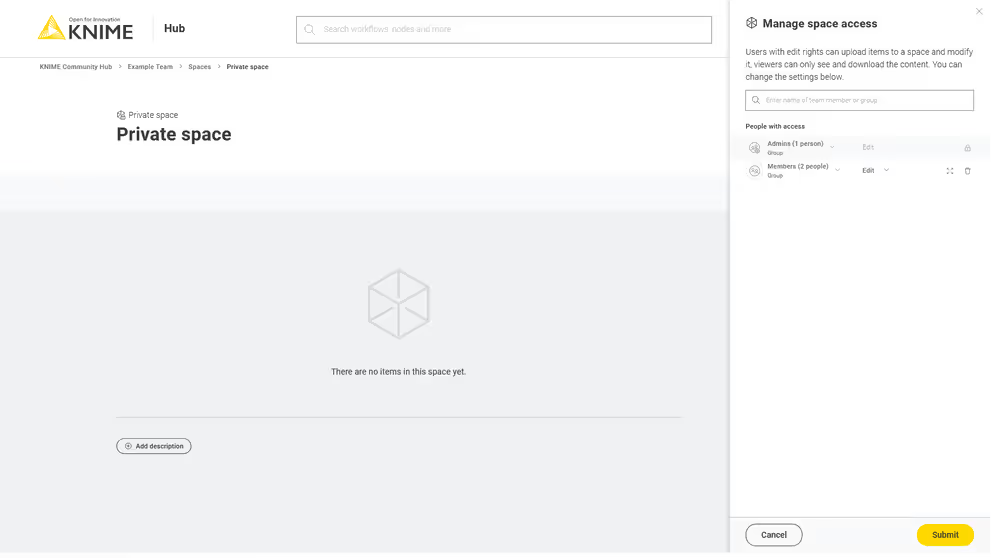

You can also manage the access to a specific space. To do so, navigate to the space and click the pencil icon.

In the Manage space access side panel that opens, you can add other team members and change the rights they have on the items in the space, for example by granting them Edit rights or View rights for this space.

Click the expand icon to inspect the individual team members and their rights separately.

Manage team members

You can manage your team by going to the team's profile. To do so, click your profile icon in the top right corner of KNIME Hub.

In the dropdown menu that opens, you will see your teams. Select the team you want to manage to go to the corresponding team's profile page.

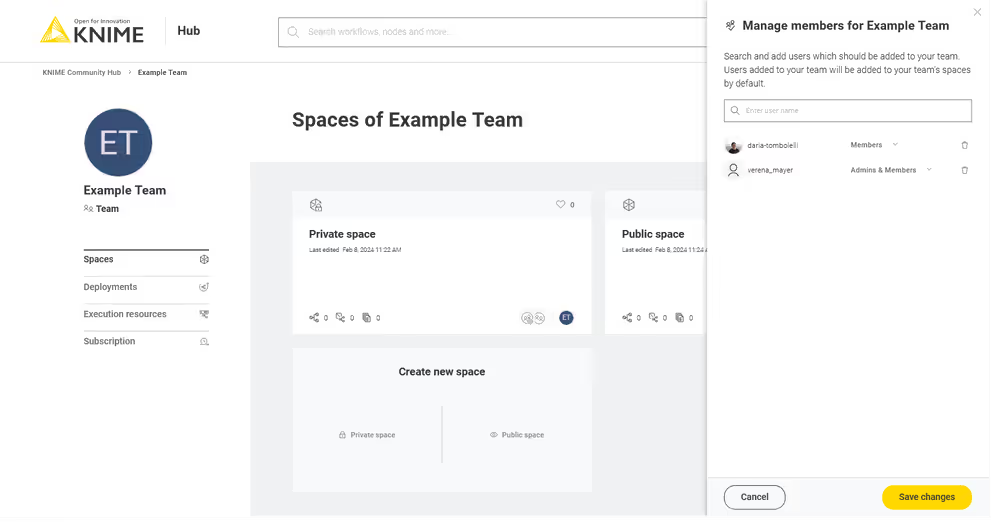

Here, you can click the Manage team button to open the Manage members side panel, as shown in the image below.

Here you will see a list of the team members and their assigned roles.

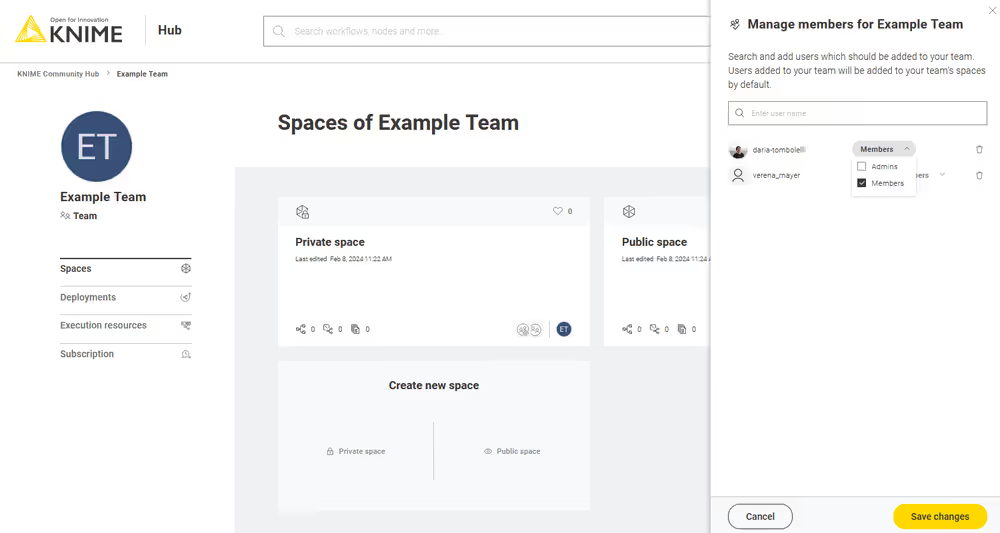

From here, a team admin can change the roles of the team members. To do so, click the dropdown arrow next to a user's name and select the role you want to assign.

Then click the Save changes button to apply your changes.

Add members to a team

To add a new member, enter the username of the user you want to add to the team in the search bar of the Manage members panel, then click the Save changes button to apply your changes.

A user only shows up in the search bar after they have signed in to KNIME Hub at least once.

When the maximum number of users allowed by your subscription is reached, a message in the Manage members panel notifies you. Click the Manage subscription button to purchase more users. Alternatively, you can remove existing members to make room for new ones.

Delete members from a team

To delete a member, go to the Manage members panel and click the trash icon for the user you want to delete. Then click the Save changes button to apply your changes.

Change team name

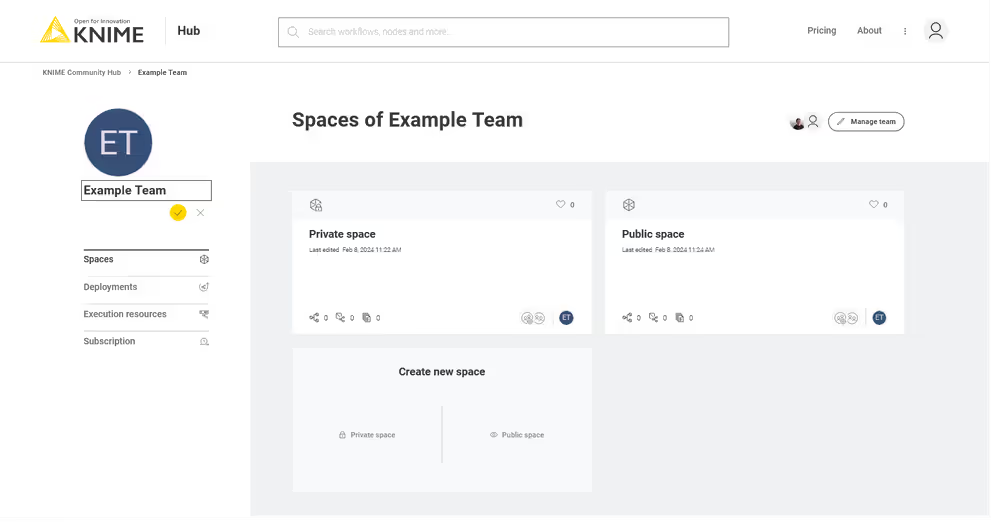

On the team's profile page, you can also change the name of the team. To do so, double-click the name of the team under the team logo on the left side of the page.

Insert the new name, and click the check button to confirm.

Change team profile icon

On the team's profile page, hover over the team profile icon and click on Upload new to select an image from your local computer.

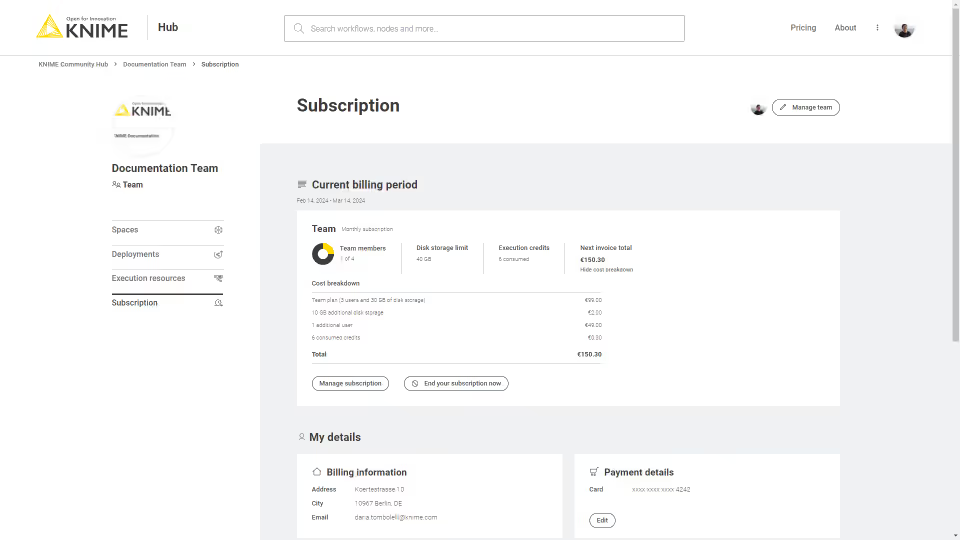

Manage team subscription

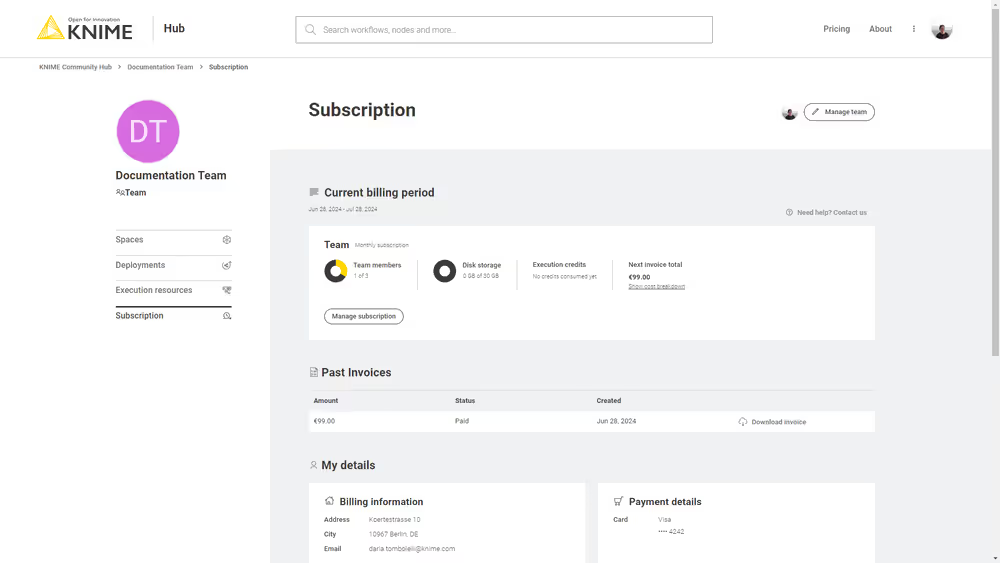

You can manage your subscription on the Subscription page. On the team's profile page, select Subscription on the side menu to access it.

Here you can:

Show the cost breakdown: Click Show cost breakdown to see the amount to be paid for your KNIME Team and the consumed credits for execution.

Manage subscription: Click Manage subscription and change the number of Users or the amount of Disk storage available for your team.

End subscription: In the Manage subscription side panel, click End your subscription now, then confirm with Cancel subscription. The cancellation takes effect at the end of your current billing cycle, after which your team and its spaces are deleted. Your data is kept for 30 days, during which you can re-activate your account. After 30 days, all your data is permanently deleted and can no longer be recovered.

To re-activate your KNIME Team please get in contact with us.

Past invoices: Here you can see an overview of all the invoices and download them by clicking Download invoice.

Inspect your billing information and edit your payment details.

Connect to KNIME Community Hub and upload workflows

As a member of a team on KNIME Community Hub you will be able to upload items, for example workflows or components, to the spaces of your team.

This gives you and all the other members of the team access to the items and lets you version them, so that you can collaborate on projects.

The process is the same as for KNIME Community Hub users who are not part of a team, as explained in the KNIME Community Hub User Guide.

The following sections describe the basic steps to connect to KNIME Community Hub and to upload and version your workflows.

The following steps assume that you have already created an account on KNIME Community Hub and that you are a member of a team.

Upload items to KNIME Hub

You can upload items to KNIME Hub:

- From the web UI of KNIME Community Hub, if the items are saved on your local computer.

- From a local KNIME Analytics Platform instance, if the items are on your KNIME Analytics Platform local space.

Upload from KNIME Community Hub web UI

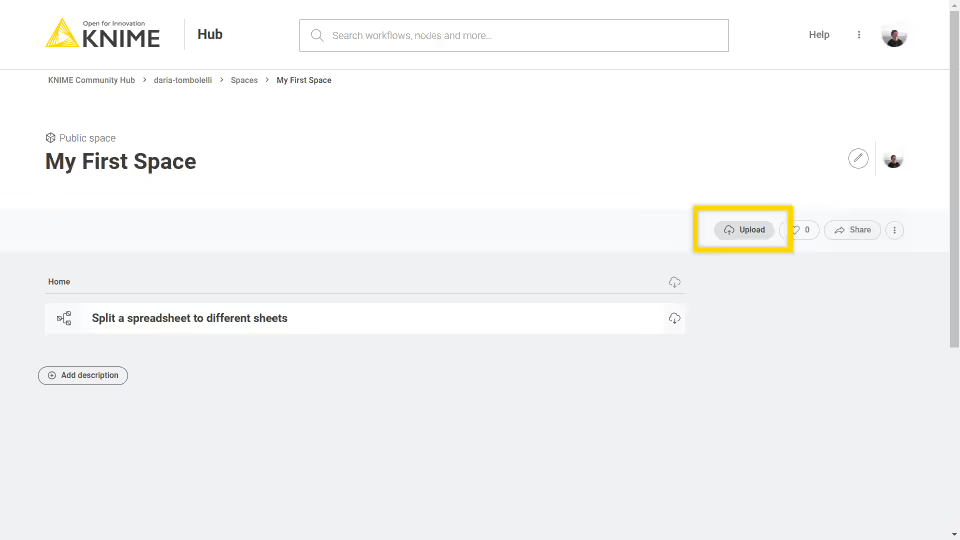

Go to KNIME Community Hub, sign in with your username and password, then navigate to the space to which you want to upload your workflow (.knwf file) or file. Click the Upload button and select the file you want to upload from your local file system.

Upload from KNIME Analytics Platform

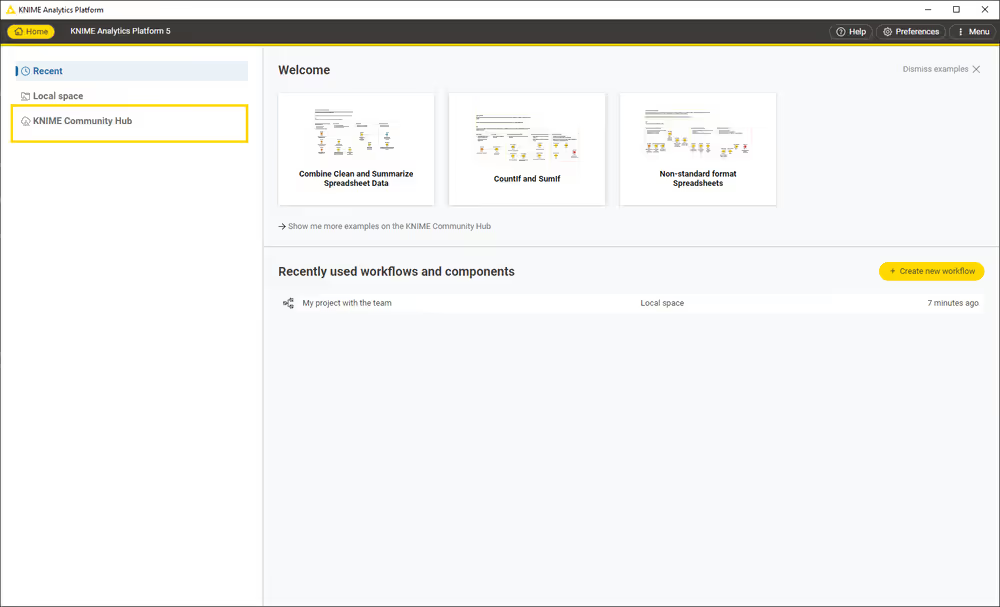

- Connect to the KNIME Community Hub from KNIME Analytics Platform: The first step is to connect to your KNIME Community Hub account on KNIME Analytics Platform. To do so, go to the Home page of KNIME Analytics Platform and sign in to KNIME Community Hub.

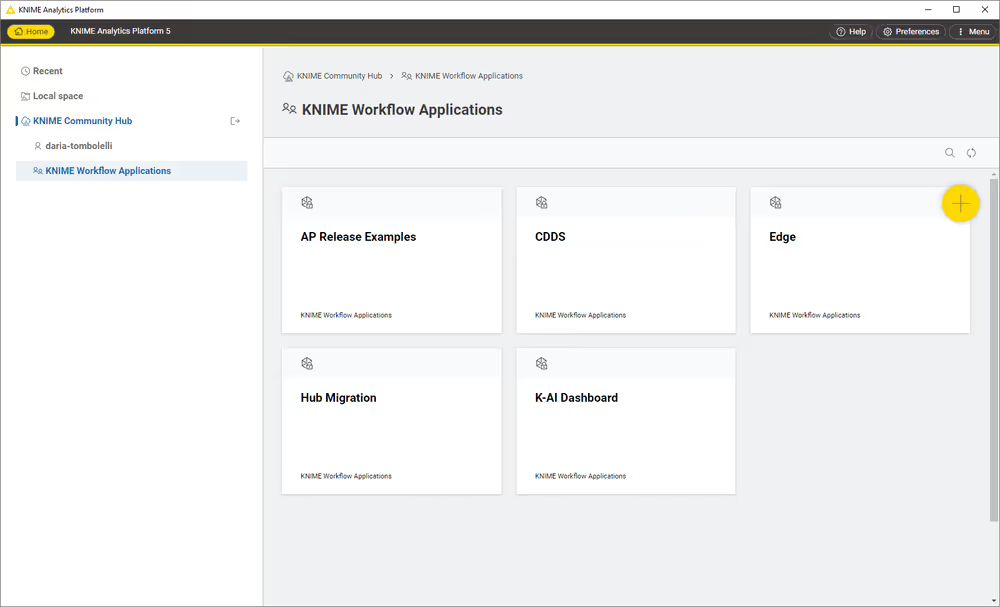

You will be redirected to the sign-in page if you are not already signed in during the current session. Once you are signed in, you will see all your spaces and the spaces of your team.

Click on a space to access the items within the space. You can perform different types of operations on them:

Upload items to your KNIME Hub spaces, download, duplicate, move, delete, or rename your items. More information about this functionality is provided in the next section.

Open workflows as local copies or on KNIME Hub. You can find the respective buttons in the toolbar on top.

Create folders to organize your items in the space. Click the Create folder button in the toolbar on top.

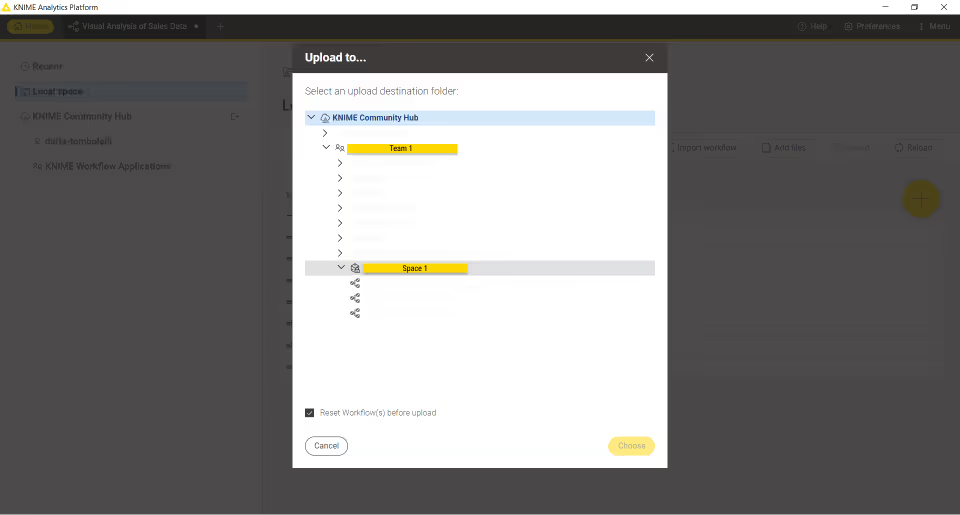

Once you are connected to your KNIME Hub account from KNIME Analytics Platform, you can upload the desired items to your KNIME Hub spaces. You can upload workflows or components to any of your spaces by right-clicking the item from the space explorer in KNIME Analytics Platform and selecting Upload from the context menu. A window will open where you will be able to select the location where you want to upload your workflow or component.

Items that are uploaded to a public space will be available to everyone. Hence, be very careful with the data and information (e.g., credentials) you share.

Version items

When uploading items to a space on KNIME Hub, you will be able to keep track of their changes. Your workflow or component will be saved as a draft until a version is created.

When you create versions of the items, you can then go back to a specific saved version at any point in time in the future to download the item in that specific version.

Once a version of the item is created, new changes to the item show up as a draft.

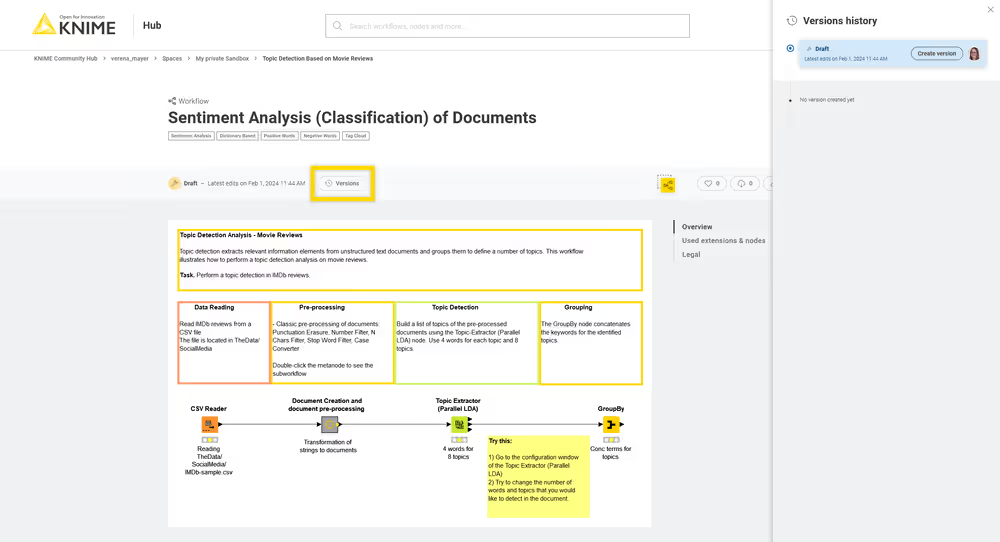

Create a version of an item

- Go to the item you want to create a version of

- Click Versions

- Click Create version in the Versions history panel on the right side of the page

- A side panel opens where you can give the version a name and add a description

- Click Create to create the version

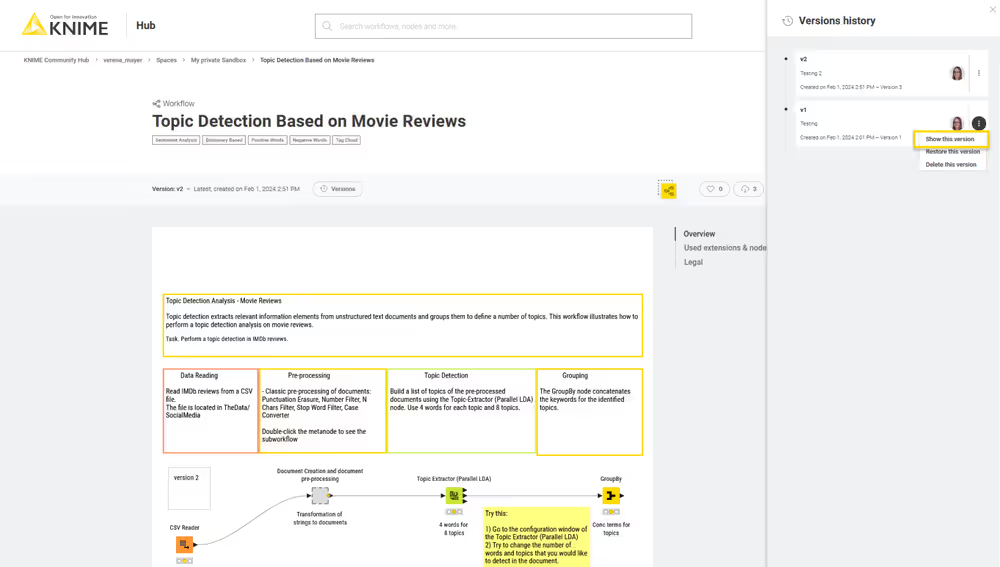

Show a version

In the Versions history panel, click the version you want to see, or click the icon and select Show this version. You will be redirected to the item in that specific version.

To go back to the latest state of the item, click the selected version to unselect it.

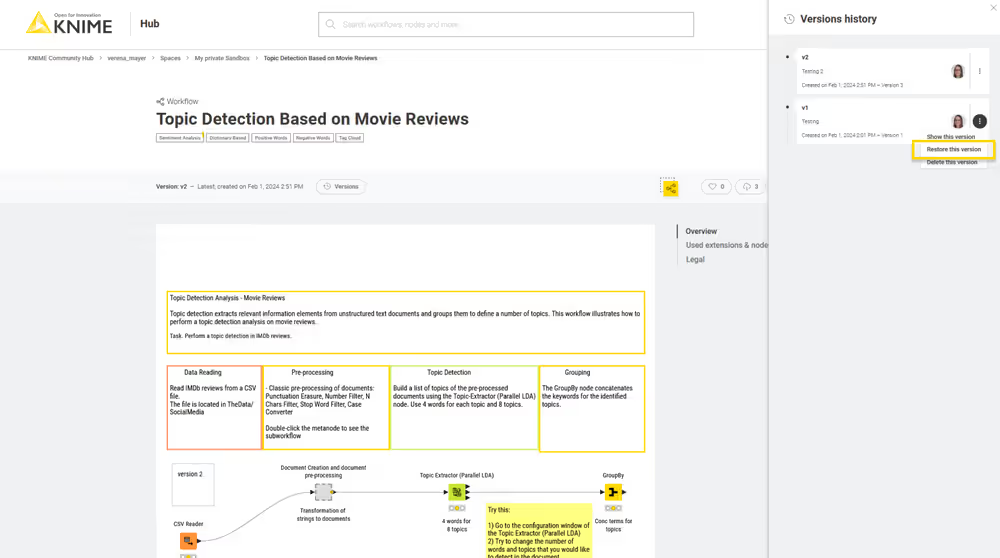

Restore a version

To restore a version that you created, click the icon in the version tile from the item history and select Restore this version.

The version will be restored as a draft.

Delete a version

Only team admins can delete created versions, and only for workflows that are uploaded to a team space.

In the Versions history panel, click the icon for the version you want to delete and click Delete.

Move items

You can move items that you have uploaded to KNIME Hub to a new location within the same space, or to a different space that you have access to. To do this, you need to be connected to KNIME Hub in KNIME Analytics Platform. To move items within the same space, drag the item in the space explorer, for example to a subfolder. To move items from one space to another, right-click the item and select Move to. In the Destination window that opens, select the space to which you want to move the item.

These changes will automatically apply to the space on KNIME Hub.

Delete items from KNIME Hub

You can also delete items that you uploaded to KNIME Hub.

To do so you can:

Connect to KNIME Hub on KNIME Analytics Platform. Right-click the item you want to delete and select Delete from the context menu.

From KNIME Hub, sign in with your account and go to the item you want to delete. Click the icon in the top right of the page and select Move to bin. This moves the item to the bin, where it stays for 30 days before being permanently deleted.

You can:

Permanently delete the item from the bin by going to the profile page, selecting Recycle bin from the left-hand menu, and clicking Delete permanently next to the item you want to delete.

Restore the item from the bin within the 30-day time frame by going to the profile page, selecting Recycle bin from the left-hand menu, and clicking Restore next to the item you want to restore.
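The 30-day bin retention described above amounts to a simple date calculation. The following is a hypothetical Python sketch, not a KNIME Hub API. It assumes that the 30 days are counted from the moment the item is moved to the bin, and that the item can be restored until that period has fully elapsed.

```python
from datetime import datetime, timedelta

# Retention period stated in this guide; counting from the move-to-bin time is an assumption.
BIN_RETENTION = timedelta(days=30)


def permanent_deletion_time(moved_to_bin: datetime) -> datetime:
    """Return when an item in the bin would be permanently deleted (hypothetical helper)."""
    return moved_to_bin + BIN_RETENTION


def can_restore(moved_to_bin: datetime, now: datetime) -> bool:
    """An item can be restored while it is still within the retention window."""
    return now < permanent_deletion_time(moved_to_bin)


moved = datetime(2024, 3, 1, 12, 0)
print(permanent_deletion_time(moved))             # 2024-03-31 12:00:00
print(can_restore(moved, datetime(2024, 3, 20)))  # True
```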

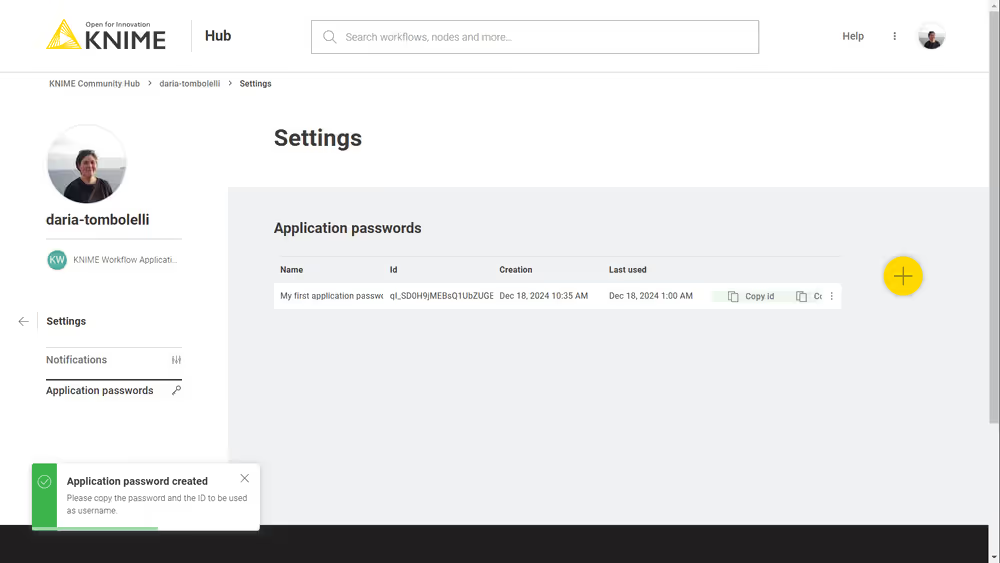

Application passwords

Application passwords can be used to authenticate against the KNIME Hub API, for example when using a KNIME Hub Authenticator node. To create a new application password, go to your profile page and select Settings > Application passwords from the menu on the left.

Click Create application password, and a side panel opens.

Here you can enter a name to keep track of the application password's purpose. Then click Apply. The ID and the password are shown only once. Copy them and use them as username and password for basic authentication, for example when you want to execute a deployed REST service.

From this page you can also delete a specific application password: click the icon next to it and select Delete from the menu that opens.

The application password bears the same permissions as your user account. Hence, to avoid any security risks, make sure to keep it confidential and do not share it with others.
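Because the application password ID and password serve as username and password for HTTP basic authentication, the Authorization header can be built with the Python standard library, as sketched below. The endpoint URL, the environment variable names, and the fallback credentials are hypothetical placeholders. The sketch only prepares the request: the HTTP method and payload depend on the REST service you deployed.

```python
import base64
import os
import urllib.request


def basic_auth_header(app_password_id: str, app_password: str) -> str:
    """Encode an application password as an HTTP Basic Authorization header value."""
    credentials = f"{app_password_id}:{app_password}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


def build_request(url: str, app_password_id: str, app_password: str) -> urllib.request.Request:
    """Prepare, but do not send, a request to a deployed REST service."""
    request = urllib.request.Request(url)
    request.add_header("Authorization", basic_auth_header(app_password_id, app_password))
    return request


# Read the credentials from the environment instead of hard-coding them.
# KNIME_APP_PASSWORD_ID, KNIME_APP_PASSWORD and the URL are hypothetical placeholders.
req = build_request(
    "https://example.com/your-rest-deployment",
    os.environ.get("KNIME_APP_PASSWORD_ID", "my-id"),
    os.environ.get("KNIME_APP_PASSWORD", "my-secret"),
)
# urllib.request.urlopen(req) would actually send the request.
```

Keeping the credentials out of the source code follows the advice above: the application password carries the same permissions as your user account.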

Execution of workflows

Execution allows you to run and schedule your team's workflows directly on KNIME Hub.

To do this, first configure the resources you need.

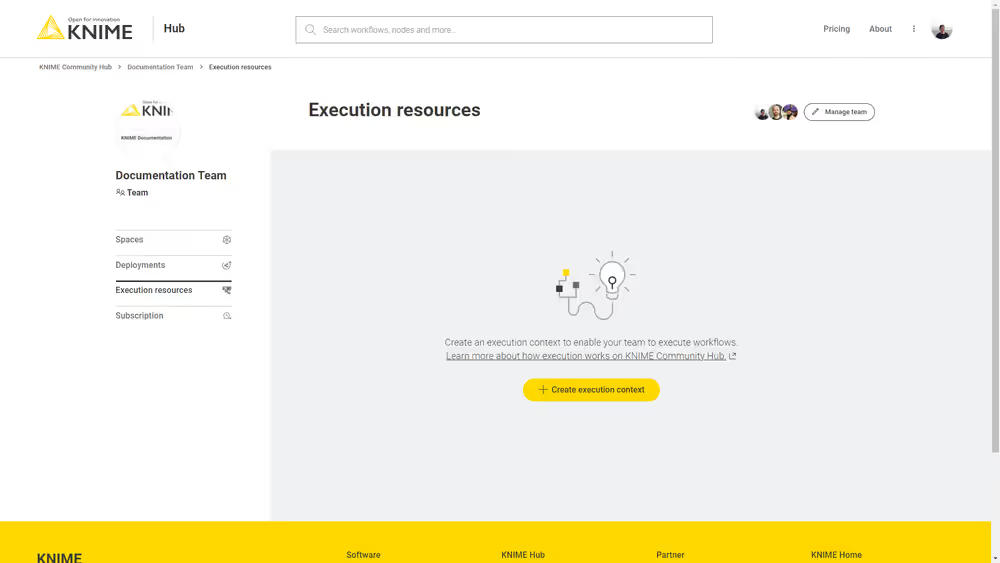

As the team admin, go to your team's page and select Execution resources from the menu on the left. Here you can create an execution context, which contains all the resources necessary to run your workflows.

This is how your page looks the first time you create an execution context.

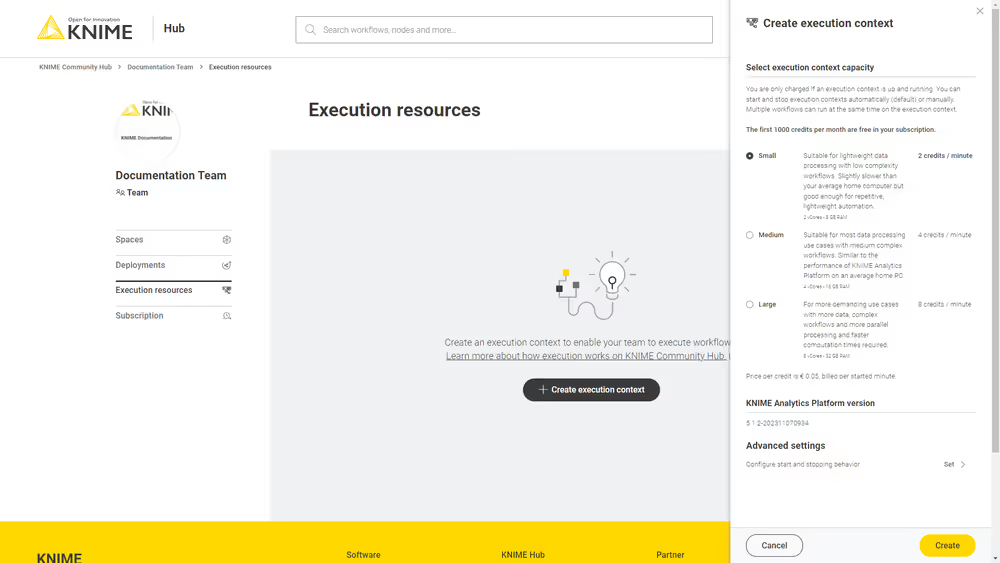

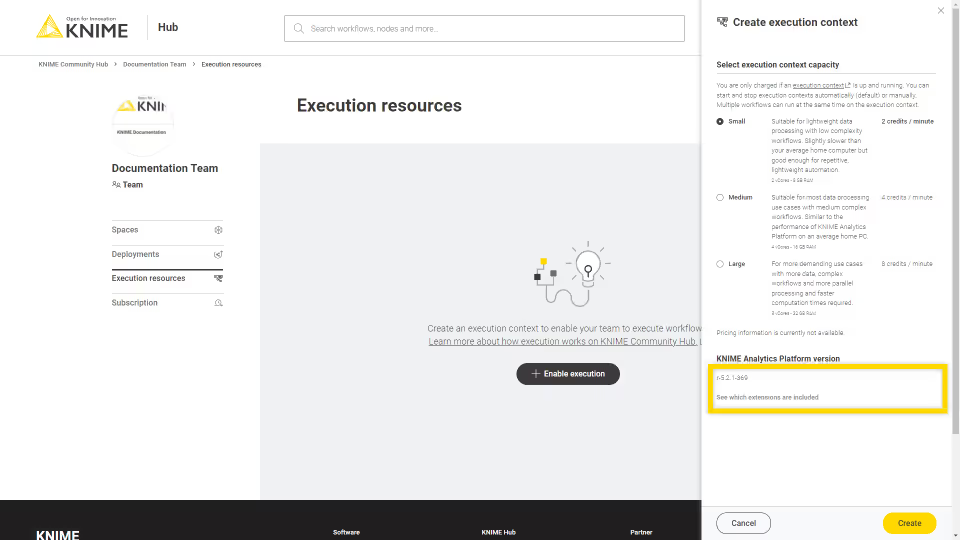

When you click the button to create a new execution context, a panel opens on the right.

Here you can configure the capacity of your execution context. The size you select determines how fast your workflows execute. You and your team members can execute as many workflows as you wish, even in parallel.

We offer three different execution context capacities:

- Small: 2 vCores and 8 GB of RAM. This option is suitable for lightweight data processing with low-complexity workflows. Execution is slightly slower than on a local KNIME Analytics Platform installation, so we recommend this option for lightweight automation, e.g. scheduling the execution of your workflows.

- Medium: 4 vCores and 16 GB of RAM. This option is suitable for most data processing use cases with workflows of medium complexity. The performance is similar to a local KNIME Analytics Platform installation.

- Large: 8 vCores and 32 GB of RAM. Select this option for more demanding use cases with heavier data and complex workflows, where you need more parallel processing power and faster computation times.
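The three capacities can be written down as data, which makes it easier to reason about which size fits a workflow. The helper below is a hypothetical illustration, not a KNIME Hub feature. It assumes you already have a rough estimate of the cores and RAM your workflow needs, which this guide does not prescribe.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ContextSize:
    name: str
    vcores: int
    ram_gb: int


# Capacities as listed in this guide, ordered from smallest to largest.
SIZES = [
    ContextSize("Small", 2, 8),
    ContextSize("Medium", 4, 16),
    ContextSize("Large", 8, 32),
]


def smallest_fitting_size(min_vcores: int, min_ram_gb: int) -> Optional[ContextSize]:
    """Return the smallest listed size meeting both requirements, or None (hypothetical helper)."""
    for size in SIZES:
        if size.vcores >= min_vcores and size.ram_gb >= min_ram_gb:
            return size
    return None


print(smallest_fitting_size(2, 12).name)  # Medium
```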

You are charged only while the execution context is running. The execution context starts and stops automatically on demand: it starts when a workflow execution starts or when an executed workflow is being inspected, and it stops once all workflow executions have finished and no executed workflow is being inspected.
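The execution context runs whenever at least one execution or inspection is active. Its running time is therefore the union of the activity intervals, not their sum. The sketch below illustrates only that reasoning. It is a hypothetical model that ignores start-up and shutdown delays and any minimum billing granularity, none of which this guide specifies.

```python
from typing import List, Optional, Tuple

# (start, end) of one execution or inspection, in minutes from an arbitrary origin.
Interval = Tuple[float, float]


def running_minutes(activities: List[Interval]) -> float:
    """Time during which at least one activity keeps the execution context running."""
    total = 0.0
    start: Optional[float] = None
    end: Optional[float] = None
    for a_start, a_end in sorted(activities):
        if end is None or a_start > end:
            # No overlap: the context would have stopped, so close the previous running period.
            if end is not None:
                total += end - start
            start, end = a_start, a_end
        else:
            # Overlapping or touching activity: extend the current running period.
            end = max(end, a_end)
    if end is not None:
        total += end - start
    return total


# Two overlapping jobs and one later job: 20 + 5 running minutes, not 10 + 15 + 5.
print(running_minutes([(0, 10), (5, 20), (30, 35)]))  # 25.0
```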

Each execution context is based on a specific version of KNIME Analytics Platform provided by us. The extensions available on the executor are listed in the section Extensions available for execution on KNIME Community Hub.

Manage execution contexts

You can manage the execution context of your team by navigating to your team overview page and selecting Execution resources from the menu on the left.

Here, among other things, you can edit the execution context settings, disable or delete your execution context, or show the details of the CPU usage, the jobs that are running on the execution context and so on.

To do so, select the appropriate option in the menu that opens when you click the icon in the tile of the execution context.

Monitor execution contexts consumption

To monitor your execution consumption and projected costs, you can go to your subscription page.

Go to your team page and select Subscription from the menu on the left.

Execute a workflow

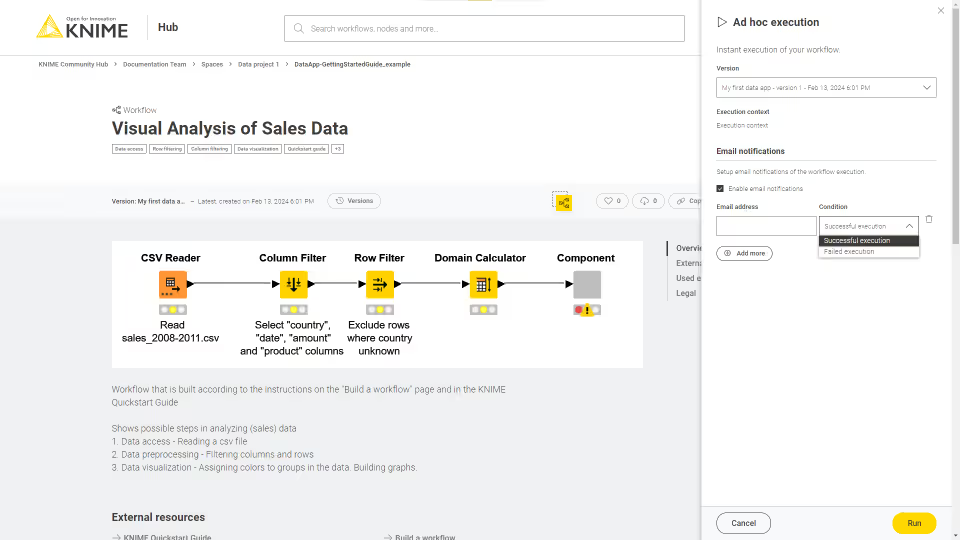

Once the execution context is set up, you can run and schedule the workflows that are uploaded to your team's spaces on KNIME Community Hub. When you navigate to a workflow in one of your team's spaces, you will see a Run button and a Deploy button.

Click Run to execute the current workflow directly in the browser. By default, the latest version of your workflow is executed, but you can select which version you want to run. In the side panel that opens, you can also enable workflow actions, for example to have the system send you an email when the chosen condition is met.

Be aware that this action starts up your executor, and billing starts once the execution starts.

A new tab will open where the result of the workflow execution will be shown. If your workflow is a data app you will be able to interact with its user interface. To know more about how to build a data app refer to the KNIME Data Apps Beginners Guide.

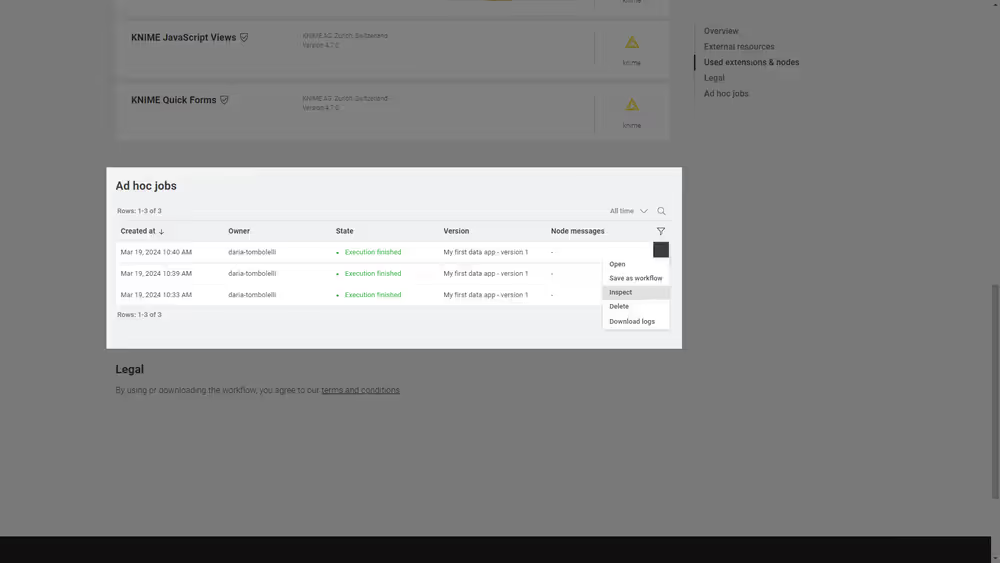

To see all the ad hoc jobs created for a workflow navigate to the workflow page and select Ad hoc jobs from the right side menu.

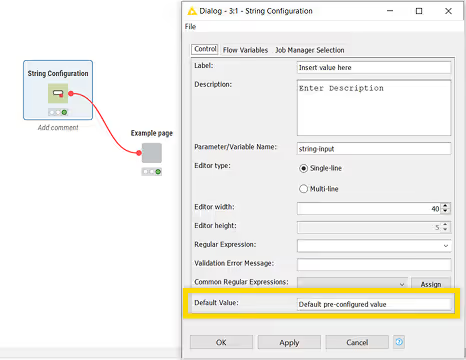

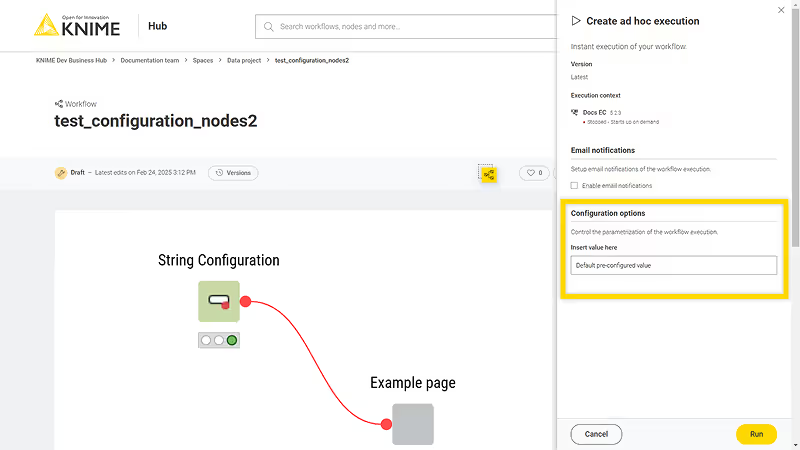

Parameterization of workflows with Configuration nodes

When you execute a workflow on KNIME Hub you can also provide parameters to the workflow using Configuration nodes.

When building the workflow, add a Configuration node in the root of the workflow, meaning that the Configuration node should not be nested in a component.

Configuration nodes allow you to provide parameters that can be:

- Pre-configured with certain values. This can be done by choosing the default value in the configuration dialog of the Configuration node.

- Configured by the user at the time of execution or when creating a deployment. This can be done from the panel that opens when you click Run on the workflow page or when you create a deployment.
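Conceptually, a value entered when you click Run or create a deployment takes precedence over the default chosen in the Configuration node's dialog. The following hypothetical Python sketch models only that precedence. The parameter names are invented, and rejecting names without a matching Configuration node is an assumption made for illustration.

```python
from typing import Any, Dict


def effective_parameters(defaults: Dict[str, Any], overrides: Dict[str, Any]) -> Dict[str, Any]:
    """Values entered at run or deployment time replace the defaults from the node dialogs."""
    unknown = set(overrides) - set(defaults)
    if unknown:
        raise KeyError(f"No Configuration node for: {sorted(unknown)}")
    return {**defaults, **overrides}


# Hypothetical parameters defined by two Configuration nodes.
defaults = {"row_limit": 1000, "region": "EU"}
print(effective_parameters(defaults, {"row_limit": 50}))  # {'row_limit': 50, 'region': 'EU'}
```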

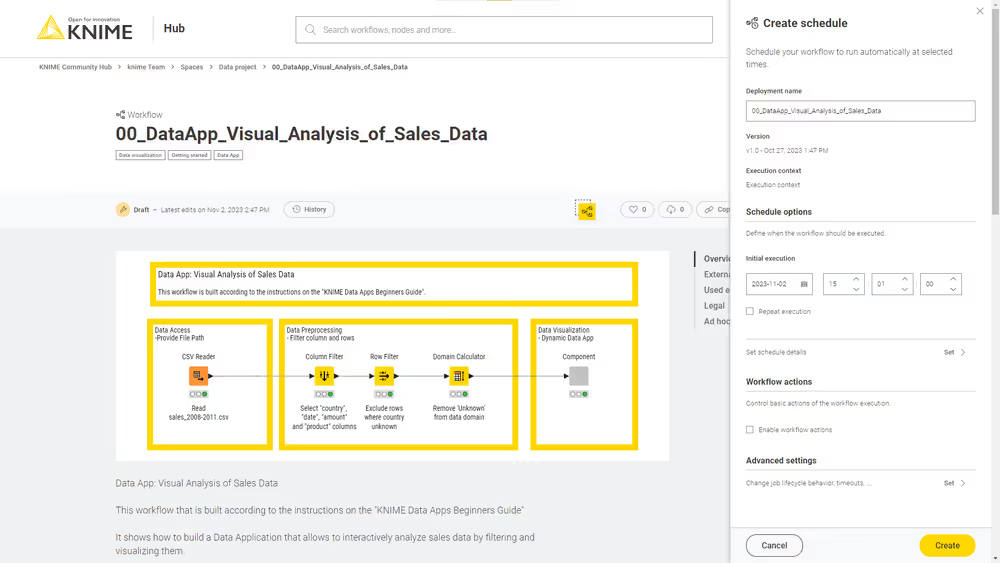

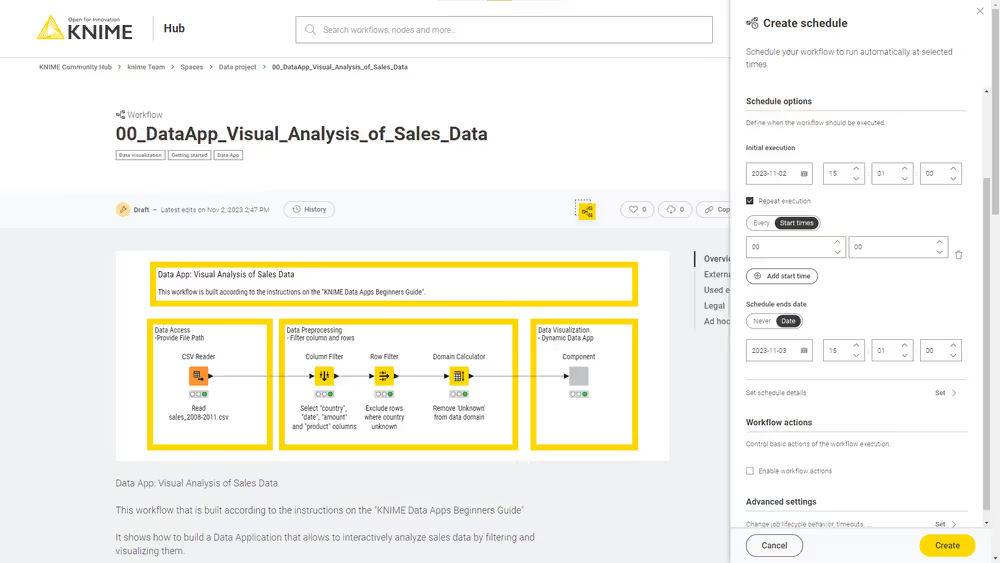

Schedule a workflow execution

A schedule allows you to set up a workflow to run automatically at selected times.

To create a schedule deployment, navigate to a workflow in one of your team's spaces. You need to have created at least one version of your workflow before you can create a schedule deployment. To learn more about versioning, read the Version items section of this guide. Once you have a stable version of the workflow, create a version (click Versions > Create version). Then click the Deploy button and select Create schedule.

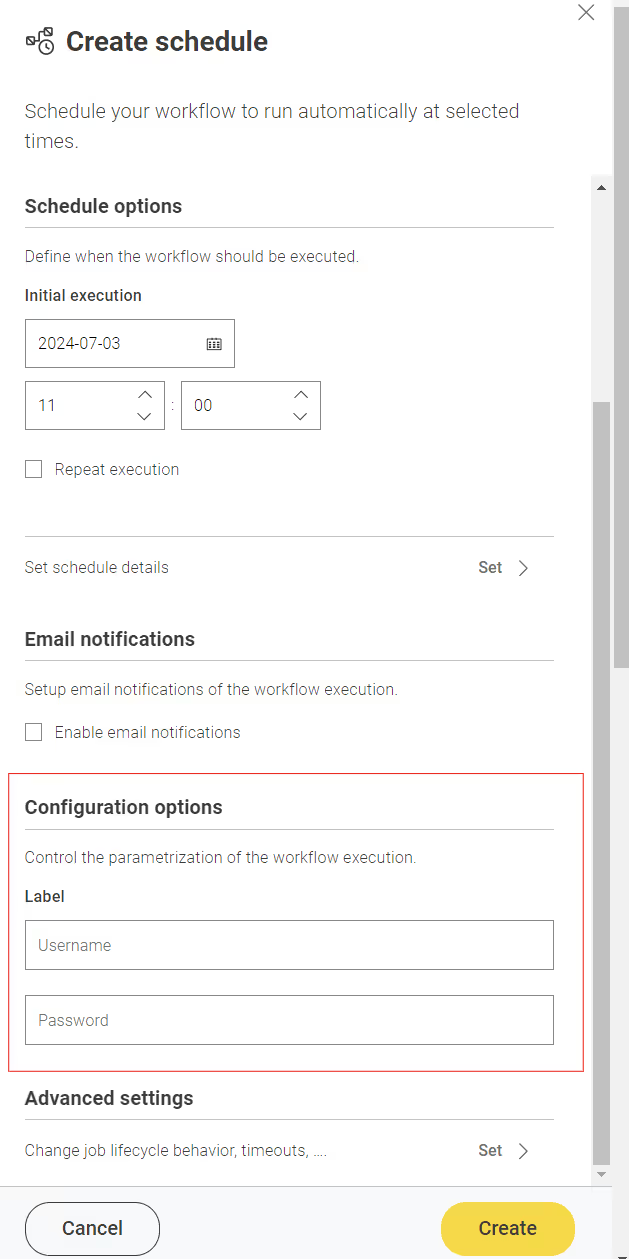

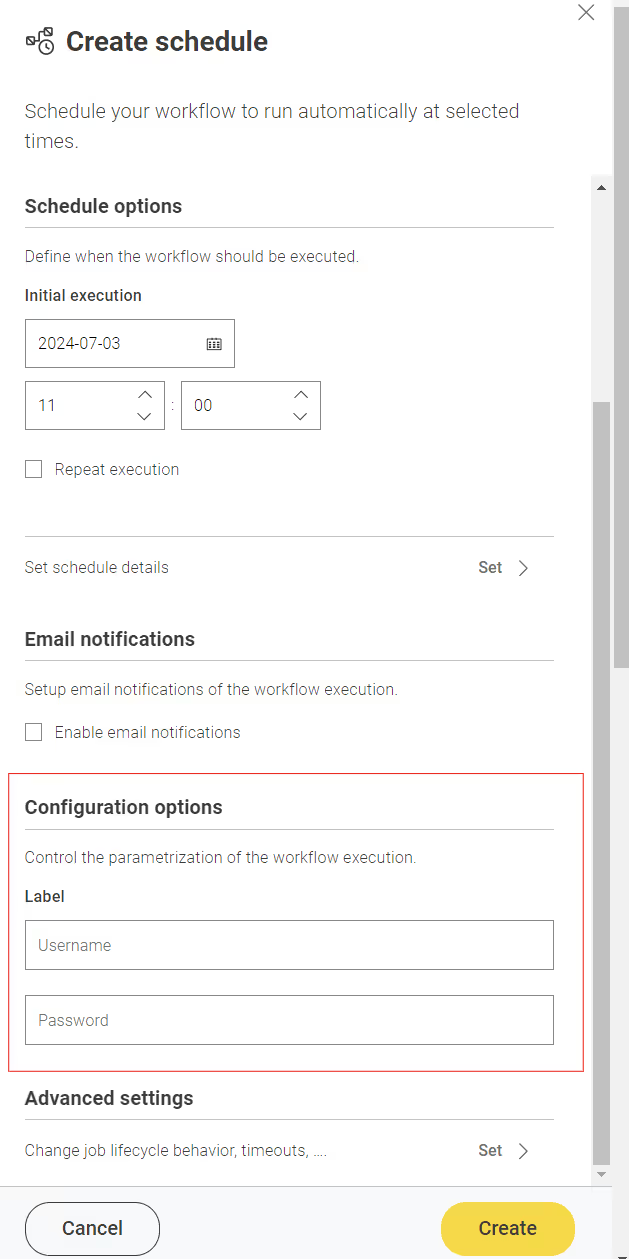

This will open a side panel where you can set up the schedule deployment.

Here you can select a name for your schedule deployment and which version of the workflow you want to schedule for execution.

To see all the deployments created for a workflow navigate to the workflow page and select Deployments from the right side menu. To see all the deployments created by your team navigate to the team page and select Deployments from the left side menu.

In the section Schedule options you can define when the workflow should be executed:

- Set the date and time of the initial execution.

- Select Repeat execution to run the workflow at a fixed interval (e.g. every 2 hours), or select start times at which the workflow is executed every day.

- Set an end for the schedule: either it never ends, or it ends at a specific date and time.
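The schedule options above (an initial execution, a fixed repeat interval, and an optional end) can be modeled as a generator of execution times. This is a hypothetical sketch, not how KNIME Hub computes schedules. It assumes the end date is inclusive, and it does not cover the daily start-times option.

```python
from datetime import datetime, timedelta
from typing import Iterator, Optional


def scheduled_runs(first_run: datetime, every: Optional[timedelta],
                   ends: Optional[datetime]) -> Iterator[datetime]:
    """Yield execution times: the initial run, then one every `every` until `ends` (None = never)."""
    run = first_run
    while ends is None or run <= ends:
        yield run
        if every is None:  # Repeat execution not selected: a single run only
            return
        run += every


runs = list(scheduled_runs(datetime(2024, 5, 6, 8, 0), timedelta(hours=2), datetime(2024, 5, 6, 14, 0)))
print([r.strftime("%H:%M") for r in runs])  # ['08:00', '10:00', '12:00', '14:00']
```

With `ends=None` and a repeat interval, the generator is infinite, so consume it lazily (for example with `itertools.islice`).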

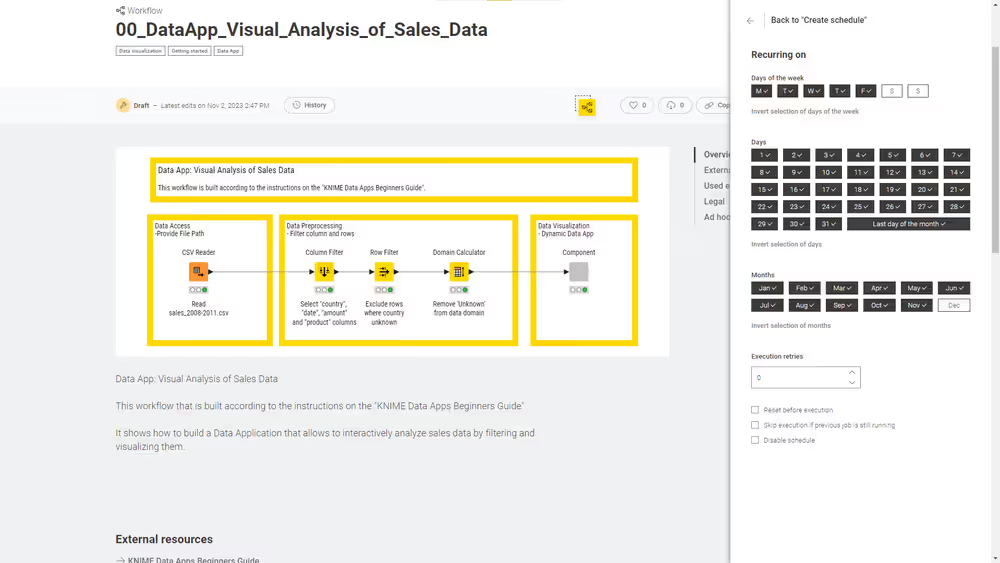

Set additional schedule details

You can also set more advanced schedule details.

First, check the option Repeat execution under Set schedule details. Then click Set to configure recurring executions, retries, and advanced schedule details.

Click Set and the following panel will open:

If you did not check the option Repeat execution, only the options for retries and the advanced schedule details are available.

The workflow will run when all the selected conditions are met.

In this example the workflow will run from Monday to Friday, every day of the month, except for the month of December.
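Because the workflow runs only when all selected conditions are met, the example above is a logical AND of a weekday check, a day-of-month check, and a month check. The following is a hypothetical Python sketch of that example. It assumes each condition is evaluated against a candidate run time, and the set names are illustrative.

```python
from datetime import datetime

# Hypothetical encoding of the example: Monday-Friday, every day of the month, all months but December.
ALLOWED_WEEKDAYS = {0, 1, 2, 3, 4}        # datetime.weekday(): Monday == 0
ALLOWED_DAYS_OF_MONTH = set(range(1, 32))
ALLOWED_MONTHS = set(range(1, 12))        # 1..11, December (12) excluded


def should_run(t: datetime) -> bool:
    """A candidate run time passes only if every selected condition is met."""
    return (
        t.weekday() in ALLOWED_WEEKDAYS
        and t.day in ALLOWED_DAYS_OF_MONTH
        and t.month in ALLOWED_MONTHS
    )


print(should_run(datetime(2024, 11, 29)))  # Friday in November: True
print(should_run(datetime(2024, 12, 2)))   # Monday in December: False
```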

Finally, in the section Execution retries you can set up the number of execution retries, and check the following options for advanced schedule details:

Reset before execution: the workflow is reset before each scheduled execution and each retry.

Skip execution if previous job is still running: the scheduled execution will not take place if the previous scheduled execution is still running.

Disable schedule: Check this option to disable the schedule. Scheduled executions resume according to the configured settings once the schedule is re-enabled.
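How the options Skip execution if previous job is still running and Execution retries interact for a single scheduled trigger can be sketched as follows. This is a hypothetical model, not KNIME Hub's implementation. It assumes that a retry is attempted only when an execution fails, and it omits Reset before execution and Disable schedule.

```python
from typing import Callable


def handle_trigger(previous_still_running: bool, skip_if_running: bool,
                   execute: Callable[[], bool], retries: int) -> str:
    """Model one scheduled trigger; `execute` returns True if the workflow run succeeds."""
    if previous_still_running and skip_if_running:
        return "skipped"
    # One initial attempt plus up to `retries` additional attempts.
    for _ in range(1 + retries):
        if execute():
            return "succeeded"
    return "failed"


outcomes = iter([False, True])  # the first attempt fails, the retry succeeds
print(handle_trigger(False, True, lambda: next(outcomes), retries=1))  # succeeded
```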

Set configuration options

This section appears automatically when the workflow contains Configuration nodes in its root.

See the Parameterization of workflows with Configuration nodes section for more information on how to add configuration nodes to your workflow.

Set advanced settings

Finally, you can select advanced settings for each schedule deployment.

Click Set under the Advanced settings section to do so.

In the panel that appears you can configure additional settings:

Job lifecycle: such as deciding in which case to discard a job, the maximum time a job will stay in memory, the job life time, or the options for timeout. Particularly important in this section is the parameter Max job execution time: the default setting can be changed according to your needs to keep control over the execution time.

Additional settings: such as execution scope or the option to update the linked components when executing the scheduled job.

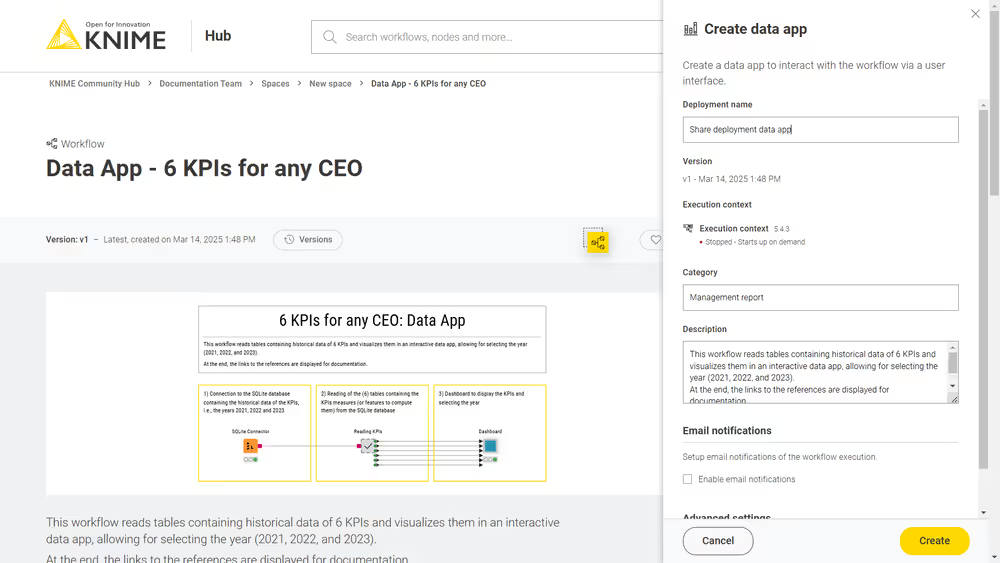

Deploy a workflow as a data app

Deploying a workflow as a data app allows you to share the data application with other users, who can interact with the workflow through a user interface.

To deploy a workflow as a data app, navigate to a workflow in one of your team's spaces. You need to have created at least one version of your workflow before you can deploy it as a data app. To learn more about versioning, read the Version items section of this guide. Once you have a stable version of the workflow, create a version (click Versions > Create version). Then click the Deploy button and select Create data app.

This will open a side panel where you can set up the data app deployment.

Here you can select a name for your deployment and which version of the workflow you want to use.

To see all the deployments created for a workflow navigate to the workflow page and select Deployments from the right side menu. To see all the deployments created by your team navigate to the team page and select Deployments from the left side menu.

You can also select a Category and a Description. Categories are used to group the data apps in the Data Apps Portal, and descriptions are shown in the corresponding deployment tile in the Data Apps Portal.

Set configuration options

This section appears automatically when the workflow contains Configuration nodes in its root.

See the Parameterization of workflows with Configuration nodes section for more information on how to add configuration nodes to your workflow.

Set advanced settings

Finally, you can select advanced settings for each data app deployment. Click Set under the Advanced settings section to do so.

In the panel that appears you can configure additional settings:

Job lifecycle: such as deciding in which case to discard a job, the maximum time a job will stay in memory, the job life time, or the options for timeout. Particularly important in this section is the parameter Max job execution time: the default setting can be changed according to your needs to keep control over the execution time.

Additional settings: such as report timeouts, CPU and RAM requirements, the option to update the linked components when executing the job, and so on.

Run a data app deployment

Once you have created a data app deployment, you can see the list of deployments on the corresponding workflow page.

Click the icon on the row corresponding to the deployment you want to execute, and click Run.

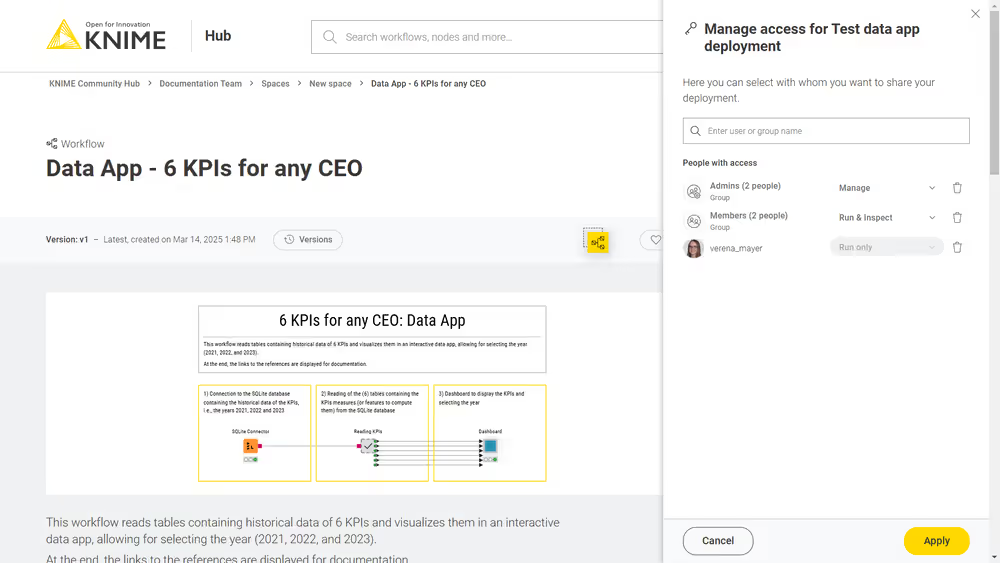

Manage access to data apps

You can change who has access to data apps.

In the Deployments section on the workflow page, click the icon.

Select Manage access from the menu.

A side panel opens. Here, you can type the name of the user you want to share the data app with and select the access level you want to give to the team members and team admins.

By default, the data app deployment is shared with all members of your team:

Team admins have Manage access. They can change access settings, delete the deployment, and run it.

Team members have Manage access. They can change access settings, delete the deployment, and run it. The access can also be changed to Run & Inspect. Team members with this role can run the deployment and inspect the results, but cannot change access settings or delete it.

Users outside your team: You can share the data app with external users by typing their name into the search bar and clicking Apply. These users receive Run only access, meaning they can run the deployment but cannot change access settings or delete it. This access level cannot be modified.
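The three access levels differ only in which actions they permit, so they can be modeled as sets of permissions. The following hypothetical Python sketch encodes the levels and defaults listed above. The names are illustrative and do not correspond to a KNIME Hub API. Including inspection in Manage is an assumption, because the guide does not list it explicitly.

```python
from enum import Flag, auto


class Permission(Flag):
    RUN = auto()
    INSPECT = auto()
    CHANGE_ACCESS = auto()
    DELETE = auto()


# Access levels as described in this guide; Manage including INSPECT is an assumption.
ACCESS_LEVELS = {
    "Manage": Permission.RUN | Permission.INSPECT | Permission.CHANGE_ACCESS | Permission.DELETE,
    "Run & Inspect": Permission.RUN | Permission.INSPECT,
    "Run only": Permission.RUN,
}

# Defaults for a new data app deployment; external users always stay at Run only.
DEFAULT_LEVELS = {"team admin": "Manage", "team member": "Manage", "external user": "Run only"}


def is_allowed(level: str, action: Permission) -> bool:
    """Check whether an access level includes the requested action."""
    return action in ACCESS_LEVELS[level]


print(is_allowed("Run & Inspect", Permission.DELETE))  # False
```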

Jobs

Every time a workflow is executed, a job is created on KNIME Hub.

To see the list of all the jobs that are saved in memory for the ad hoc execution of a specific workflow go to the workflow page and on the right side menu click Ad hoc jobs.

To see the list of all the jobs that are saved in memory for each of the deployments created for a specific workflow, go to the workflow page and on the right side menu click Deployments. You can expand each deployment with the icon on the left of the deployment.

You can also go to your Pro profile page to find a list of all the deployments you created. Here, too, you can click the icon corresponding to a specific deployment to see all its jobs.

On each job you can click the icon on the right of the corresponding job line in the list and perform the following operations:

Open: For example you can open the job from a data app and look at the results in a new tab

Save as workflow: You can save the job as a workflow in a space

Inspect: A job viewer opens in a new tab. Here, you can investigate the status of jobs.

Delete: You can delete the job

Download logs: You can download the log files of a job - this feature allows for debugging in case the execution of a workflow did not work as expected.

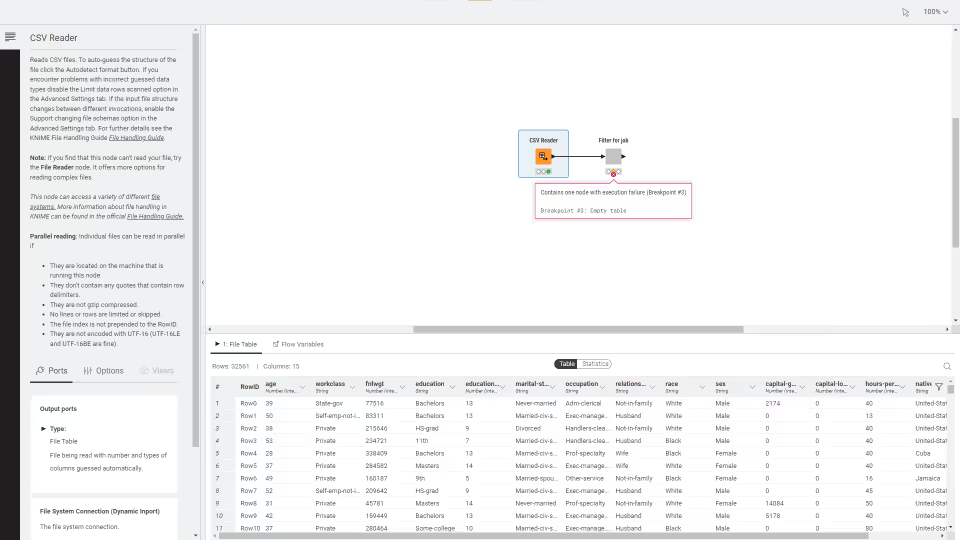

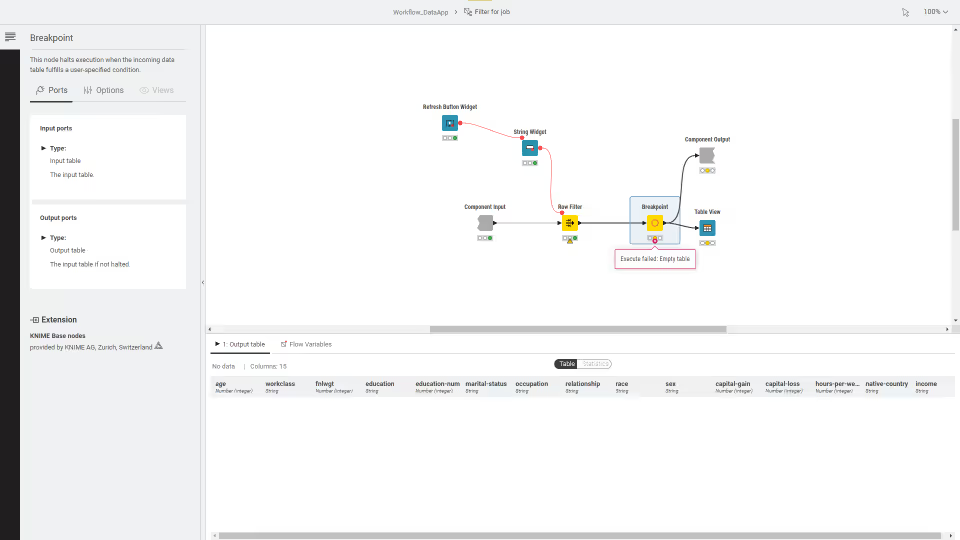

Inspect an executed workflow

You can inspect the status of an executed workflow, for example if the workflow execution did not succeed, or the execution is taking a long time. Click the icon for the desired job and select Inspect.

Be aware that this action starts up your executor, and billing starts once the inspection starts.

Here you can hover over the info and error icons at a node's traffic light to see the message, check where the execution stopped, or check the status of specific nodes. You can also inspect the data at any node's output port.

You can also navigate inside components and metanodes and inspect the nodes.

Extensions available for execution on KNIME Community Hub

All the extensions in the following list are available to run workflows on KNIME Community Hub.

- KNIME Expressions

- KNIME Executor connector

- KNIME Remote Workflow Editor for Executor

- KNIME Remote Workflow Editor

- KNIME Hub Additional Connectivity (Labs)

- KNIME Hub Integration

- KNIME Active Learning

- KNIME AI Assistant (Labs)

- KNIME Autoregressive integrated moving average (ARIMA)

- KNIME Basic File System Connectors

- KNIME Views

- KNIME Nodes for Wide Data (many columns)

- KNIME Big Data Connectors

- KNIME Databricks Integration

- KNIME Extension for Big Data File Formats

- KNIME Extension for Apache Spark

- KNIME Extension for Local Big Data Environments

- KNIME Chromium Embedded Framework (CEF) Browser

- KNIME Integrated Deployment

- KNIME Base Chemistry Types & Nodes

- KNIME Amazon Athena Connector

- KNIME Amazon DynamoDB Nodes

- KNIME Amazon Cloud Connectors

- KNIME Amazon Machine Learning Integration

- KNIME Amazon Redshift Connector And Tools

- KNIME Conda Integration

- KNIME Columnar Table Backend

- KNIME Streaming Execution (Beta)

- KNIME BigQuery

- KNIME JDBC Driver For Oracle Database

- KNIME Microsoft JDBC Driver For SQL Server

- KNIME Vertica Driver

- KNIME Database

- KNIME Data Generation

- KNIME Connectors for Common Databases

- KNIME Distance Matrix

- KNIME Deep Learning - Keras Integration

- KNIME Deep Learning - ONNX Integration

- KNIME Deep Learning - TensorFlow Integration

- KNIME Deep Learning - TensorFlow 2 Integration

- KNIME Email Processing

- KNIME Ensemble Learning Wrappers

- KNIME Azure Cloud Connectors

- KNIME Box File Handling Extension

- KNIME Chemistry Add-Ons

- KNIME External Tool Support

- KNIME H2O Snowflake Integration

- KNIME H2O Machine Learning Integration

- KNIME H2O Machine Learning Integration - MOJO Extension

- KNIME Extension for MOJO nodes on Spark

- KNIME H2O Sparkling Water Integration

- KNIME Image Processing

- KNIME Itemset Mining

- KNIME Math Expression (JEP)

- KNIME Indexing and Searching

- KNIME MDF Integration

- KNIME Office 365 Connectors

- KNIME SharePoint List

- KNIME Open Street Map Integration

- KNIME SAS7BDAT Reader

- KNIME Excel Support

- KNIME Power BI Integration

- KNIME Tableau Integration

- KNIME Textprocessing

- KNIME Twitter Connectors

- KNIME External Tool Support (Labs)

- KNIME Google Connectors

- KNIME Google Cloud Storage Connection

- KNIME Javasnippet

- KNIME Plotly

- KNIME Quick Forms

- KNIME JavaScript Views

- KNIME JavaScript Views (Labs)

- KNIME JSON-Processing

- KNIME Extension for Apache Kafka (Preview)

- KNIME Machine Learning Interpretability Extension

- KNIME MongoDB Integration

- KNIME Neighborgram & ParUni

- KNIME Network Mining distance matrix support

- KNIME Network Mining

- KNIME Optimization extension

- KNIME Python Integration

- KNIME Interactive R Statistics Integration

- KNIME Report Designer (BIRT)

- KNIME Reporting

- KNIME REST Client Extension

- KNIME Salesforce Integration

- KNIME SAP Integration based on Theobald Xtract Universal

- KNIME Git Nodes

- KNIME Semantic Web

- KNIME Snowflake Integration

- KNIME Statistics Nodes

- KNIME Statistics Nodes (Labs)

- KNIME Timeseries nodes

- KNIME Timeseries (Labs)

- KNIME Modern UI

- KNIME Parallel Chunk Loop Nodes

- KNIME Weak Supervision

- KNIME Webanalytics

- KNIME XGBoost Integration

- KNIME XML-Processing

- SmartSheet extension

- KNIME AI Extension (Labs)

- KNIME Web Interaction (Labs)

- KNIME Nodes for Scikit-Learn (sklearn) Algorithms

- Geospatial Analytics Extension for KNIME

- KNIME Giskard Extension

- KNIME Presidio Extension

- RDKit Nodes Feature

- Vernalis KNIME Nodes

- Slack integration

- Continental Nodes for KNIME

- Lhasa public plugin

To learn more about the different extensions, see the Extensions and Integrations Guide.

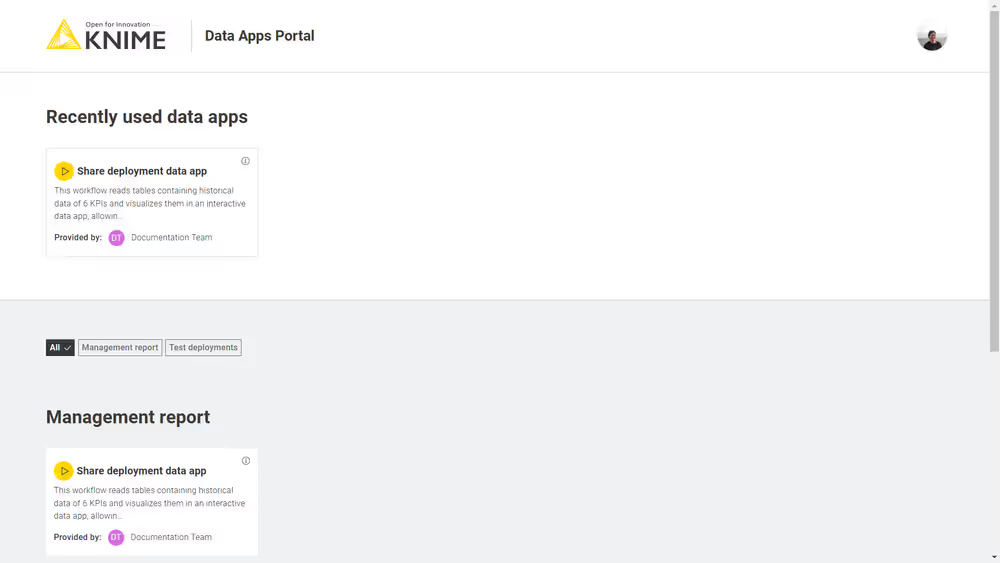

Data Apps Portal

To access the Data Apps Portal, either go to https://apps.hub.knime.com and sign in with your KNIME Hub account, or click the Data Apps Portal link in the menu on your profile page on KNIME Hub.

Every signed-in user can access this page to see all the data apps that have been shared with them and execute them at any time. Users interact with the workflow through a user interface, without needing to build a workflow or know what happens under the hood.

To execute a data app, click the corresponding tile. A new browser page opens, showing the user interface of the data app.

To share a data app deployment with someone, follow the instructions in the Manage access to data apps section.

Secrets management

KNIME Community Hub Pro and Team allow you to manage secrets that you can use in your workflows. Secrets are encrypted pieces of information, such as API keys, passwords, or tokens, that you can securely store and retrieve when needed.

To manage your secrets, navigate to your Pro profile page on KNIME Hub and select the Secrets tab from the left side menu.

To learn more about how to create, manage, and use secrets in your workflows, refer to the KNIME Secrets User Guide for Community Hub.

Access data from different sources

This section explains how to build workflows that connect to different data sources, and which authentication method is recommended for each source, so that you can then run the workflows ad hoc or schedule them on KNIME Community Hub.

Access data from Google Cloud

Refer to the KNIME Google Cloud Integration User Guide to learn how to create an API key and how to use the Google Authenticator node in general.

To access data from Google Cloud, start your workflow with a Google Authenticator node. One option is the API Key authentication method. With this method, the API key file must be stored in a location the node can access; otherwise, the execution cannot succeed.

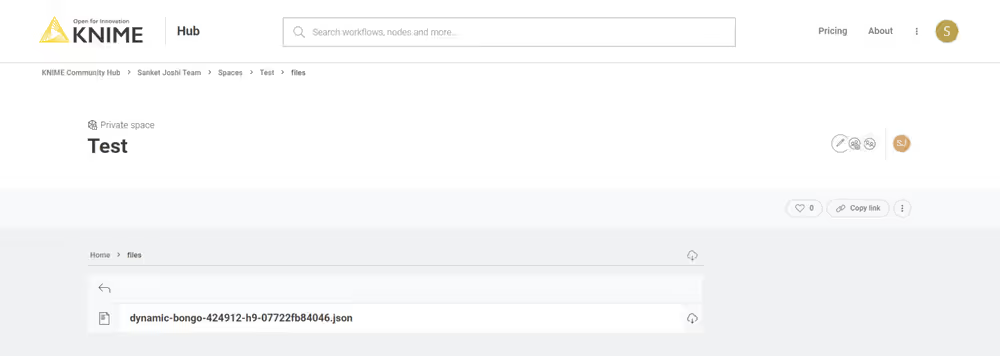

For example, if your folder structure on KNIME Community Hub (KCH) looks like the one below, with your API key file stored at the following path:

<space> -> <folder> -> <api-key.fileformat>

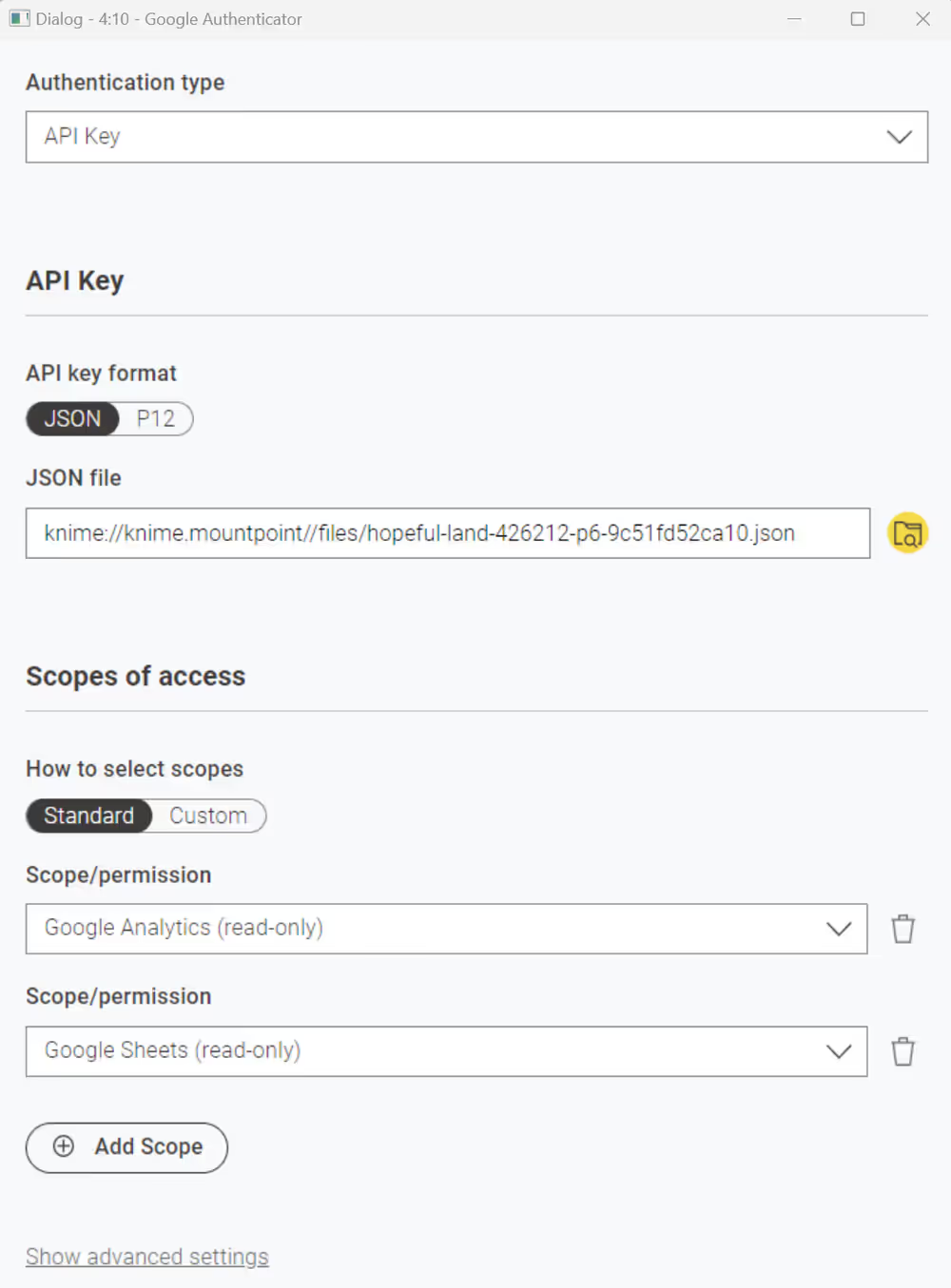

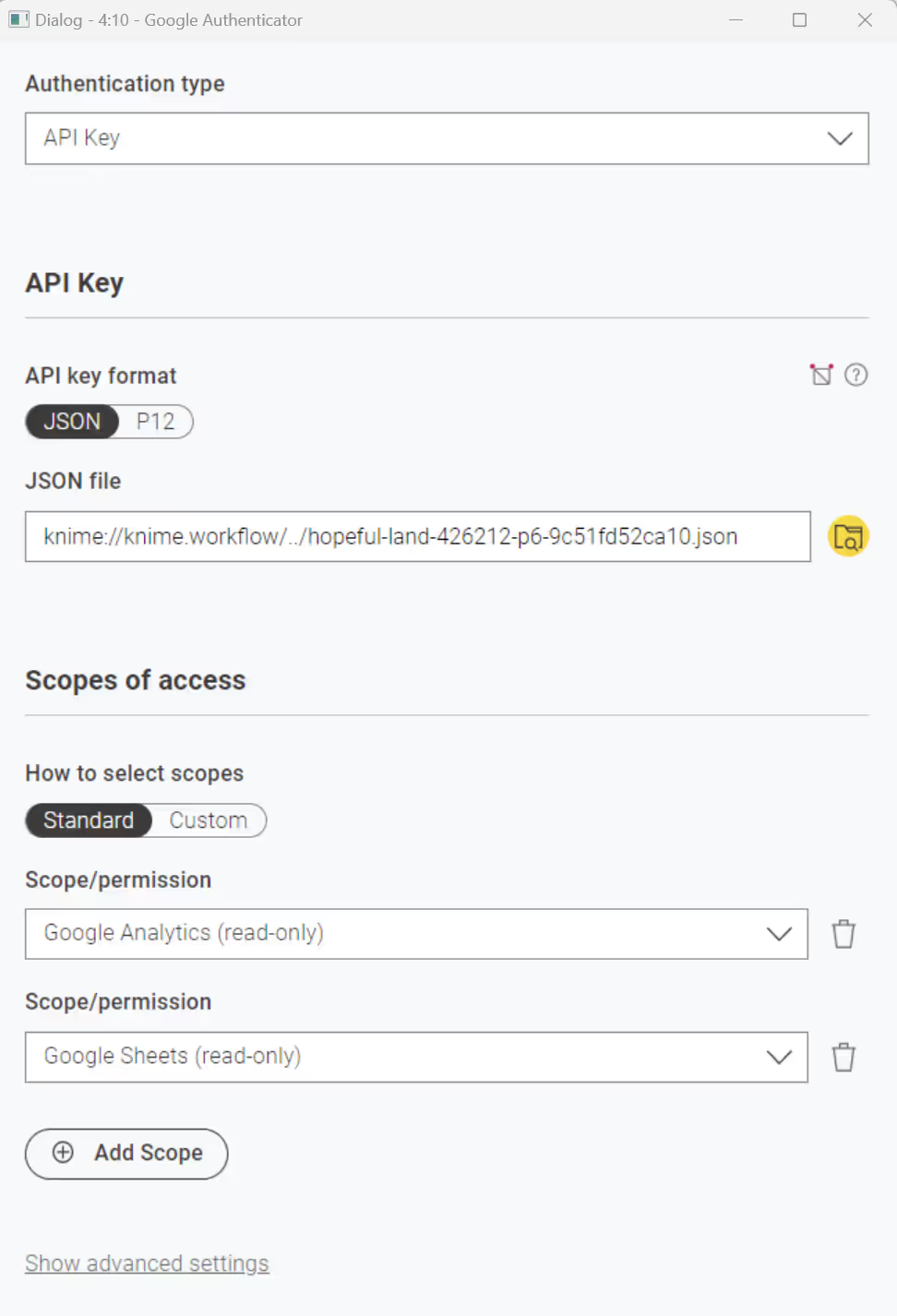

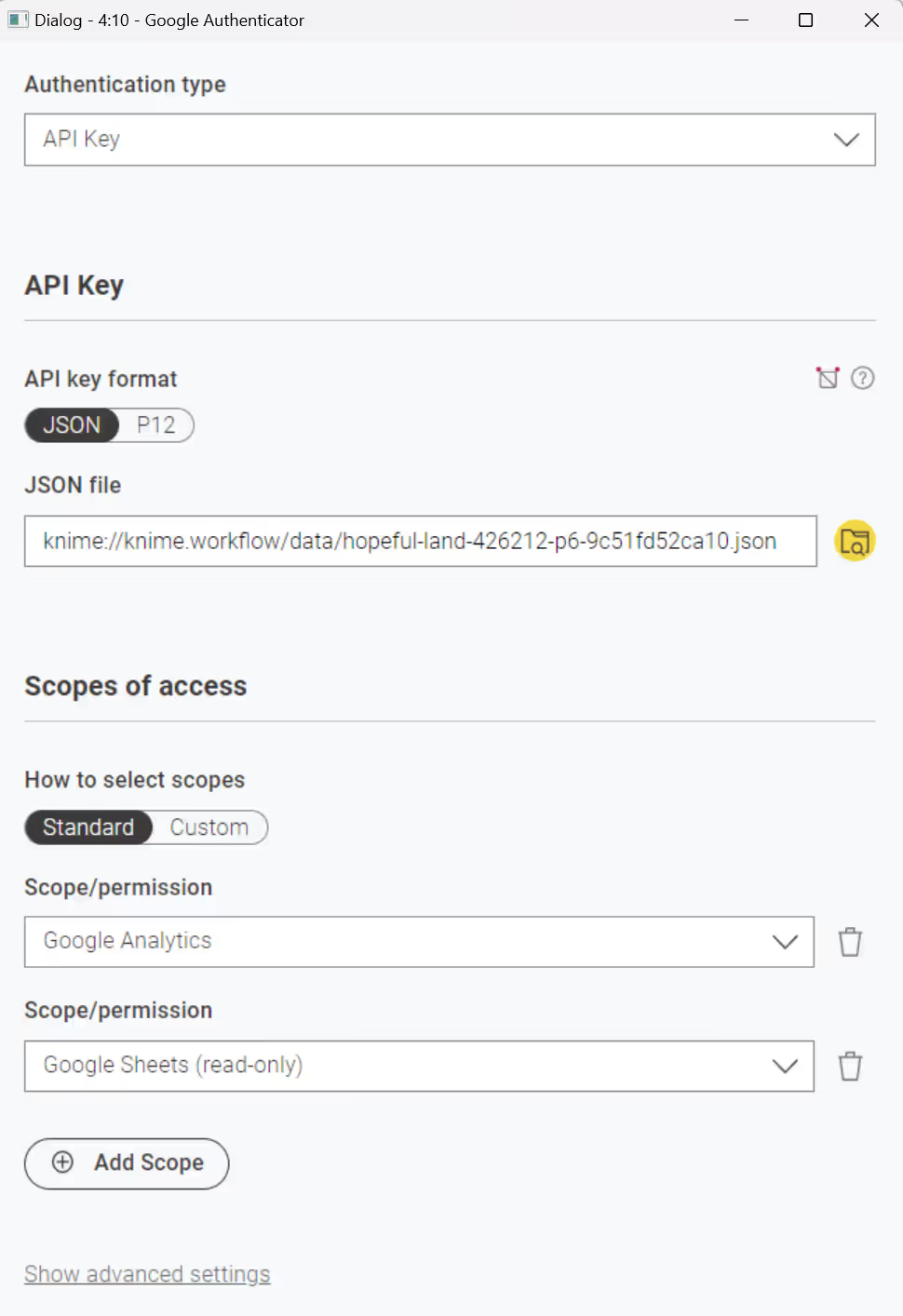

then you can access the API key using any one of the following paths in the Google Authenticator node configuration dialog.

Mountpoint relative:

knime://knime.mountpoint//<folder>/<api-key.fileformat>

Workflow relative (assuming the workflow is stored in the same <folder> as the API key file):

knime://knime.workflow/../<api-key.fileformat>

Workflow Data Area:

knime://knime.workflow/data/<api-key.fileformat>

To use this path, make sure you have stored the JSON or p12 file in the workflow's data folder. If the folder does not exist, create it, store the file inside, and then upload the workflow to KNIME Community Hub.
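The three URL forms differ only in where path resolution starts. As an illustration, the hypothetical Python helper below (not part of KNIME) assembles each variant from a folder name and a file name, copying the patterns exactly as given in this guide. The folder `credentials` and the file `api-key.json` are made-up example values.

```python
# Hypothetical helper, not part of KNIME: builds the three knime:// URL
# variants listed above for an API key file stored at
# <space> -> <folder> -> <file name>. The URL patterns are copied verbatim
# from this guide.


def api_key_urls(folder: str, file_name: str) -> dict[str, str]:
    """Return the mountpoint-relative, workflow-relative, and workflow
    data area URLs for an API key file.

    Assumes the workflow sits in the same <folder> as the key file (needed
    for the workflow-relative variant) and, for the data area variant, that
    a copy of the file is stored in the workflow's data folder.
    """
    return {
        # Mountpoint-relative URL, in the exact form given in this guide.
        "mountpoint_relative": f"knime://knime.mountpoint//{folder}/{file_name}",
        # ".." steps out of the workflow into the folder that contains it.
        "workflow_relative": f"knime://knime.workflow/../{file_name}",
        # The data folder inside the workflow itself.
        "workflow_data_area": f"knime://knime.workflow/data/{file_name}",
    }


if __name__ == "__main__":
    for kind, url in api_key_urls("credentials", "api-key.json").items():
        print(f"{kind}: {url}")
```

Only the mountpoint-relative form mentions the folder. The two workflow-based forms depend on where the workflow is stored, which is why they carry the assumptions noted in the docstring.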

Access data from Google BigQuery

To access data on Google BigQuery, first authenticate with Google using the Google Authenticator node as described above. Then connect to Google BigQuery using the Google BigQuery Connector node.

Due to license restrictions, however, you first need to install version 1.5.4 of the Google BigQuery JDBC driver, which you can download here. Details on configuring the driver, its limitations, and support are available in the Google documentation.

Once you have downloaded the driver, extract it into a directory and register it in your KNIME Analytics Platform as described here.

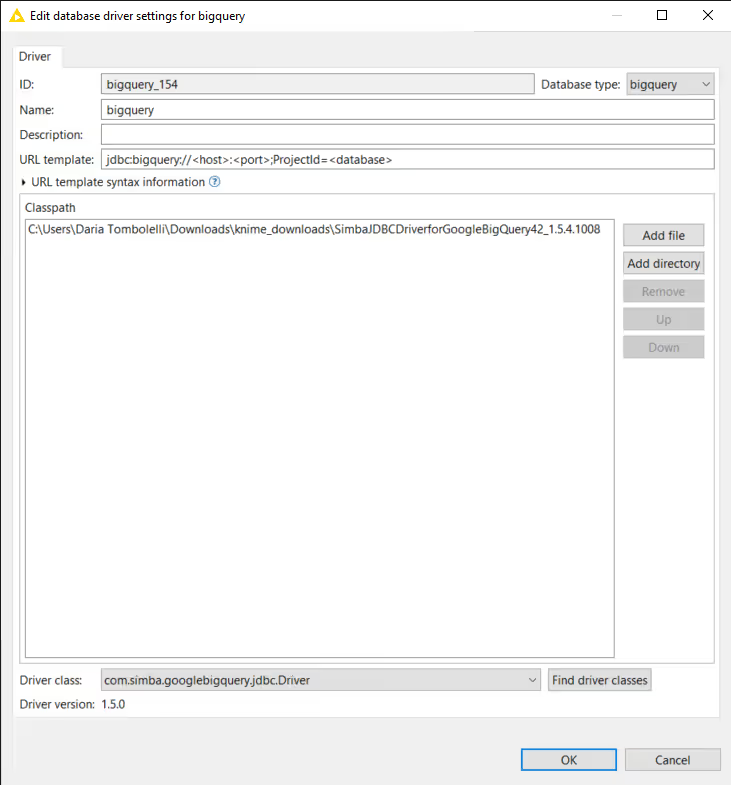

Your driver registration dialog should look like this, where <path-to-folder>\SimbaJDBCDriverforGoogleBigQuery42_1.5.4.1008 is the directory that contains all the JAR files.

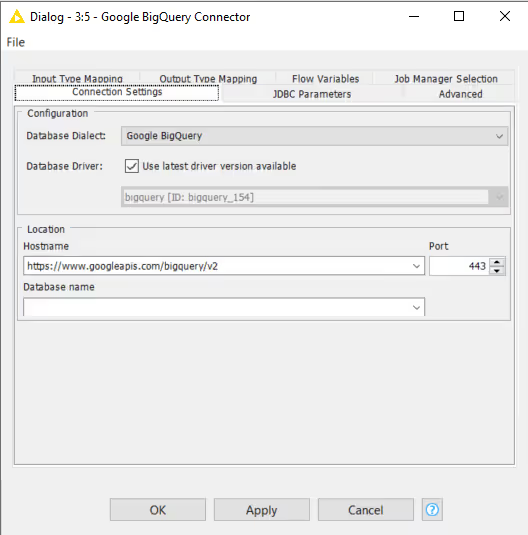
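If you prefer to script the extraction step, the minimal Python sketch below unpacks a downloaded driver archive and lists the JAR files it contains. This lets you check which files the driver registration dialog should point to. It uses only the standard library. The function name `extract_driver` is hypothetical, and the registration itself still happens in KNIME Analytics Platform as described above.

```python
# Minimal sketch (standard library only): unpack a downloaded JDBC driver
# archive and list the JAR files the driver registration dialog should
# point to. Paths passed in are placeholders, not official file names.
import zipfile
from pathlib import Path


def extract_driver(archive: Path, target_dir: Path) -> list[Path]:
    """Extract a driver ZIP into target_dir and return the JARs it contains."""
    target_dir.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(target_dir)
    # Collect every JAR, including ones in subfolders, in a stable order.
    return sorted(target_dir.rglob("*.jar"))
```

For example, `extract_driver(Path("<downloaded-archive>.zip"), Path("<path-to-folder>"))` extracts the archive into `<path-to-folder>` and returns the JAR files you should expect to see in the registration dialog. This applies to drivers shipped as an archive. A driver delivered as a single JAR file can be registered directly.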

Then configure the Google BigQuery Connector node. Make sure to select Use latest driver version available as the Database Driver. This way, the node uses the latest driver version available in its execution environment, regardless of whether it runs locally or on KNIME Community Hub.

Access data from Azure

Refer to the KNIME Azure Integration User Guide for setting up the Azure integration with KNIME.

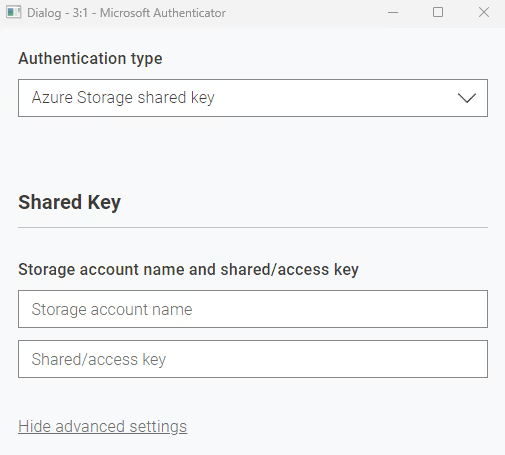

To access data from Azure, the first step when building your workflow is to use a Microsoft Authenticator node.

When configuring the node, select Azure Storage Shared Key as the authentication type.

Enter the Storage Account Name and Shared/Access key and connect to Azure.

The credentials can either be stored inside the node, which is not recommended, or passed to it with a Credentials Configuration node. The latter lets you provide the credentials when deploying the workflow, as shown in the snapshot below.

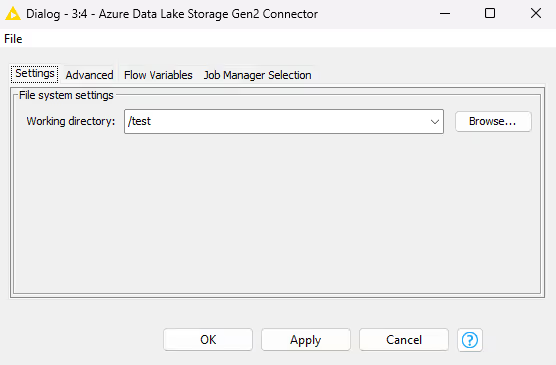

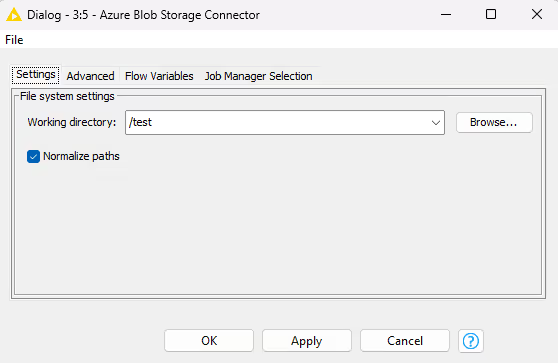

Once authenticated, use the Azure Data Lake Storage Gen2 Connector or the Azure Blob Storage Connector node and select the working directory from which you want to access the data.

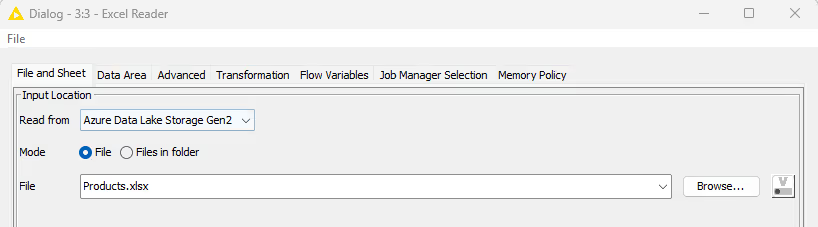

Finally, enter the file name in the appropriate file reader node to read the file.

Access data from Snowflake

Refer to the KNIME Snowflake Extension Guide for setting up Snowflake with KNIME.

To access data from Snowflake, the first step when building your workflow is to use a Snowflake Connector node.

The credentials can either be stored inside the node, which is not recommended, or passed to it with a Credentials Configuration node. The latter lets you provide the credentials when deploying the workflow.

Access data from Amazon Athena

To access data on Amazon Athena, first authenticate with Amazon using the Amazon Authenticator node. Then connect to Amazon Athena using the Amazon Athena Connector node.

Due to license restrictions, however, you first need to install version 2.1.1 of the Amazon Athena JDBC driver, which you can download here. Details on configuring the driver, its limitations, and support are available in the Amazon Athena documentation.

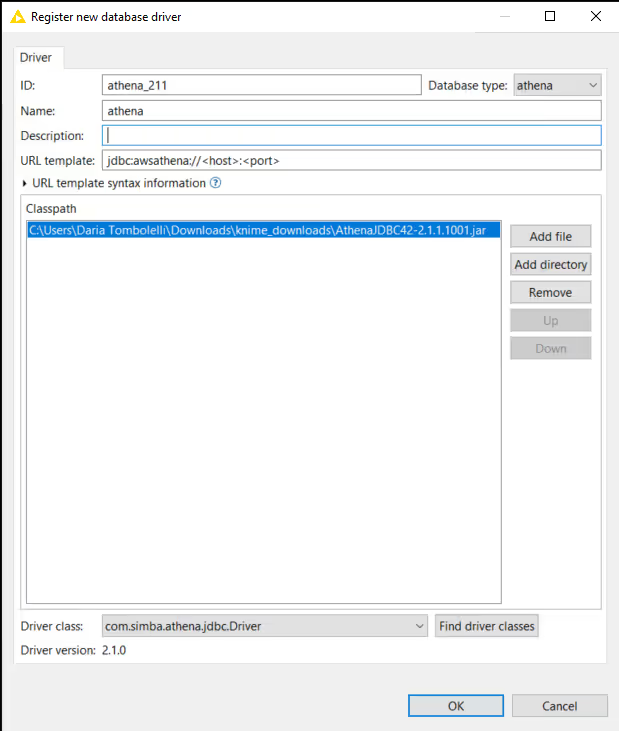

Once you have downloaded the driver, extract it into a directory and register it in your KNIME Analytics Platform as described here.

Your driver registration dialog should look like this, where <path-to-folder>\AthenaJDBC42-2.1.1.1001.jar is the JAR file you downloaded.

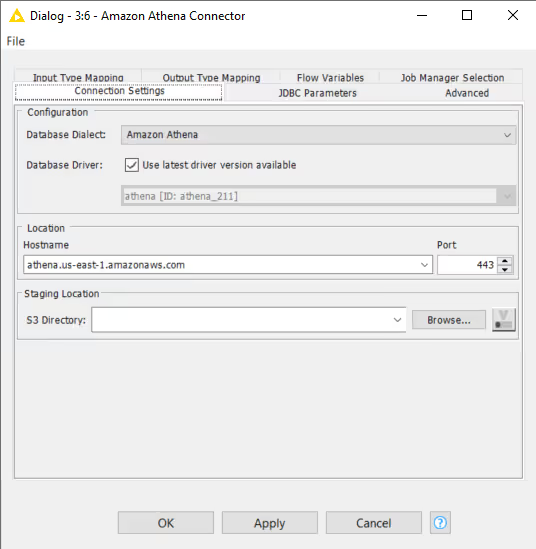

Then configure the Amazon Athena Connector node. Make sure to select Use latest driver version available as the Database Driver. This way, the node uses the latest driver version available in its execution environment, regardless of whether it runs locally or on KNIME Community Hub.

Advanced settings

IP whitelisting

Relying solely on IP whitelisting for security is insufficient and potentially risky.

KNIME Community Hub executors use a dedicated static IP address: 3.66.133.75. Whitelisting this address on your data source allows your workflows to reach the remote data location.

Because this IP address is shared by all executors on Community Hub, IP whitelisting alone is not recommended as a security measure: workflows of other Hub users run from the same address and could also reach your data.

It is strongly advised to combine IP whitelisting with other security mechanisms, such as credential-based authentication. In that case, rotating the credentials periodically is a recommended best practice.
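To illustrate why the shared IP address is not enough on its own, the Python sketch below shows how a data service you control might combine the allowlist with a credential check. It is not KNIME code. The function `is_request_allowed` and the token value are hypothetical, and in practice this check would normally live in your database, firewall, or API gateway configuration. Only the executor IP address comes from this guide.

```python
# Illustrative sketch, not KNIME code: a data service that accepts a request
# only if it comes from an allowlisted address AND carries a valid credential.
# The token value and function name are hypothetical.
import hmac
from ipaddress import ip_address, ip_network

# Shared static IP of the Community Hub executors, as a single-address network.
ALLOWED_NETWORKS = [ip_network("3.66.133.75/32")]

# Hypothetical secret issued only to your team; rotate it periodically.
EXPECTED_TOKEN = "replace-me-and-rotate-regularly"


def is_request_allowed(source_ip: str, token: str) -> bool:
    """Allow a request only if both the IP check and the credential check pass."""
    from_allowed_ip = any(ip_address(source_ip) in net for net in ALLOWED_NETWORKS)
    # compare_digest compares in constant time to avoid timing side channels.
    has_valid_token = hmac.compare_digest(token, EXPECTED_TOKEN)
    return from_allowed_ip and has_valid_token
```

Every Community Hub executor shares 3.66.133.75, so a workflow belonging to another user passes the IP check but is still rejected without the credential. Rotating `EXPECTED_TOKEN` limits how long a leaked credential remains useful.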